I'm building a large (Satis) package repository in a GitLab CI build step and want to host it on GitLab Pages (on-premises install). This works flawlessly until the public folder gets big, which happens as soon as it stores not only the package versions but also the downloads. I added a final step to the pages job to show the folder size:

$ du -h public

2.7G public

Creating cache default...

public: found 564 matching files

vendor: found 3808 matching files

Created cache

Uploading artifacts...

public: found 564 matching files

ERROR: Uploading artifacts to coordinator... too large archive id=494 responseStatus=413 Request Entity Too Large status=413 Request Entity Too Large token=kDf7gQEd

FATAL: Too large

ERROR: Job failed: exit code 1

Of course that is a whole lot. I managed to delete some outdated minor versions and got down to

950MB public

Still the same problem. After some investigation there seem to be two relevant limits:

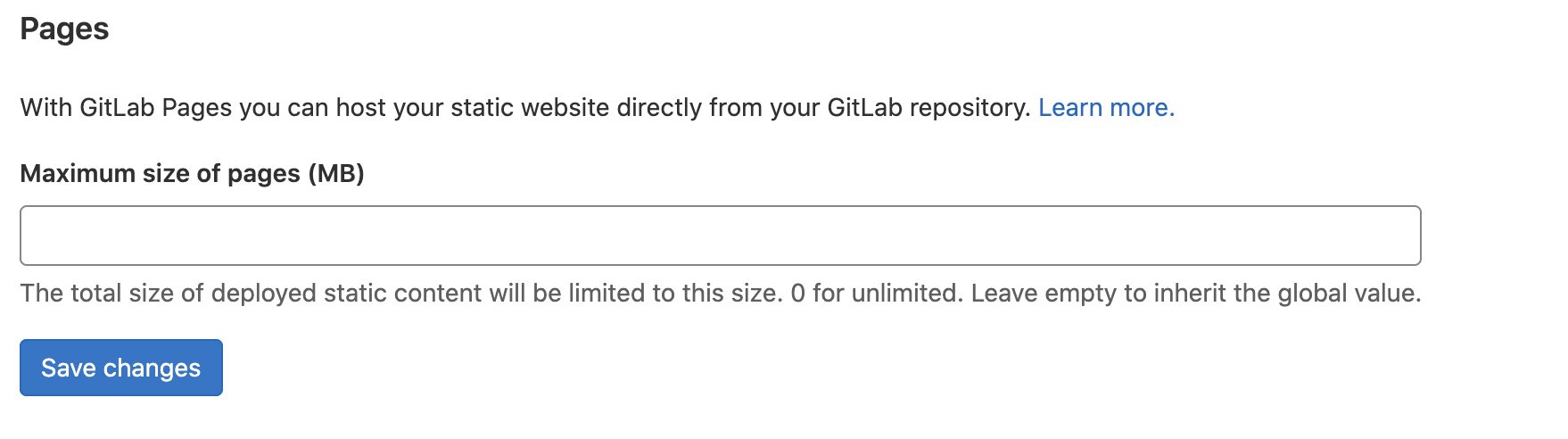

- GitLab Pages system configuration: Max Pages Size (was set to 0 for unlimited)

- NGINX max POST size in /etc/gitlab/gitlab.rb (the setting was disabled, in which case it seems to default to 250M), which I changed to

nginx['client_max_body_size'] = '1024m'
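A change in gitlab.rb only takes effect after `sudo gitlab-ctl reconfigure`, so it can be worth confirming that the value reached the rendered NGINX configuration. The following is a minimal sketch, not a definitive procedure: `show_body_limit` is a hypothetical helper name, and the path in the usage note is an assumption about a default Omnibus install.

```shell
#!/bin/sh
# Hypothetical helper: print every client_max_body_size directive
# found in the config file given as the first argument, with line numbers.
# Returns non-zero when the directive is missing from that file.
show_body_limit() {
    grep -n 'client_max_body_size' "$1"
}
```

Assuming the rendered config lives at `/var/opt/gitlab/nginx/conf/gitlab-http.conf`, `show_body_limit /var/opt/gitlab/nginx/conf/gitlab-http.conf` should list the `1024m` value if the change was applied.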

Still the same problem. Even after deleting more versions, down to a folder size of 580M, the same error persists.

As soon as I disable downloads and thus only have <10M, everything works.
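Since `du` reports the folder size and not the size of the uploaded archive, it may help to compare an approximate archive size against a candidate limit before the upload step. This is a rough sketch under stated assumptions: `archive_size_bytes` and `fits_limit` are hypothetical helper names, and a gzip-compressed tar stream is only a proxy for the archive the runner actually builds.

```shell
#!/bin/sh
# Hypothetical helper: approximate the archive size of a directory in bytes.
# Assumption: a tar.gz stream is only a rough stand-in for the runner's archive.
archive_size_bytes() {
    tar -czf - -C "$1" . | wc -c | tr -d ' '
}

# Hypothetical helper: succeed when the approximate archive size of
# directory $1 is at most $2 megabytes; print both values for the job log.
fits_limit() {
    size=$(archive_size_bytes "$1")
    limit=$(( $2 * 1024 * 1024 ))
    echo "archive: ${size} bytes, limit: ${limit} bytes"
    [ "$size" -le "$limit" ]
}
```

For example, `fits_limit public 250 || echo "over the assumed 250M limit"` could run as the last script line of the pages job; trying a few candidate values shows which limit the 580M and <10M cases fall on either side of.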

How can I debug the cause of this? Or is there some other hidden configuration?

Max artifact size? docs.gitlab.com/ee/user/admin_area/settings/… IIRC, the default is 100 MB. – Interlace