I'm using LangChain to build an NL application. I want the interactions with the LLM to be recorded in a variable that I can use for logging and debugging. I have created a very simple chain:

```python
from typing import Any, Dict

from langchain import PromptTemplate
from langchain.callbacks.base import BaseCallbackHandler
from langchain.chains import LLMChain
from langchain.llms import OpenAI

llm = OpenAI()
prompt = PromptTemplate.from_template("1 + {number} = ")

handler = MyCustomHandler()  # class defined below

chain = LLMChain(llm=llm, prompt=prompt, callbacks=[handler])
chain.run(number=2)
```

To record what's going on, I have created a custom CallbackHandler:

```python
class MyCustomHandler(BaseCallbackHandler):
    def on_text(self, text: str, **kwargs: Any) -> Any:
        print(f"Text: {text}")
        self.log = text

    def on_chain_start(
        self, serialized: Dict[str, Any], inputs: Dict[str, Any], **kwargs: Any
    ) -> Any:
        """Run when chain starts running."""
        print("Chain started running")
```

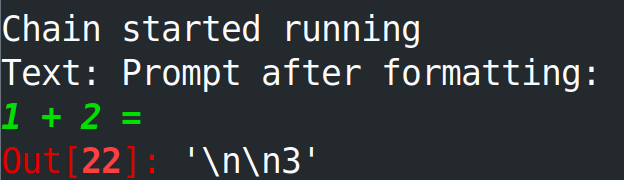

This works more or less as expected, but it has some side effects that I cannot figure out where they are coming from. The output is:

And the `handler.log` variable contains:

```
'Prompt after formatting:\n\x1b[32;1m\x1b[1;3m1 + 2 = \x1b[0m'
```

Where do the "Prompt after formatting" header and the ANSI escape codes that color the text green come from? Can I get rid of them?
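As a stopgap while the origin is unclear, the text can be normalized before it is stored. This is a minimal sketch, assuming every `on_text` payload has the shape shown above: an optional `Prompt after formatting:` header plus ANSI SGR color sequences such as `\x1b[32;1m`. `clean_callback_text` is a hypothetical helper, not a LangChain function.

```python
import re

# Matches ANSI SGR escape sequences such as "\x1b[32;1m" or "\x1b[0m":
# ESC, "[", optional digits/semicolons, terminated by "m".
ANSI_SGR = re.compile(r"\x1b\[[0-9;]*m")

# Header seen in handler.log above; assumed here to be a fixed string.
PROMPT_HEADER = "Prompt after formatting:\n"


def clean_callback_text(text: str) -> str:
    """Hypothetical helper: drop ANSI color codes, then the prompt header."""
    plain = ANSI_SGR.sub("", text)
    if plain.startswith(PROMPT_HEADER):
        plain = plain[len(PROMPT_HEADER):]
    return plain


logged = "Prompt after formatting:\n\x1b[32;1m\x1b[1;3m1 + 2 = \x1b[0m"
print(repr(clean_callback_text(logged)))  # prints '1 + 2 = '
```

Inside the handler, this would become `self.log = clean_callback_text(text)`. The result is just the rendered template `"1 + {number} = "` with `number=2`. This treats the symptom only and does not explain why the header and colors are added in the first place.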

More generally, am I missing a better way to use the callback system to log what the application does? The documentation on this seems sparse.