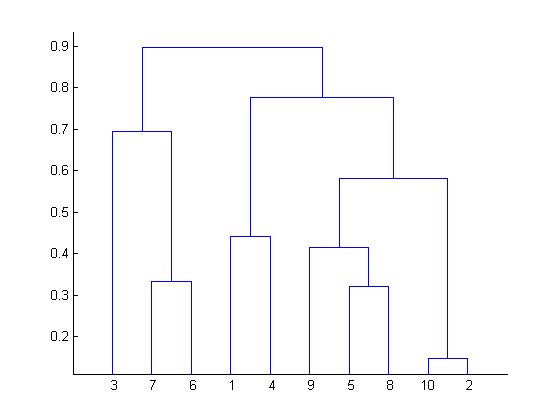

A dendrogram is a data structure used with hierarchical clustering algorithms that groups clusters at different "heights" of a tree - where the heights correspond to distance measures between clusters.

After a dendrogram is created from some input data set, it's often a further challenge to figure out where to "cut" the dendrogram, meaning selecting a height such that only clusters below that height are considered meaningful.

It's not always clear at what height to cut a dendrogram, but some algorithms exist, such as the DynamicTreeCut algorithm, that attempt to programatically select meaningful clusters from the dendrogram.

See:

https://stats.stackexchange.com/questions/3685/where-to-cut-a-dendrogram

Cutting dendrogram at highest level of purity

So I've been reading over the DynamicTreeCut algorithm, as well as a Java implementation of the algorithm. I understand how the algorithm works and what it's doing, in terms of a step by step breakdown of what's going on. However, I am failing to understand how this algorithm is doing anything meaningful. I think I am missing some key concept here.

In general, the algorithm iterates over a "height sequence" from a dendrogram. I'm not certain, but I assume that a "height sequence" just means the values along the Y axis of a dendrogram, i.e. the various heights at which cluster joins occur. If that's the case, we can assume that a "height sequence" is sorted in ascending order.

Then the algorithm calls for taking a "reference height", l, and subtracting it from each height in the input "height sequence". This gives you a vector of differences (D) between each height h[i] in the height sequence and the reference height l.

Then the algorithm attempts to find "transition points" - which are points in the differences vector where D[i] > 0 and D[i+1] < 0. In other words, points in the differences vector where the difference value transitioned from being positive to being negative.

And right here I am totally lost. I don't understand how these transition points can ever be meaningful. Firstly, it's my understanding that the input height sequence H is just the values on the Y axis of the dendrogram. So therefore the height sequence H should be in ascending order. Therefore, how can there ever be a point in the differences vector where we transition from positive to negative?

For example:

Suppose our input height sequence H is {1, 1.5, 2, 2.5, 3, 7, 9} and our reference value l is the average height (which would be 3.7). So if we create a difference vector D by subtracting l from each height in H, we'd get {-2.7, -2.2, -1.7, -1.2, -0.7, 3.3, 5.3}. So clearly there is no transition point here, nor could there ever be, because there are no points in the difference vector where D[i] > 0 and D[i+1] < 0, since the height sequence H is in ascending order.

So clearly I am totally misunderstanding something fundamental about this algorithm. Perhaps I am not understanding what is meant by the "height sequence". I am assuming it is simply the values on the Y-axis from the dendrogram, but clearly that doesn't make any sense with regard to what the algorithm is actually doing. Unfortunately, the authors don't really explain what is meant by a "dendrogram height sequence", nor does it appear to be some kind of standard terminology in use by the data science community.

So can something explain what the DynamicTreeCut algorithm is trying to achieve here, and where my understanding is going wrong?