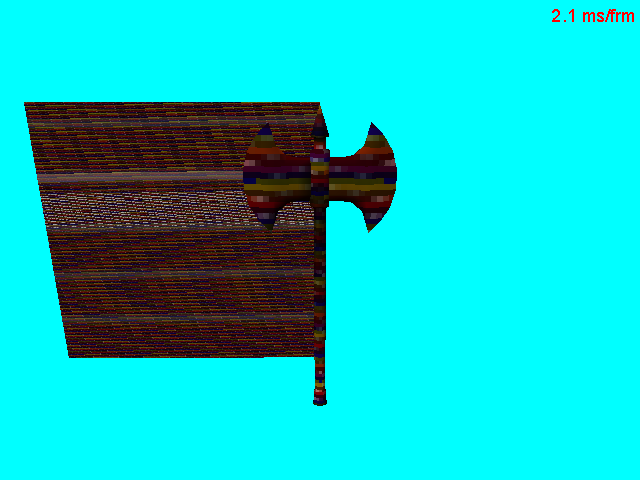

I'm trying use SDL2 to load a texture for OpenGL rendering of Wavefront Objects (currently I'm testing with the fixed pipeline, but I eventually plan to move to shaders). The problem is that the loaded texture applied to a quad (and a model that uses a small part in the bottom right of the texture) looks like that:

(source: image-upload.de)

The image loads fine and looks perfectly normal when drawn with SDL functions, so the conversion to an OpenGL texture is probably what's broken. Note that I have alpha blending enabled and the texture is still completely opaque. So the values are not completely random, and probably not uninitialized memory. This is my code for converting the surface, cobbled together from various tutorials and questions on this site:

```cpp
GLuint glMakeTexture(bool mipmap = false, int request_size = 0) { // Only works on 32-bit surfaces
    GLuint texture = 0;
    if ((bool)_surface) {
        int w, h;
        if (request_size) { // NPOT and rectangular textures have been widely supported for at least a decade; you should never need this...
            w = h = request_size;
            if (w < _surface->w || h < _surface->h) return 0; // No can do.
        } else {
            w = _surface->w;
            h = _surface->h;
        }

        SDL_LockSurface(&*_surface);
        std::cout << "Bytes per pixel: " << (int)_surface->format->BytesPerPixel << std::endl;

        Uint8 *temp = (Uint8*)malloc(w * h * sizeof(Uint32)); // Yes, I know it's 4...
        if (!temp) {
            SDL_UnlockSurface(&*_surface); // don't leave the surface locked on failure
            return 0;
        }

        // Optimized code
        /*for (int y = 0; y < _surface->h; y++) { // Pitch is given in bytes, so we need to cast to 8 bit here!
            memcpy(temp + y*w*sizeof(Uint32), (Uint8*)_surface->pixels + y*_surface->pitch, _surface->w*sizeof(Uint32));
            if (w > _surface->w) memset(temp + y*w*sizeof(Uint32) + _surface->w*sizeof(Uint32), 0, (w - _surface->w)*sizeof(Uint32));
        }
        for (int y = _surface->h; y < h; y++) memset(temp + y*w*sizeof(Uint32), 0, w*sizeof(Uint32));
        GLenum format = (_surface->format->Rmask == 0xFF) ? GL_RGBA : GL_BGRA;*/

        // Naive code for testing
        for (int y = 0; y < _surface->h; y++)
            for (int x = 0; x < _surface->w; x++) {
                int mempos = (x + y * w) * 4;
                SDL_Color pcol = get_pixel(x, y);
                temp[mempos]     = pcol.r;
                temp[mempos + 1] = pcol.g;
                temp[mempos + 2] = pcol.b;
                temp[mempos + 3] = pcol.a;
            }
        GLenum format = GL_RGBA;

        SDL_UnlockSurface(&*_surface);

        glGenTextures(1, &texture);
        glBindTexture(GL_TEXTURE_2D, texture);
        // GL_GENERATE_MIPMAP is a texture parameter: the first argument is the target, not the texture name
        if (mipmap) glTexParameteri(GL_TEXTURE_2D, GL_GENERATE_MIPMAP, GL_TRUE);
        glTexImage2D(GL_TEXTURE_2D, 0, format, w, h, 0, format, GL_UNSIGNED_BYTE, temp);
        if (mipmap) glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
        else glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

        free(temp); // Always clean up...
    }
    return texture;
}
```

EDIT: `_surface` is actually a `std::shared_ptr<SDL_Surface>`, which is why I use `&*` when (un)locking it.
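Here is a minimal sketch of how such a member could be set up. It assumes the surface is owned by a `std::shared_ptr` with a custom deleter; in real code that deleter would be `SDL_FreeSurface`. `FakeSurface`, `free_fake_surface`, `make_owned_surface` and `raw` are hypothetical stand-ins so the snippet compiles without SDL. The sketch shows that `&*_surface` and `_surface.get()` produce the same raw pointer, so either can be passed to `SDL_LockSurface`.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for SDL_Surface so the sketch compiles without SDL.
struct FakeSurface {
    int w;
    int h;
};

// Hypothetical deleter; with SDL this would be SDL_FreeSurface.
int g_freed = 0;
void free_fake_surface(FakeSurface* s) {
    ++g_freed;
    delete s;
}

// Wraps a raw surface pointer so the deleter runs when the last owner goes away.
std::shared_ptr<FakeSurface> make_owned_surface(int w, int h) {
    assert(w > 0 && h > 0);
    return std::shared_ptr<FakeSurface>(new FakeSurface{w, h}, free_fake_surface);
}

// &*p dereferences the shared_ptr and takes the address again. p.get() is the
// more direct spelling and returns the same raw pointer. It also returns nullptr
// for an empty pointer, whereas *p on an empty shared_ptr is undefined behaviour.
FakeSurface* raw(const std::shared_ptr<FakeSurface>& p) {
    return p.get();
}
```

Functionally the two spellings are equivalent for a non-empty pointer. `.get()` is simply harder to misuse.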

By the way, SDL reports that the surface is formatted as 32-bit RGBA on my machine; I already checked that.
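Both versions of the conversion code decide the channel order from `Rmask`, so it helps to see what a mask actually says about memory layout. Here is a small sketch that needs no SDL. It assumes a 32-bit pixel stored as one `uint32_t`, which is the case when `format->BytesPerPixel == 4`. A mask describes bit positions within that integer value, so the byte a channel occupies in memory depends on host endianness. `channel_shift`, `byte_offset_of` and `extract_channel` are hypothetical helpers. They do roughly the work a `get_pixel`-style function (whose implementation isn't shown in the question) must do for a 32-bit surface.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Bit position of the lowest set bit of a channel mask, e.g. 0x0000FF00 -> 8.
int channel_shift(std::uint32_t mask) {
    assert(mask != 0);
    int shift = 0;
    while (!(mask & 1u)) { mask >>= 1; ++shift; }
    return shift;
}

// Byte offset in memory of an 8-bit channel when the pixel is stored as a uint32_t.
int byte_offset_of(std::uint32_t mask) {
    const std::uint32_t probe = 1;
    unsigned char first;
    std::memcpy(&first, &probe, 1);
    const bool little_endian = (first == 1);
    const int byte_in_value = channel_shift(mask) / 8; // 0 = least significant byte
    return little_endian ? byte_in_value : 3 - byte_in_value;
}

// Reads one channel of pixel (x, y) from a 32-bit buffer whose rows are `pitch` bytes apart.
std::uint8_t extract_channel(const unsigned char* pixels, int pitch, int x, int y, std::uint32_t mask) {
    std::uint32_t value;
    std::memcpy(&value, pixels + y * pitch + x * 4, sizeof value);
    return static_cast<std::uint8_t>((value & mask) >> channel_shift(mask));
}
```

On a little-endian x86 machine, `Rmask == 0x000000FF` therefore means red is the first byte in memory, which matches `GL_RGBA` with `GL_UNSIGNED_BYTE`. Packed types such as `GL_UNSIGNED_INT_8_8_8_8_REV` are defined in terms of the 32-bit value rather than individual bytes, so they line up with SDL's masks regardless of byte order.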

The code that binds the texture and draws the quad is here:

```cpp
glEnable(GL_TEXTURE_2D);
glTexEnvf(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_MODULATE);
glBindTexture(GL_TEXTURE_2D, _texture[MAP_KD]);

static bool once = true;
if (once) {
    int tex;
    glGetIntegerv(GL_TEXTURE_BINDING_2D, &tex);
    bool valid = glIsTexture(tex);
    std::cout << tex << " " << valid << std::endl;
    once = false;
}

glBegin(GL_TRIANGLE_STRIP);
//glColor3f(1.f, 1.f, 1.f);
glNormal3f(0, 1, 0);
glTexCoord2f(0.f, 0.f); glVertex3f(0, 0, 0);
glTexCoord2f(0.f, 1.f); glVertex3f(0, 0, 1);
glTexCoord2f(1.f, 0.f); glVertex3f(1, 0, 0);
glTexCoord2f(1.f, 1.f); glVertex3f(1, 0, 1);
glEnd();
```

The axe is drawn later from an index list; the code is way too long to share here (and besides, it works fine apart from the texture).

I also tried the naive approach found in many tutorials, which passes `_surface->pixels` directly to `glTexImage2D()`. That doesn't help either, and I've heard it's the wrong way to do it anyway because in general `pitch != width * BytesPerPixel`. The commented-out "optimized" code produces exactly the same result, as expected; I wrote the naive loop below it to make testing easier. Setting all pixels to a specific color or making the texture partially transparent works as expected, so I assume OpenGL loads the values in `temp` correctly. Most likely I'm misunderstanding the memory layout of SDL2 surfaces.
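The point about pitch can be illustrated without SDL or OpenGL. The sketch below assumes a buffer laid out like `SDL_Surface::pixels`: `h` rows, each starting `pitch` bytes after the previous one, where only the first `w * bytes_per_pixel` bytes of each row are pixel data. `pack_rows` is a hypothetical helper that copies each row into a tightly packed buffer. That is the layout `glTexImage2D` reads when `GL_UNPACK_ROW_LENGTH` is 0 and `GL_UNPACK_ALIGNMENT` evenly divides the row size.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Copies h rows of w pixels out of a pitched buffer into a tightly packed one.
std::vector<unsigned char> pack_rows(const unsigned char* pixels, int w, int h,
                                     int pitch, int bytes_per_pixel) {
    const std::size_t row_bytes = static_cast<std::size_t>(w) * bytes_per_pixel;
    assert(pitch >= 0 && static_cast<std::size_t>(pitch) >= row_bytes);
    std::vector<unsigned char> packed(row_bytes * h);
    for (int y = 0; y < h; ++y) {
        // Source rows are `pitch` bytes apart; destination rows are `row_bytes` apart.
        std::memcpy(packed.data() + y * row_bytes,
                    pixels + static_cast<std::size_t>(y) * pitch,
                    row_bytes);
    }
    return packed;
}
```

Any padding at the end of each source row is skipped. Alternatively, `_surface->pixels` can be passed directly, as long as the unpack parameters describe the pitch.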

FINAL EDIT: Solution (resetting GL_UNPACK_ROW_LENGTH is key, thanks to Peter Clark):

```cpp
GLuint glTexture(bool mipmap = false) {
    GLuint texture = 0;
    if ((bool)_surface) {
        GLenum texture_format, internal_format, tex_type;
        if (_surface->format->BytesPerPixel == 4) {
            if (_surface->format->Rmask == 0x000000ff) {        // e.g. SDL_PIXELFORMAT_ABGR8888 (R,G,B,A bytes on little-endian)
                texture_format = GL_RGBA;
                tex_type = GL_UNSIGNED_INT_8_8_8_8_REV;
            } else if (_surface->format->Rmask == 0x00ff0000) { // e.g. SDL_PIXELFORMAT_ARGB8888
                texture_format = GL_BGRA;
                tex_type = GL_UNSIGNED_INT_8_8_8_8_REV;
            } else {                                            // assumed SDL_PIXELFORMAT_BGRA8888 (Rmask 0x0000ff00)
                texture_format = GL_BGRA;
                tex_type = GL_UNSIGNED_INT_8_8_8_8;
            }
            internal_format = GL_RGBA8;
        } else { // assumed 3 bytes per pixel; palettized surfaces are not handled
            if (_surface->format->Rmask == 0x000000ff) {
                texture_format = GL_RGB;
                tex_type = GL_UNSIGNED_BYTE;
            } else {
                texture_format = GL_BGR;
                tex_type = GL_UNSIGNED_BYTE;
            }
            internal_format = GL_RGB8;
        }

        int alignment = 8;
        while (_surface->pitch % alignment) alignment >>= 1; // x%1==0 for any x
        glPixelStorei(GL_UNPACK_ALIGNMENT, alignment);

        int expected_pitch = (_surface->w * _surface->format->BytesPerPixel + alignment - 1) / alignment * alignment;
        if (_surface->pitch - expected_pitch >= alignment) // Alignment alone won't solve it now
            glPixelStorei(GL_UNPACK_ROW_LENGTH, _surface->pitch / _surface->format->BytesPerPixel);
        else
            glPixelStorei(GL_UNPACK_ROW_LENGTH, 0);

        glGenTextures(1, &texture);
        glBindTexture(GL_TEXTURE_2D, texture);
        glTexImage2D(GL_TEXTURE_2D, 0, internal_format, _surface->w, _surface->h, 0, texture_format, tex_type, _surface->pixels);

        if (mipmap) {
            glGenerateMipmap(GL_TEXTURE_2D);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
        } else {
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_BASE_LEVEL, 0);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAX_LEVEL, 0);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        }
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

        // Restore the default unpack state so later uploads aren't affected
        glPixelStorei(GL_UNPACK_ALIGNMENT, 4);
        glPixelStorei(GL_UNPACK_ROW_LENGTH, 0);
    }
    return texture;
}
```
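The unpack-parameter logic in the solution can be checked in isolation. The sketch below re-implements it as a pure function, `choose_unpack` (hypothetical). It pairs that function with `unpack_stride`, which computes the row stride in bytes that OpenGL derives from `GL_UNPACK_ALIGNMENT` and `GL_UNPACK_ROW_LENGTH` for `GL_UNSIGNED_BYTE` data and 32-bit packed types. The stride is the row length (or the width, when the row length is 0) times the pixel size, rounded up to a multiple of the alignment. The sketch assumes `pitch >= w * bytes_per_pixel`, and the checks only use 4-byte-aligned pitches.

```cpp
#include <cassert>

// Values that would be passed to glPixelStorei for GL_UNPACK_ALIGNMENT / GL_UNPACK_ROW_LENGTH.
struct UnpackParams {
    int alignment;  // 1, 2, 4 or 8
    int row_length; // 0 means "use the image width"
};

// Mirrors the solution: the largest alignment (at most 8) that divides the pitch,
// plus an explicit row length only when the padding exceeds what alignment can express.
UnpackParams choose_unpack(int w, int bytes_per_pixel, int pitch) {
    assert(pitch >= w * bytes_per_pixel);
    int alignment = 8;
    while (pitch % alignment) alignment >>= 1;
    const int expected_pitch = (w * bytes_per_pixel + alignment - 1) / alignment * alignment;
    const int row_length = (pitch - expected_pitch >= alignment) ? pitch / bytes_per_pixel : 0;
    return UnpackParams{alignment, row_length};
}

// Row stride in bytes that GL uses when reading client memory with these parameters.
int unpack_stride(int w, int bytes_per_pixel, UnpackParams p) {
    const int pixels_per_row = p.row_length > 0 ? p.row_length : w;
    const int raw = pixels_per_row * bytes_per_pixel;
    return (raw + p.alignment - 1) / p.alignment * p.alignment;
}
```

If `unpack_stride` matches the surface pitch, every row is read from the right place. A stale non-zero `GL_UNPACK_ROW_LENGTH` left over from other code changes that stride for every later upload. For example, a row length of 300 on a 256-pixel-wide RGBA upload would make each row start 176 bytes later than the previous one should, skewing the image. That is consistent with resetting it being the key fix.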