After some experimenting, I've found that it is in fact possible to have two persistence units that use non-XA resources within a container-managed transaction. However, it may be implementation-dependent. TL;DR at the bottom.

JTA should require XA resources if more than one resource participates in a transaction. It uses X/Open XA for the purpose of allowing distributed transactions, for example over multiple databases, or a database and JMS queue. There is apparently some optimization (it may be GlassFish-specific, I'm not sure) that allows the last participant to be non-XA. In my use-case, however, both persistence units are for the same database (but a different set of tables, with some possible overlap) and both are non-XA. This means we'd expect an exception to be thrown when the second resource does not support XA.

Suppose this is our persistence.xml

<?xml version="1.0" encoding="UTF-8"?>

<persistence version="2.0"

xmlns="http://java.sun.com/xml/ns/persistence" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://java.sun.com/xml/ns/persistence http://java.sun.com/xml/ns/persistence/persistence_2_0.xsd">

<persistence-unit name="playground" transaction-type="JTA">

<provider>org.hibernate.ejb.HibernatePersistence</provider>

<jta-data-source>jdbc/playground</jta-data-source>

<properties>

<property name="hibernate.dialect" value="be.dkv.hibernate.SQLServer2012Dialect" />

<property name="hibernate.hbm2ddl.auto" value="update" />

<property name="hibernate.show_sql" value="true" />

</properties>

</persistence-unit>

<persistence-unit name="playground-copy" transaction-type="JTA">

<provider>org.hibernate.ejb.HibernatePersistence</provider>

<jta-data-source>jdbc/playground</jta-data-source>

<mapping-file>META-INF/orm-playground-copy.xml</mapping-file>

<properties>

<property name="hibernate.dialect" value="be.dkv.hibernate.SQLServer2012Dialect" />

<property name="hibernate.hbm2ddl.auto" value="update" />

<property name="hibernate.show_sql" value="true" />

</properties>

</persistence-unit>

</persistence>

There are two persistence units, one with name playground, the other with name playground-copy. The latter has an ORM mapping file but that is a bit besides the point here. What is important is that both have the same <jta-data-source> specified.

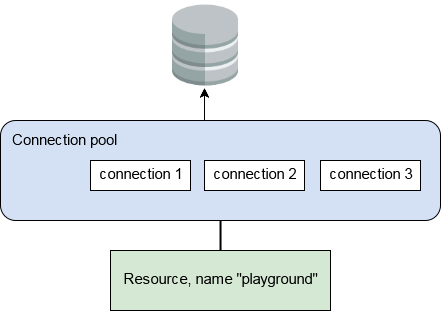

In the application server (GlassFish in this case), we'll have a JDBC connection pool, with a JDBC resource named playground that uses this pool.

![connection pool and resource]()

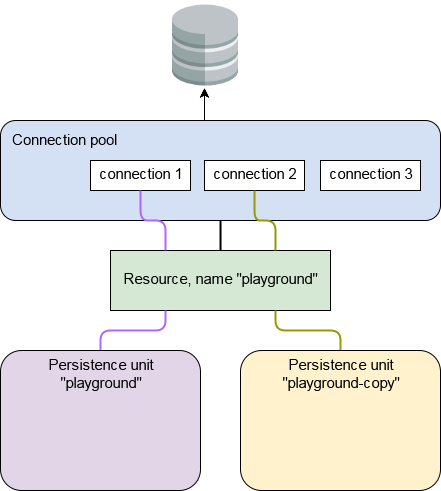

Now if two persistence contexts are injected into an EJB, and a method is called that is considered to be within a container-managed transaction, you'd expect things to look like this.

![persistence unit connections]()

Both persistence contexts utilize the same data source, but neither the transaction manager nor JPA layer should really care much about that. After all, they might have different data sources. Since the data source is backed by a connection pool anyway, you'd expect both units to get their own connection. XA would allow work to operate in a transactional way, because XA-enabled resources will implement 2-phase commit.

However, when attempting the above with the data source pointing to a connection pool with a non-XA implementation (and doing some actual persistence work), there was no exception and everything worked fine! XA support in the MSSQL server was even disabled and attempting to use an XA driver would result in an error until it was enabled, so it's not like I was accidentally using XA without knowing.

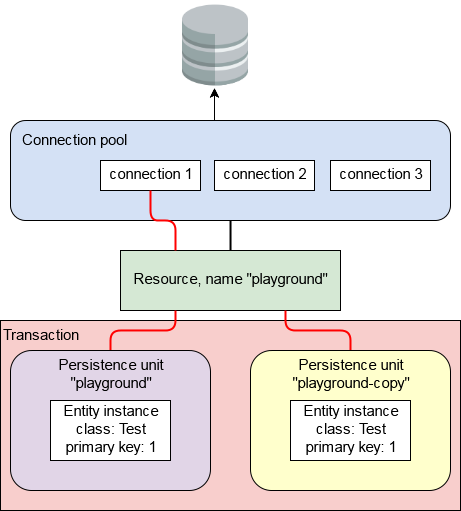

Going into the code with a debugger revealed that both persistence contexts, being different entity managers (as they should) did in fact use the same connection. Some further digging showed that the connection was not set as being in an XA transaction, and had the same transaction identifier on JDBC level. So the situation became this:

![shared connection]()

I can only assume that the JPA provider has an optimization to utilize the same connection if multiple units are created for the same transaction. So, why would this be okay? At the JDBC level, transactions are committed on a connection. As far as I know, the JDBC spec doesn't provide a method of having multiple transactions run on a single connection. That means that if the work for one persistence context is committed, the commit would also happen for the other one.

But that is actually why it works. The commit point for a distributed transaction should act as if all parts form a whole (assuming all voted "yes" in the voting phase). In this case, both persistence contexts are operating on the same connection, so they're implicitly a unit of work. Since the transaction is managed by the container, there's no immediate access to it anyway, meaning you can't move to commit one context and not the other. And with only a single connection to actually register with the transaction, it does not have to be XA, since it's not considered distributed from the transaction manager's point of view.

Note that this doesn't violate the locality of the persistence contexts. Fetching an entity from the database results in a separate object in both contexts. They can still operate independently from one another, just as they would with separate connections. In the above diagram, the fetched entities of the same type with the same primary key represent the same database row, but are separate objects managed by their respective entity managers.

To verify that this is indeed some optimization by the JPA provider, I created a second connection pool (to the same database) and a separate JDBC resource, set it for the second persistence unit and tested. This results in the expected exception:

Caused by: java.sql.SQLException: Error in allocating a connection.

Cause: java.lang.IllegalStateException: Local transaction already has 1 non-XA Resource: cannot add more resources.

If you create two JDBC resources, but point both to the same connection pool, then again it works just fine. This even worked when explicitly using class com.microsoft.sqlserver.jdbc.SQLServerConnectionPoolDataSource, confirming that it's probably an optimization on the JPA level rather than accidentally getting the same connection twice for the same data source (which would defeat the GlassFish pooling). When using an XA data source, it will indeed be an XA-enabled connection, but the JPA provider will still use the same one for both persistence contexts. Only when using separate pools will it in fact be two entirely separate XA-enabled connections and will you no longer get the above exception.

So, what's the catch? First of all, I haven't found anything describing (or mandating) this behaviour in the JPA or JTA specs. That means this is probably an implementation-specific optimization. Move to a different JPA provider, or even a different version, and it might no longer work.

Second, it's possible to get deadlocks. If you fetch the entity in the example above in both contexts, then change it in one and flush, it's fine. Fetch it in one context, call the flush method and then attempt to fetch it in the other, and you could have a deadlock. If you allow read-uncommitted transaction isolation, you would avoid this but what you'd see in one context would depend on when you fetched it with respects to a flush in the other. So manual flush calls could be tricky.

For reference, the GlassFish version used was 3.1.2.2. The JPA provider was Hibernate version 3.6.4.Final.

TL;DR

Yes, you can use two persistence contexts with the same non-XA resource in a JavaEE container-managed transaction, and ACID properties are preserved. However, this is thanks to what is likely a Hibernate optimization when multiple EntityManagers are created for the same transaction with the same data source. Since it doesn't seem mandated by the JPA or JTA specs you can probably not rely on this behaviour across JPA implementations, versions or application servers. So test and don't expect full portability.