To determine whether the user clicked on any of my 3D objects, I’m trying to turn the screen coordinates of the click into a ray that I can test against my triangles. To do so I’m using the XMVector3Unproject function provided by DirectX, and I’m implementing everything in C++/CX.

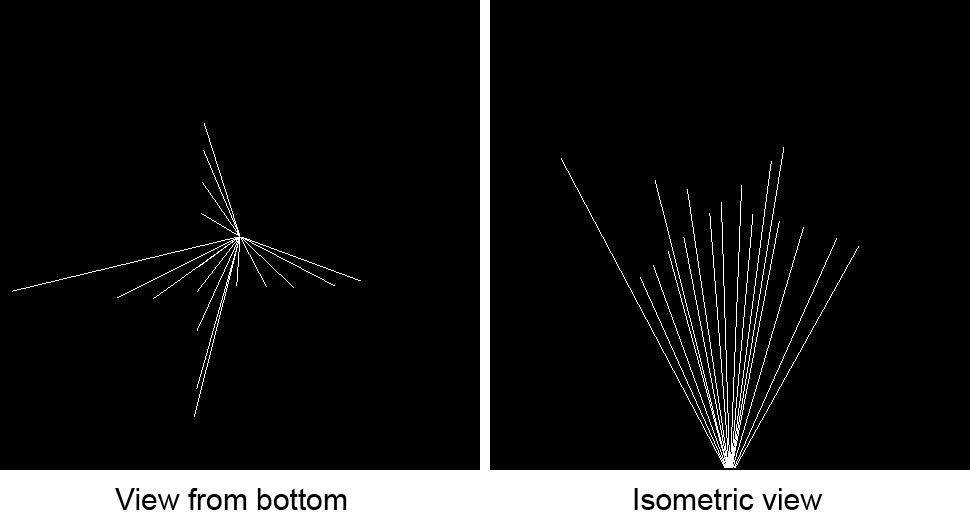

The problem is that the vector resulting from unprojecting the screen coordinates is not at all what I expect. The image below illustrates this:

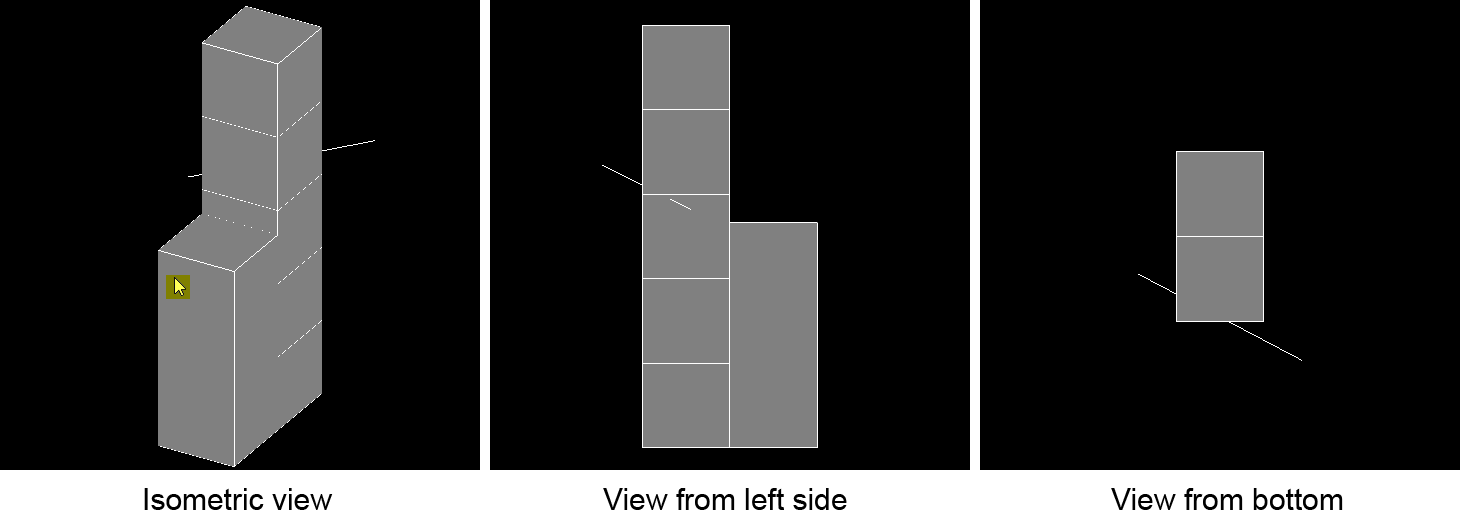

The cursor position at the time of the click (highlighted in yellow) is visible in the isometric view on the left. As soon as I click, the vector resulting from the unprojection appears behind the model; it is shown in the images as the white line penetrating the model. So instead of originating at the cursor location and pointing into the screen in the isometric view, it appears at a completely different position.

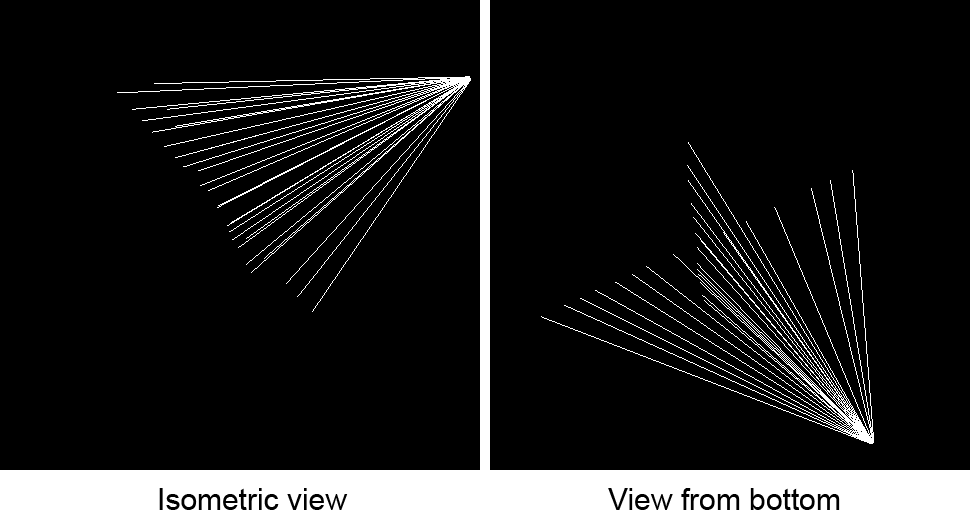

When I click repeatedly while moving the mouse horizontally in the isometric view, and then do the same while moving it vertically, the pattern below appears. All lines in the two images represent vectors resulting from clicks. The model has been removed for better visibility.

As the image above shows, all vectors seem to originate from the same location. If I change the view and repeat the process, the same pattern appears but with a different origin.

Here are the code snippets I use. First, I receive the cursor position with the code below and pass it to my “SelectObject” method together with the width and height of the drawing area:

    void Demo::OnPointerPressed(Object^ sender, PointerEventArgs^ e)
    {
        Point currentPosition = e->CurrentPoint->Position;

        if (m_model->SelectObject(currentPosition.X, currentPosition.Y, m_renderTargetWidth, m_renderTargetHeight))
        {
            m_RefreshImage = true;
        }
    }

The “SelectObject” method looks as follows:

    bool Model::SelectObject(float screenX, float screenY, float screenWidth, float screenHeight)
    {
        XMMATRIX projectionMatrix = XMLoadFloat4x4(&m_modelViewProjectionConstantBufferData->projection);
        XMMATRIX viewMatrix = XMLoadFloat4x4(&m_modelViewProjectionConstantBufferData->view);
        XMMATRIX modelMatrix = XMLoadFloat4x4(&m_modelViewProjectionConstantBufferData->model);

        // Second point on the ray, used only to derive the ray direction.
        XMVECTOR v = XMVector3Unproject(XMVectorSet(screenX, screenY, 5.0f, 0.0f),
                                        0.0f,
                                        0.0f,
                                        screenWidth,
                                        screenHeight,
                                        0.0f,
                                        1.0f,
                                        projectionMatrix,
                                        viewMatrix,
                                        modelMatrix);

        // Ray origin: the clicked pixel at depth 0.
        XMVECTOR rayOrigin = XMVector3Unproject(XMVectorSet(screenX, screenY, 0.0f, 0.0f),
                                                0.0f,
                                                0.0f,
                                                screenWidth,
                                                screenHeight,
                                                0.0f,
                                                1.0f,
                                                projectionMatrix,
                                                viewMatrix,
                                                modelMatrix);

        // Code to retrieve v0, v1 and v2 is omitted

        if (Intersects(rayOrigin, XMVector3Normalize(v - rayOrigin), v0, v1, v2, depth))
        {
            return true;
        }

        // No triangle was hit.
        return false;
    }

Eventually the calculated vector is passed to the Intersects function of the DirectX::TriangleTests namespace to detect whether a triangle got hit. I’ve omitted that code in the above snippet because it is not relevant to this problem.
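
For reference, this is the mapping I would expect the unprojection to perform in my orthographic setup. It is only a minimal sketch in plain C++ (no DirectXMath), assuming a viewport origin of (0, 0), a depth range of 0 to 1, and identity view and world matrices; `Vec3`, `ScreenToNdc` and `NdcToViewOrthoRH` are hypothetical helper names, not part of my project or of DirectX:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal vector type for this sketch.
struct Vec3 { float x, y, z; };

// Map a pixel position plus a depth in [0, 1] to normalized device coordinates.
// Assumes the viewport starts at (0, 0) and uses the depth range [0, 1].
Vec3 ScreenToNdc(float sx, float sy, float depth, float vpWidth, float vpHeight)
{
    return { 2.0f * sx / vpWidth - 1.0f,    // x: [0, width]  -> [-1, 1]
             1.0f - 2.0f * sy / vpHeight,   // y: [0, height] -> [1, -1] (screen y points down)
             depth };                       // z: already in [0, 1]
}

// Invert a right-handed orthographic projection with the given view volume.
// NDC depth 0 lands on the near plane (z = -nearZ), depth 1 on the far plane (z = -farZ).
Vec3 NdcToViewOrthoRH(Vec3 ndc, float viewWidth, float viewHeight, float nearZ, float farZ)
{
    return { ndc.x * viewWidth * 0.5f,
             ndc.y * viewHeight * 0.5f,
             ndc.z * (nearZ - farZ) - nearZ };
}
```

With this mapping, two different cursor positions give two different ray origins on the near plane and the same direction (straight along the negative z-axis in view space). That is what I expect to see, and not what I get.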

To render these images I use an orthographic projection matrix and a camera that can be rotated around its local x- and y-axes; the camera generates the view matrix. The world matrix always stays the same, i.e. it is simply the identity matrix.

The view matrix is calculated as follows (based on the example in Frank Luna’s book 3D Game Programming):

    void Camera::SetViewMatrix()
    {
        XMFLOAT3 cameraPosition;
        XMFLOAT3 cameraXAxis;
        XMFLOAT3 cameraYAxis;
        XMFLOAT3 cameraZAxis;
        XMFLOAT4X4 viewMatrix;

        // Keep camera's axes orthogonal to each other and of unit length.
        m_cameraZAxis = XMVector3Normalize(m_cameraZAxis);
        m_cameraYAxis = XMVector3Normalize(XMVector3Cross(m_cameraZAxis, m_cameraXAxis));

        // m_cameraYAxis and m_cameraZAxis are already normalized, so there is no need
        // to normalize the below cross product of the two.
        m_cameraXAxis = XMVector3Cross(m_cameraYAxis, m_cameraZAxis);

        // Fill in the view matrix entries.
        float x = -XMVectorGetX(XMVector3Dot(m_cameraPosition, m_cameraXAxis));
        float y = -XMVectorGetX(XMVector3Dot(m_cameraPosition, m_cameraYAxis));
        float z = -XMVectorGetX(XMVector3Dot(m_cameraPosition, m_cameraZAxis));

        XMStoreFloat3(&cameraPosition, m_cameraPosition);
        XMStoreFloat3(&cameraXAxis, m_cameraXAxis);
        XMStoreFloat3(&cameraYAxis, m_cameraYAxis);
        XMStoreFloat3(&cameraZAxis, m_cameraZAxis);

        viewMatrix(0, 0) = cameraXAxis.x;
        viewMatrix(1, 0) = cameraXAxis.y;
        viewMatrix(2, 0) = cameraXAxis.z;
        viewMatrix(3, 0) = x;

        viewMatrix(0, 1) = cameraYAxis.x;
        viewMatrix(1, 1) = cameraYAxis.y;
        viewMatrix(2, 1) = cameraYAxis.z;
        viewMatrix(3, 1) = y;

        viewMatrix(0, 2) = cameraZAxis.x;
        viewMatrix(1, 2) = cameraZAxis.y;
        viewMatrix(2, 2) = cameraZAxis.z;
        viewMatrix(3, 2) = z;

        viewMatrix(0, 3) = 0.0f;
        viewMatrix(1, 3) = 0.0f;
        viewMatrix(2, 3) = 0.0f;
        viewMatrix(3, 3) = 1.0f;

        m_modelViewProjectionConstantBufferData->view = viewMatrix;
    }
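
To make the layout of this matrix easier to check, here is a minimal standalone sketch (plain C++, no DirectXMath) of the transform it encodes when a point is multiplied as a row vector from the left: the axes sit in the columns and the negated dot products in the fourth row. `Vec3`, `Dot` and `WorldToView` are hypothetical names used only for illustration, and the sketch assumes the three axes are already orthonormal:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal vector type for this sketch.
struct Vec3 { float x, y, z; };

float Dot(Vec3 a, Vec3 b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Equivalent of [p.x, p.y, p.z, 1] * viewMatrix for the matrix built in SetViewMatrix:
// each output component is dot(p, axis) - dot(cameraPosition, axis).
// Assumes xAxis, yAxis and zAxis are orthonormal.
Vec3 WorldToView(Vec3 p, Vec3 xAxis, Vec3 yAxis, Vec3 zAxis, Vec3 cameraPosition)
{
    return { Dot(p, xAxis) - Dot(cameraPosition, xAxis),
             Dot(p, yAxis) - Dot(cameraPosition, yAxis),
             Dot(p, zAxis) - Dot(cameraPosition, zAxis) };
}
```

Under these assumptions the camera position itself maps to the view-space origin, and moving the camera only shifts every point by the same offset, so I would not expect a change of position alone to distort anything.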

It is influenced by two methods which rotate the camera around its local x- and y-axes:

    void Camera::ChangeCameraPitch(float angle)
    {
        XMMATRIX rotationMatrix = XMMatrixRotationAxis(m_cameraXAxis, angle);

        m_cameraYAxis = XMVector3TransformNormal(m_cameraYAxis, rotationMatrix);
        m_cameraZAxis = XMVector3TransformNormal(m_cameraZAxis, rotationMatrix);
    }

    void Camera::ChangeCameraYaw(float angle)
    {
        XMMATRIX rotationMatrix = XMMatrixRotationAxis(m_cameraYAxis, angle);

        m_cameraXAxis = XMVector3TransformNormal(m_cameraXAxis, rotationMatrix);
        m_cameraZAxis = XMVector3TransformNormal(m_cameraZAxis, rotationMatrix);
    }

The world / model matrix and the projection matrix are calculated as follows:

    void Model::SetProjectionMatrix(float width, float height, float nearZ, float farZ)
    {
        XMMATRIX orthographicProjectionMatrix = XMMatrixOrthographicRH(width, height, nearZ, farZ);

        XMFLOAT4X4 orientation = XMFLOAT4X4
        (
            1.0f, 0.0f, 0.0f, 0.0f,
            0.0f, 1.0f, 0.0f, 0.0f,
            0.0f, 0.0f, 1.0f, 0.0f,
            0.0f, 0.0f, 0.0f, 1.0f
        );

        XMMATRIX orientationMatrix = XMLoadFloat4x4(&orientation);

        XMStoreFloat4x4(&m_modelViewProjectionConstantBufferData->projection, XMMatrixTranspose(orthographicProjectionMatrix * orientationMatrix));
    }

    void Model::SetModelMatrix()
    {
        XMFLOAT4X4 orientation = XMFLOAT4X4
        (
            1.0f, 0.0f, 0.0f, 0.0f,
            0.0f, 1.0f, 0.0f, 0.0f,
            0.0f, 0.0f, 1.0f, 0.0f,
            0.0f, 0.0f, 0.0f, 1.0f
        );

        XMMATRIX orientationMatrix = XMLoadFloat4x4(&orientation);

        XMStoreFloat4x4(&m_modelViewProjectionConstantBufferData->model, XMMatrixTranspose(orientationMatrix));
    }
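
Both matrices are transposed before they are stored in the constant buffer. As a side note, here is a minimal standalone sketch (plain C++, no DirectXMath) of what the transpose does to the w component when a point is multiplied as a row vector. `Mat4`, `Vec4`, `OrthoRH`, `Transpose` and `Mul` are hypothetical names, and the element layout in `OrthoRH` is my understanding of what XMMatrixOrthographicRH produces, so treat it as an assumption:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Assumed layout of a right-handed orthographic projection (row-vector convention).
Mat4 OrthoRH(float width, float height, float nearZ, float farZ)
{
    Mat4 m{};                               // all elements start at zero
    m[0][0] = 2.0f / width;
    m[1][1] = 2.0f / height;
    m[2][2] = 1.0f / (nearZ - farZ);
    m[3][2] = nearZ / (nearZ - farZ);       // depth offset lives in the fourth row
    m[3][3] = 1.0f;
    return m;
}

Mat4 Transpose(const Mat4& m)
{
    Mat4 t{};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            t[c][r] = m[r][c];
    return t;
}

// Row vector times matrix: out[c] = sum over r of v[r] * m[r][c].
Vec4 Mul(const Vec4& v, const Mat4& m)
{
    Vec4 out{};
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            out[c] += v[r] * m[r][c];
    return out;
}
```

With the untransposed matrix, w stays 1 for every point, while with the transposed one the depth offset moves into the fourth column and w becomes dependent on z. I have not verified whether this plays a role in my problem.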

Frankly speaking, I do not yet understand the problem that I’m facing. I’d be grateful if anyone with deeper insight could give me some hints as to where I need to apply changes so that the vector calculated from the unprojection starts at the cursor position and points into the screen.

Edit 1:

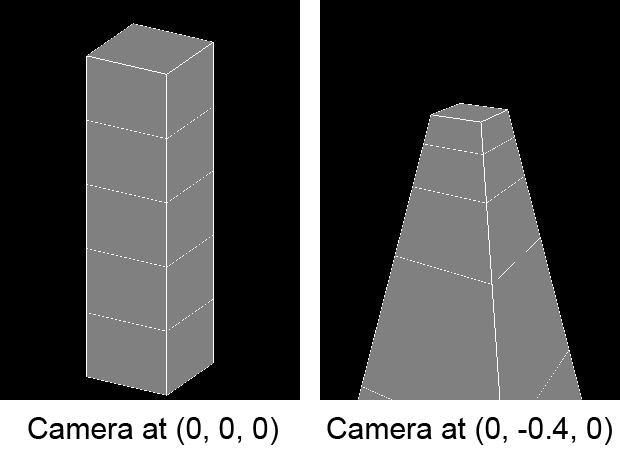

I assume it has to do with the fact that my camera is located at (0, 0, 0) in world coordinates. The camera rotates around its local x- and y-axes. From what I understand, the view matrix created by the camera defines the plane onto which the image is projected. If that is the case, it would explain why the ray is at a somewhat "unexpected" location.

My assumption is that I need to move the camera out of the center so that it is located outside of the object. However, if I simply modify the member variable m_cameraPosition of the camera, my model gets totally distorted.

Anyone out there able and willing to help?