After deploying a new version of my application in Docker,

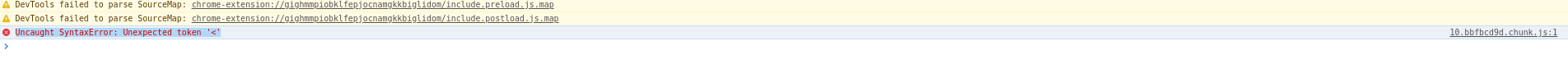

I see the following error in my console, which breaks my application:

Uncaught SyntaxError: Unexpected token '<'

In this screenshot, the missing source file is `10.bbfbcd9d.chunk.js`. Its content looks like this:

(this.webpackJsonp=this.webpackJsonp||[]).push([[10],{1062:function(e,t,n){"use strict";var r=n(182);n.d(t,"a",(function(){return r.a}))},1063:function(e,t,n){var ...{source:Z[De],resizeMode:"cover",style:[Y.fixed,{zIndex:-1}]})))}))}}]);

//# sourceMappingURL=10.859374a0.chunk.js.map

This error happens because:

1. On every release, we build a new Docker image that only includes chunks from the latest version.
2. Some clients are running an outdated version, and the server won't have a resolution for an old chunk because of (1).

Chunks are `.js` files that are produced by `webpack`, see code splitting for more information.
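The error message itself is consistent with the browser receiving an HTML document where it expected JavaScript, since the server answers the request for the missing chunk with the `index.html` fallback described further down. Here is a minimal sketch that reproduces the same kind of parse error in Node.js. The names `htmlBody` and `parseAsScript` are made up for illustration, and the HTML string is only a stand-in for the real `index.html`:

```javascript
// Stand-in for the index.html body that the server returns for a missing chunk (assumption).
const htmlBody = '<!DOCTYPE html><html><body><div id="root"></div></body></html>';

// Tries to parse `source` as JavaScript without executing it.
// Returns the thrown error, or null if the source parses fine.
function parseAsScript(source) {
  try {
    new Function(source); // compiles the body only, the function is never called
    return null;
  } catch (error) {
    return error;
  }
}

const parseError = parseAsScript(htmlBody);
console.log(parseError.name, parseError.message);
```

The very first character of an HTML document is `<`, which cannot start a JavaScript statement, so the parser gives up immediately.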

Reloading the application updates it to the latest version, but the app still breaks for every user who is on an outdated version.

One fix I tried was to force a refresh of the application: when a request for a `.js` file ended up in the wildcard route (meaning the requested chunk was missing on the server), I sent back a reload instruction.

The wildcard route serves the `index.html` of the web application, which delegates routing to the client-side router when a user refreshes their page.

    // Handles any requests that don't match the ones above
    app.get('*', (req, res) => {
      // prevent old version to download a missing old chunk and force application reload
      if (req.url.slice(-3) === '.js') {
        return res.send(`window.location.reload(true)`);
      }
      return res.sendFile(join(__dirname, '../web-build/index.html'));
    });

This turned out to be a bad fix, especially on Google Chrome for Android, where I have seen my app refresh in an infinite loop. (And yes, it is also an ugly fix!)
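Any reload-based approach probably needs some protection against this kind of loop. One plausible cause (an assumption, not something verified here) is that the browser reloads a cached, outdated `index.html` and then requests the same missing chunk again. A minimal sketch of a guard that allows at most one automatic reload per time window follows. The key name, the window length and the function name `shouldReload` are all hypothetical:

```javascript
// Hypothetical storage key and time window, chosen only for illustration.
const RELOAD_KEY = 'chunk-reload-at';
const RELOAD_WINDOW_MS = 60 * 1000;

// Returns true if an automatic reload is allowed, and records the attempt.
// `storage` is anything with getItem/setItem, e.g. window.sessionStorage.
function shouldReload(storage, now = Date.now()) {
  const last = Number(storage.getItem(RELOAD_KEY)); // Number(null) is 0
  if (last && now - last < RELOAD_WINDOW_MS) {
    return false; // a reload already happened recently, another one would loop
  }
  storage.setItem(RELOAD_KEY, String(now));
  return true;
}

// Possible usage in the browser:
// if (shouldReload(window.sessionStorage)) {
//   window.location.reload();
// }
```

With a guard like this, a client that still cannot load its chunks after one reload stays on a broken page instead of refreshing forever, so it would still need a visible fallback such as an error message.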

Since it's not a reliable solution for my end users, I am looking for another way to reload the application if the user's client is outdated.

My web application is built using webpack, exactly as if it were a create-react-app application; the distributed build directory contains many `.js` chunk files.

These are some possible fixes I was offered on the webpack issue tracker, some of them by the webpack creator himself:

- Don't remove old builds. <= I am building a Docker image so this is a bit challenging
- catch `import()` errors and reload. You can also do it globally by patching `__webpack_load_chunk__` somewhere. <= I don't get that patch or where to use `import()`, I am not myself producing those chunks and it's just a production feature
- let the server send `window.location.reload(true)` for not existing js files, but this is a really weird hack. <= it makes my application reload in loop on chrome android
- Do not send HTML for `.js` requests, even if they don't exist, this only leads to weird errors <= that is not fixing my problem
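To make the second suggestion more concrete: webpack creates a separate chunk wherever the source code calls `import()`, so that call is also the place where a failed chunk download can be caught. The sketch below assumes nothing about the real code base. `withReloadOnFailure` is a hypothetical helper, not part of webpack or React, and the reload action is passed in by the caller:

```javascript
// Hypothetical helper: wraps a function that performs a dynamic import.
// If loading the chunk fails, `reload` is called and the error is rethrown
// so that callers can still see the failure.
function withReloadOnFailure(loadChunk, reload) {
  return () =>
    loadChunk().catch((error) => {
      reload(error); // e.g. () => window.location.reload()
      throw error;
    });
}

// Possible usage with React.lazy, assuming a React app and a made-up module path:
// const Settings = React.lazy(
//   withReloadOnFailure(() => import('./Settings'), () => window.location.reload())
// );
```

On its own this has the same looping risk as the server-side reload, so the `reload` callback would probably need to limit how often it actually refreshes the page.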


How can I implement a solution that would prevent this error?