I also ran into this issue while inserting, updating, and deleting ~1,500 to ~3,000 records of ~10 KB each on a single partition in DynamoDB, which consumed ~100,000 WCU on that one partition.

This resulted in the exception mentioned in the question.

Let's break the exception message down into parts:

> Throughput exceeds the current capacity of your table or index. DynamoDB is automatically scaling your table or index so please try again shortly

This means the current RCU/WCU capacity has been exceeded (this applies to both on-demand and provisioned mode).

> If exceptions persist, check if you have a hot key

That is, the partition being written to has become hot because its WCU/RCU capacity was exceeded.

See this for how RCU/WCU are calculated in DynamoDB: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/ProvisionedThroughput.html

For partition key design, see: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/bp-partition-key-design.html
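To get a feel for the numbers, here is a rough sketch of the WCU arithmetic described in the first link. It assumes standard (non-transactional) writes, one write per record, and that "~10 KB" means exactly 10 KiB. The function names and the 1,000 WCU per second per-partition figure (the limit documented by AWS at the time of writing) are my own illustration, not part of any SDK.

```python
import math

# Assumption: documented per-partition write limit at the time of writing.
PARTITION_WCU_PER_SECOND = 1000


def write_capacity_units(item_size_bytes: int, transactional: bool = False) -> int:
    """WCU consumed by a single write of an item of the given size."""
    # A standard write costs 1 WCU per 1 KB, rounded up to the next whole KB.
    units = max(1, math.ceil(item_size_bytes / 1024))
    # Transactional writes cost twice as much.
    return units * 2 if transactional else units


def total_wcu(record_count: int, item_size_bytes: int) -> int:
    """Total WCU for writing every record once."""
    return record_count * write_capacity_units(item_size_bytes)


def min_seconds_on_one_partition(wcu: int) -> float:
    """Lower bound on how long the writes take if they all hit one partition."""
    return wcu / PARTITION_WCU_PER_SECOND


wcu = total_wcu(3000, 10 * 1024)
print(wcu)                                # 30000
print(min_seconds_on_one_partition(wcu))  # 30.0
```

So even a single pass over 3,000 such records cannot fit through one partition in a couple of seconds. Updates, deletes, and any secondary indexes add to the total.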

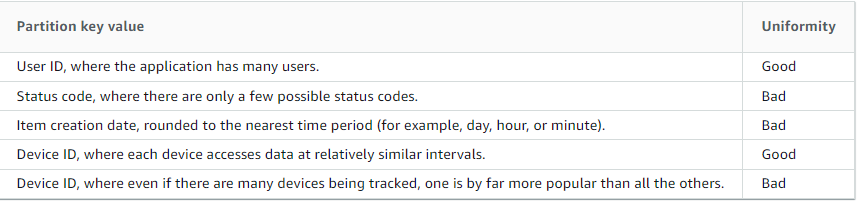

Here DynamoDB suggests redesigning your partition keys based on the example below. My case falls under the second case: I had only a single partition and was performing a high volume of CRUD operations on it in a short time.

![enter image description here]()

Now, to support this use case:

> DynamoDB strongly recommends that you use an exponential backoff algorithm. If you retry the batch operation immediately, the underlying read or write requests can still fail due to throttling on the individual tables. If you delay the batch operation using exponential backoff, the individual requests in the batch are much more likely to succeed.

In other words, DynamoDB suggests adding a delay between batch operations. Keep in mind that the AWS SDK has already retried each request using its default exponential backoff implementation, so simply retrying again immediately might not help here.
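For reference, exponential backoff roughly looks like the sketch below. This is not the SDK's actual implementation. `ThrottledError`, `call_with_backoff`, and the default values are hypothetical names and numbers for illustration, and the random jitter is a common addition rather than something the quote above requires.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for the SDK's throttling exception."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.1,
                      max_delay=5.0, sleep=time.sleep):
    """Call operation(), retrying on throttling with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            # Give up after the last attempt and let the caller handle it.
            if attempt == max_attempts - 1:
                raise
            # The upper bound doubles on every attempt, up to max_delay.
            # A random delay below that bound spreads out concurrent retries.
            upper = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, upper))
```

The `sleep` parameter is only there so the delay can be swapped out in tests. In real code you would catch whatever throttling exception your SDK raises instead of `ThrottledError`.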

So I implemented these two steps to resolve the issue:

1. Divided all my operations into batches of 200 items using DocumentBatchWrite (this alone works fine for smaller sets, ~500 records of ~10 KB each).

2. For thousands of records (of the same size as above), added a delay of 1-2 seconds, depending on the batch size, between each batch operation.

After this, I no longer get any throttling exceptions from DynamoDB.

Note: you need to adjust the batch size and the delay between batch operations based on the record count.
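The two steps can be sketched roughly like this. My actual code used DocumentBatchWrite from the .NET SDK. The Python below only illustrates the pattern, and `write_in_batches`, `chunked`, and the `write_batch` callback are hypothetical names. `write_batch` stands for whatever performs the real batch write in your SDK.

```python
import time


def chunked(items, size):
    """Yield consecutive slices of items, each with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def write_in_batches(items, write_batch, batch_size=200,
                     delay_seconds=1.0, sleep=time.sleep):
    """Write items batch by batch, pausing between consecutive batches.

    Returns the number of batches written.
    """
    batches_written = 0
    for batch in chunked(items, batch_size):
        # Pause before every batch except the first, so the hot partition
        # gets time to recover between bursts.
        if batches_written > 0:
            sleep(delay_seconds)
        write_batch(batch)
        batches_written += 1
    return batches_written


# Usage with a dummy writer that only records the batch sizes.
sizes = []
count = write_in_batches(list(range(450)), lambda b: sizes.append(len(b)),
                         delay_seconds=0)
print(count, sizes)  # 3 [200, 200, 50]
```

The defaults of 200 items and 1 second are just the values that worked for me, so treat them as a starting point and tune them for your record count and item size.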

See this for suggested resolutions to throttling in DynamoDB: https://aws.amazon.com/premiumsupport/knowledge-center/dynamodb-table-throttled/