Your English is fine.

TL;DR - 5-30s depending on user experience.

I suggest long poll timeouts be 100x the server's "request" time. This makes a strong argument for 5-20s timeouts, depending on your urgency to detect dropped connections and disappeared clients.

Here's why:

- Most examples use 20-30 seconds.

- Many routers, NAT devices, and proxies will silently drop connections that stay idle too long.

- Clients may "disappear" for reasons like network errors or going into low power state.

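- Servers often cannot detect dropped connections promptly. When a client vanishes without closing the connection, the server may not notice until it tries to write or its own timeout fires. This makes 5-minute timeouts a bad practice, as they tie up sockets and resources. It would also be an easy DoS attack on your server.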

So, < 30 seconds would be "normal". How should you choose?

What is the cost-benefit of making the long-poll connections?

Let's say a regular request takes 100ms of server "request" time to open/close the connection, run a database query, and compute/send a response.

A 10 second timeout would be 10,000 ms, and your request time is 1% of the long-polling time. 100 / 10,000 = .01 = 1%

A 20 second timeout would be 100/20000 = 0.5%

A 30 second timeout = 0.33%, etc.

Beyond 30 seconds, the request overhead is already below 0.33%, so a longer timeout can never buy more than a 0.33% performance improvement. There is little reason to go above 30s.
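The arithmetic above can be reproduced with a short script. This is only a sketch: `request_overhead` is a name made up for illustration, and the 100 ms request time is the same assumed figure used in the example above.

```python
def request_overhead(request_ms: float, timeout_s: float) -> float:
    """Fraction of one long-poll cycle spent on the fixed per-request work."""
    return request_ms / (timeout_s * 1000)


if __name__ == "__main__":
    # Assumed request time of 100 ms, as in the worked example.
    for timeout_s in (5, 10, 20, 30, 60):
        overhead = request_overhead(100, timeout_s)
        print(f"{timeout_s:>2}s timeout -> {overhead:.2%} overhead")
```

The overhead halves each time the timeout doubles, so the absolute gain from each extra second shrinks quickly.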

Conclusion

I suggest long poll timeouts be 100x the server's "request" time. This makes a strong argument for 5-20s timeouts, depending on your urgency to detect dropped connections and disappeared clients.

Best practice: Configure your client and server to abandon requests at the same timeout, but give the client a little extra time to cover network latency so the server's "no data" response can still arrive. E.g. server = 100x request time, client = 102x request time.
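As a rough sketch of that rule, the snippet below derives both timeouts from a measured request time and shows a server-side wait that gives up after the server timeout. The names `long_poll_timeouts` and `wait_for_update` are hypothetical, and using an `asyncio.Event` to signal "new data" is an assumption about how your server is built, not a requirement.

```python
import asyncio


def long_poll_timeouts(request_ms: float, multiplier: int = 100,
                       client_margin: int = 2) -> tuple:
    """Return (server_timeout_s, client_timeout_s) from the request time."""
    server_s = request_ms * multiplier / 1000
    client_s = request_ms * (multiplier + client_margin) / 1000
    return server_s, client_s


async def wait_for_update(event: asyncio.Event, timeout_s: float) -> str:
    """Hold the request open until new data is signalled or the timeout hits."""
    try:
        await asyncio.wait_for(event.wait(), timeout=timeout_s)
        return "update"
    except asyncio.TimeoutError:
        # Nothing happened: answer with "no data" so the socket is released
        # and the client can reconnect.
        return "timeout"


if __name__ == "__main__":
    server_s, client_s = long_poll_timeouts(100)  # assumed 100 ms request time
    print(f"server timeout: {server_s}s, client timeout: {client_s}s")
```

With a 100 ms request time this gives a 10 s server timeout and a 10.2 s client timeout, so the client normally receives the server's timeout response before abandoning the request itself.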

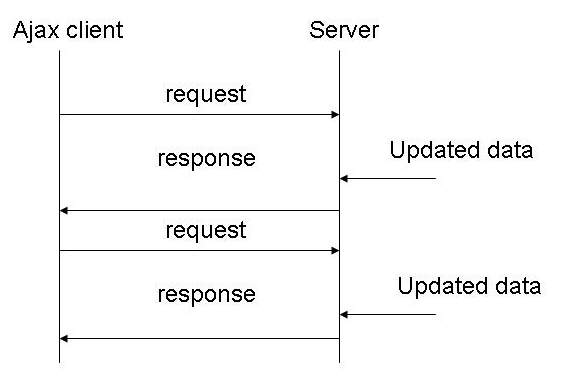

Best practice: Long polling is superior to websockets for many/most use cases because of its lower complexity, more scalable architecture, and HTTP's well-understood security attack surface.