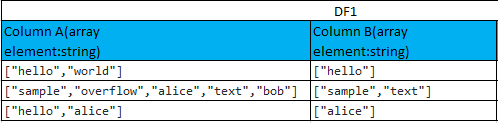

You can use a user-defined function. My example dataframe differs a bit from yours, but the code should work fine:

    import pandas as pd
    from pyspark.sql.functions import udf
    from pyspark.sql.types import ArrayType, StringType

    # example df: two array-of-strings columns, A and B
    df = sqlContext.createDataFrame(pd.DataFrame(
        data=[[["hello", "world"], ["world"]],
              [["sample", "overflow", "text"], ["sample", "text"]]],
        columns=["A", "B"]))

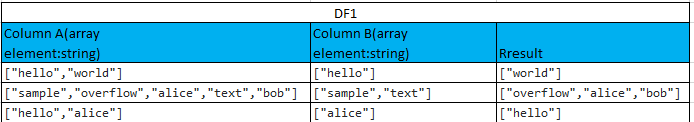

    # define udf: elements of A that are not in B
    differencer = udf(lambda x, y: list(set(x) - set(y)), ArrayType(StringType()))
    df = df.withColumn('difference', differencer('A', 'B'))

EDIT:

The version above collapses duplicates, because a set keeps only unique elements (and it does not preserve order either). If duplicates in A must be kept, amend the udf as follows:

    differencer = udf(lambda x, y: [elt for elt in x if elt not in y], ArrayType(StringType()))
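
To see how the two lambdas differ without starting Spark, here is a minimal plain-Python sketch. The function names `set_difference` and `list_difference` and the sample row with a repeated `"overflow"` are made up for illustration; only the two expressions inside them come from the udfs above.

```python
def set_difference(x, y):
    # Same expression as the first udf: unique elements only, order not guaranteed.
    return list(set(x) - set(y))


def list_difference(x, y):
    # Same expression as the amended udf: keeps duplicates and the order of x.
    return [elt for elt in x if elt not in y]


# Hypothetical row where column A contains a duplicate.
a = ["sample", "overflow", "overflow", "text"]
b = ["sample", "text"]

print(set_difference(a, b))   # the duplicate is collapsed to a single element
print(list_difference(a, b))  # both occurrences of "overflow" are kept
```

Either function can be wrapped with `udf(..., ArrayType(StringType()))` in the same way as the lambdas.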