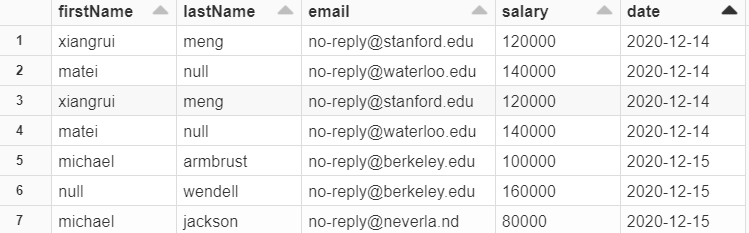

I have a DataFrame with a column, file_date. For a given run, the DataFrame contains data for only one unique file_date. For instance, in one run there might be about 100 records, all with a file_date of 2020_01_21.

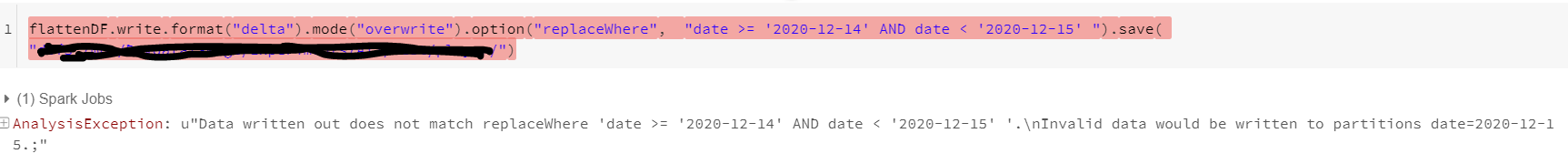

I am writing this data using the following:

    (df
        .repartition(1)
        .write
        .format("delta")
        .partitionBy("FILE_DATE")
        .mode("overwrite")
        .option("overwriteSchema", "true")
        .option("replaceWhere", "FILE_DATE=" + run_for_file_date)
        .save("/mnt/starsdetails/starsGrantedDetails/"))

My requirement is to create a folder/partition for every FILE_DATE, because there is a good chance that data for a specific file_date will be rerun, in which case that file_date's data has to be overwritten. With the code above, if I leave out the "replaceWhere" option, the write overwrites the data in the other partitions too. If I include it, the data for the specific partition seems to be overwritten correctly, but every time the write runs I get an error (quoted further down).

Please note that I have also set the following Spark config before the write:

spark.conf.set("spark.sql.sources.partitionOverwriteMode","dynamic")

But I am still getting the following error:

AnalysisException: "Data written out does not match replaceWhere 'FILE_DATE=2020-01-19'.\nInvalid data would be written to partitions FILE_DATE=2020-01-20.;"
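The error text says that rows with FILE_DATE=2020-01-20 are present while the predicate names 2020-01-19, so one thing that could be checked is whether the DataFrame for a run really holds only the date passed in run_for_file_date. Below is a minimal sketch of such a check. The helper names `build_replace_where` and `unexpected_partitions` are hypothetical, and quoting the date as a string literal in the predicate is an assumption about how the predicate is parsed, not a confirmed fix.

```python
from datetime import date


def build_replace_where(column: str, file_date: str) -> str:
    """Build a predicate string with the date quoted as a string literal.

    Hypothetical helper. Quoting the value is an assumption: unquoted,
    2020-01-19 could be read as an arithmetic expression.
    """
    # Raises ValueError if file_date is not an ISO date such as 2020-01-19.
    date.fromisoformat(file_date)
    return f"{column} = '{file_date}'"


def unexpected_partitions(distinct_values, file_date: str) -> list:
    """Return the partition values in the data that differ from file_date."""
    return sorted(str(v) for v in distinct_values if str(v) != file_date)


# With Spark available, the distinct values could be collected like this:
#   rows = df.select("FILE_DATE").distinct().collect()
#   distinct_values = [r["FILE_DATE"] for r in rows]
# Plain lists are used here so the sketch runs without a cluster.
distinct_values = ["2020-01-19", "2020-01-20"]
run_for_file_date = "2020-01-19"

predicate = build_replace_where("FILE_DATE", run_for_file_date)
extra = unexpected_partitions(distinct_values, run_for_file_date)
print(predicate)
print(extra)
```

If the second list is not empty, the DataFrame contains rows for dates other than the one named in the predicate, which is the situation the error message describes.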

Could you please help?