A friend of mine has been having trouble getting her site indexed by Google and asked me to have a look. This is not something I know much about, so I was hoping for some assistance.

Looking at her Search Console, the Google crawl report shows a soft 404 error on the index page. I have marked this as fixed a few times because the site looks fine to me, but it keeps coming back.

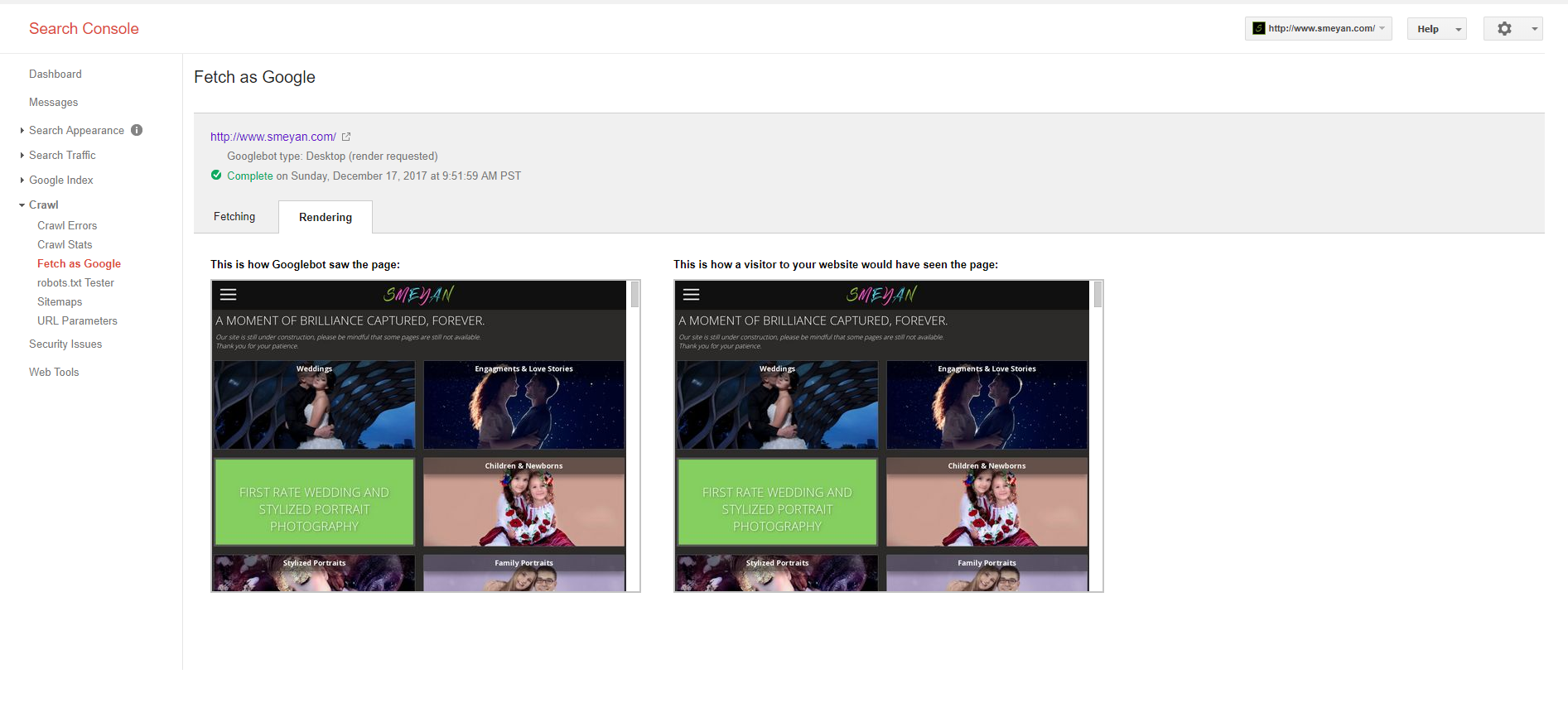

If I fetch the site as Google, it seems to work fine, although it shows the mobile version instead of the desktop one.

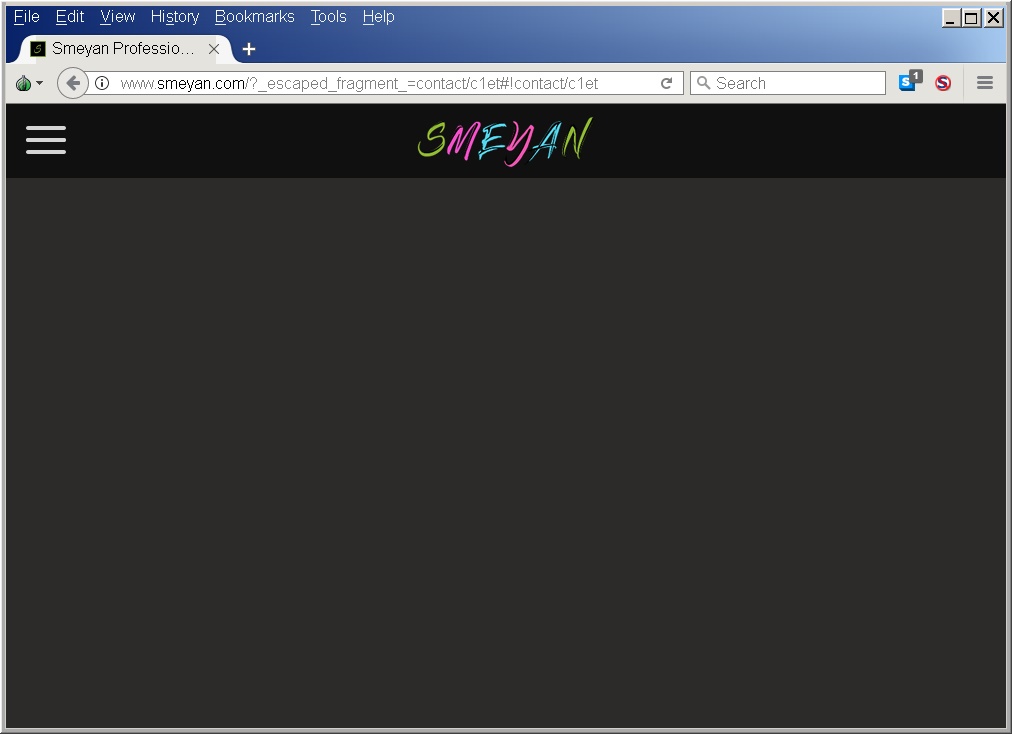

It also keeps reporting a recurring 404 for the page http://www.smeyan.com/new-page, which doesn't exist anywhere I can see, including the server files and the sitemaps.

Here is what I know about this site:

It used to be a Wix site and was moved to a HostGator shared server 2-3 months ago.

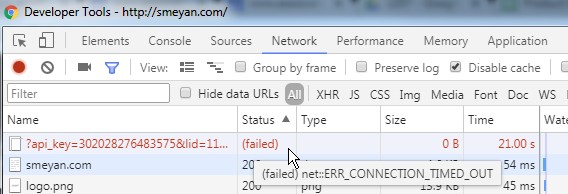

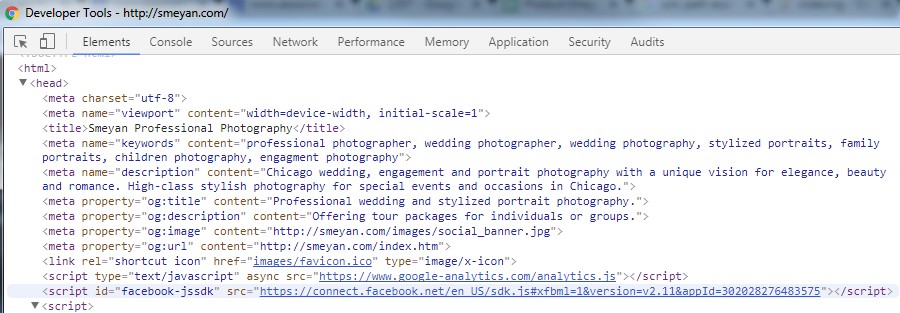

It uses JavaScript/jQuery `.load()` to fetch page content that lives outside the index.html template.
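To illustrate why this might matter: if the crawler evaluates the raw index.html before (or without) running the script, it may see almost no text, which is one possible cause of a soft 404. The sketch below is only a rough check under that assumption. The template, the `#content` selector, and `pages/home.html` are hypothetical stand-ins, not the actual site code.

```javascript
// Rough estimate of how much text is present in raw HTML
// before any JavaScript has run (scripts, styles and tags stripped).
function visibleTextLength(html) {
  const text = html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ") // drop inline scripts
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ")   // drop inline styles
    .replace(/<!--[\s\S]*?-->/g, " ")              // drop HTML comments
    .replace(/<[^>]+>/g, " ")                      // drop remaining tags
    .replace(/\s+/g, " ")                          // collapse whitespace
    .trim();
  return text.length;
}

// Hypothetical template in the spirit of the setup described above:
// the body is an empty container that jQuery fills in later.
const template = `<!DOCTYPE html>
<html><head><title>Home</title>
<script>$(function () { $("#content").load("pages/home.html"); });</script>
</head><body><div id="content"></div></body></html>`;

// Only the title text survives, so the page looks nearly empty.
console.log(visibleTextLength(template)); // 4
```

Running the same function on the real index.html source (view source, not the rendered DOM) would show how much content is there without JavaScript.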

It has two sitemaps, one for the URLs and one for both URLs and images: http://www.smeyan.com/sitemap_url.xml and http://www.smeyan.com/sitemap.xml
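For anyone who wants to double-check that /new-page really isn't listed, here is a minimal sketch that pulls the `<loc>` entries out of a sitemap and looks for that URL. The sample XML and the `/about` entry are made up for illustration; the real sitemap contents would need to be pasted in or downloaded separately.

```javascript
// Collect every page URL listed in <loc> elements of a sitemap.
// Image entries use <image:loc>, so they are intentionally not matched.
function extractSitemapUrls(xml) {
  const urls = [];
  const locPattern = /<loc>\s*([^<\s]+)\s*<\/loc>/gi;
  let match;
  while ((match = locPattern.exec(xml)) !== null) {
    urls.push(match[1]);
  }
  return urls;
}

// Hypothetical sitemap contents, not the site's actual file.
const sampleSitemap = `<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://www.smeyan.com/</loc></url>
  <url><loc>http://www.smeyan.com/about</loc></url>
</urlset>`;

const urls = extractSitemapUrls(sampleSitemap);
console.log(urls.includes("http://www.smeyan.com/new-page")); // false
```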

It has been about 2 months since the site was submitted for indexing, and Google has not indexed any of the content. Searching for site:www.smeyan.com shows some old results from the Wix server, although Search Console says it has 172 images indexed.

It has www. set as the preferred domain in Search Console.

Has anyone experienced this and can point me in a direction for a fix?