I'm using Google Cloud Composer (managed Airflow on Google Cloud Platform) with image version composer-0.5.3-airflow-1.9.0 and Python 2.7, and I'm facing a weird issue: after importing my DAGs, they are not clickable in the web UI (and the "Trigger DAG", "Graph View", ... buttons are missing), while everything works perfectly on a local Airflow.

Although they are unusable from the webserver on Composer, my DAGs still exist. I can list them using the CLI (list_dags), describe them (list_tasks) and even trigger them (trigger_dag).

Minimal example reproducing the issue

A minimal example that reproduces the issue is shown below. Using a hook (here, GoogleCloudStorageHook) is essential, since the bug on Composer happens when a hook is used. Initially, I was using a custom hook (in a custom plugin) and faced the same issue.

Basically, the example lists all entries in a GCS bucket (my-bucket) and generates a DAG for each entry beginning with my_dag.

    import datetime

    from airflow import DAG
    from airflow.contrib.hooks.gcs_hook import GoogleCloudStorageHook
    from airflow.operators.bash_operator import BashOperator

    google_conn_id = 'google_cloud_default'
    gcs_conn = GoogleCloudStorageHook(google_conn_id)

    bucket = 'my-bucket'
    prefix = 'my_dag'

    entries = gcs_conn.list(bucket, prefix=prefix)

    for entry in entries:
        dag_id = str(entry)

        dag = DAG(
            dag_id=dag_id,
            start_date=datetime.datetime.today(),
            schedule_interval='0 0 1 * *'
        )

        op = BashOperator(
            task_id='test',
            bash_command='exit 0',
            dag=dag
        )

        globals()[dag_id] = dag
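
For readers unfamiliar with the globals() line: Airflow collects the DAG objects it finds in the top-level namespace of each DAG file, so dynamically generated DAGs have to be stored there by name. The sketch below is a simplified model of that mechanism only. FakeDag, register_dags and collect_dags are hypothetical stand-ins (not Airflow APIs) so that it runs without Airflow installed.

```python
class FakeDag(object):
    """Hypothetical stand-in for airflow.DAG (assumption, not the real class)."""

    def __init__(self, dag_id):
        self.dag_id = dag_id


def register_dags(entries, namespace):
    """Create one FakeDag per entry and store it in the namespace under its id."""
    for entry in entries:
        dag_id = str(entry)
        namespace[dag_id] = FakeDag(dag_id)


def collect_dags(namespace):
    """Simplified model of a loader scanning a module namespace for DAG objects."""
    return sorted(
        value.dag_id
        for value in list(namespace.values())
        if isinstance(value, FakeDag)
    )


# A plain dict plays the role of the DAG file's globals().
namespace = {}
register_dags([u'my_dag_1', u'my_dag_2'], namespace)
found = collect_dags(namespace)
```

If the listing step returns nothing, or raises before the loop runs, nothing is registered and a loader of this kind finds no DAGs in the file.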

Results on Cloud Composer

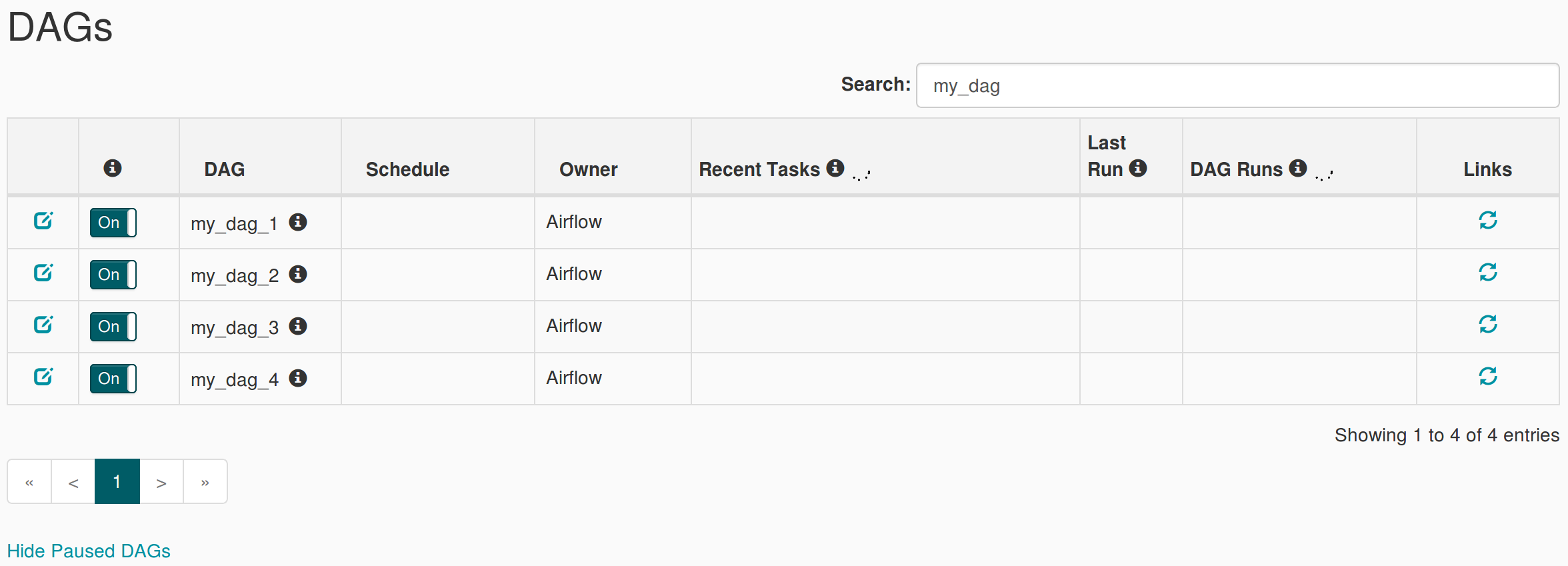

After importing this file to Composer, here's the result (I have 4 files beginning with my_dag in my-bucket) :

As I explained, the DAGs are not clickable and the "Recent Tasks" and "DAG Runs" columns load forever. The "info" mark next to each DAG name says: This DAG isn't available in the webserver DagBag object. It shows up in this list because the scheduler marked it as active in the metadata database.

Of course, refreshing does not help, and when accessing the DAG's Graph View by its direct URL (https://****.appspot.com/admin/airflow/graph?dag_id=my_dag_1), it shows an error: DAG "my_dag_1" seems to be missing.

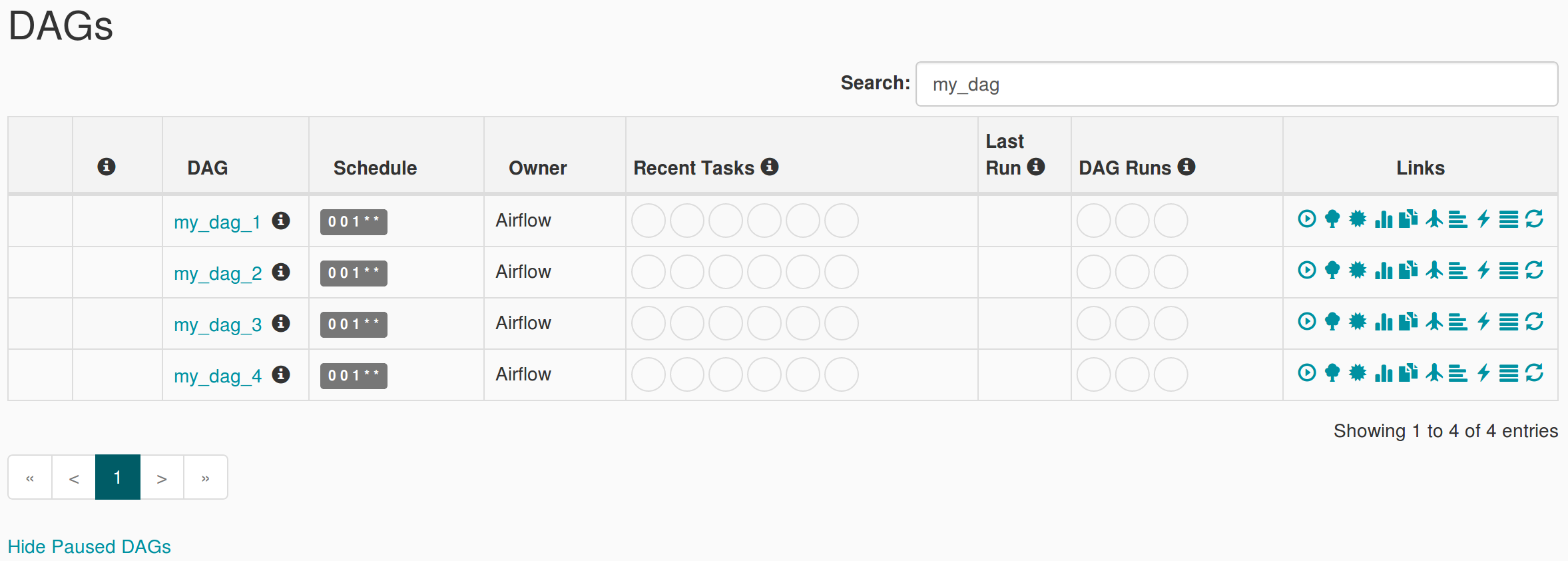

Results on local Airflow

When importing the script on a local Airflow, the webserver works fine :

Some tests

If I replace the line entries = gcs_conn.list(bucket, prefix=prefix) with hard-coded values like entries = [u'my_dag_1', u'my_dag_2', u'my_dag_3', u'my_dag_4'], then the DAGs are clickable in the Composer web UI (and all the buttons in the "Links" column appear). From other tests I ran on my initial problem, it seems that calling a method on a hook (not just initializing the hook) causes the issue. Of course, DAGs in Composer work normally in simple examples (no hook method calls involved).

I have no idea why this happens. I have also inspected the logs (by setting logging_level = DEBUG in airflow.cfg) but could not see anything wrong. I suspect a bug in the webserver, but I cannot get a meaningful stack trace. Webserver logs from Composer (hosted on App Engine) are not available, or at least I did not find a way to access them.

Has anyone experienced the same issue, or a similar one, with the Composer web UI? I think the problem comes from the use of hooks, but I may be wrong; it could just be a side effect. To be honest, I am lost after testing so many things. I'd be glad if someone could help me. Thanks!
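
One way to turn the hard-coded test into a repeatable check is to wrap the listing call so that a failure falls back to known entries and the traceback is kept. This is only a sketch under an unconfirmed assumption: that the hook call raises an exception when the file is parsed by the Composer webserver. list_entries and the two fake listing functions are hypothetical names introduced for illustration; the fakes stand in for gcs_conn.list so the example runs without Airflow.

```python
import traceback


def list_entries(list_fn, fallback=()):
    """Return (entries, error_text).

    Calls list_fn() and returns its result as a list, with error_text None.
    If list_fn raises, returns the fallback entries and the formatted traceback.
    """
    try:
        return list(list_fn()), None
    except Exception:
        return list(fallback), traceback.format_exc()


# Simulated listing functions (hypothetical; they replace the real hook call).
def fake_listing_ok():
    return [u'my_dag_1', u'my_dag_2']


def fake_listing_failing():
    raise RuntimeError('simulated failure inside the hook call')


ok_entries, ok_error = list_entries(fake_listing_ok)
bad_entries, bad_error = list_entries(fake_listing_failing, fallback=[u'my_dag_1'])
```

In the DAG file, the call would look like list_entries(lambda: gcs_conn.list(bucket, prefix=prefix), fallback=[u'my_dag_1']). If the fallback DAG then becomes clickable on Composer while the others stay missing, that would point to the hook call failing in the webserver. A call that hangs instead of raising would not be caught by this wrapper.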

Update

When deploying a self-managed webserver on Kubernetes following this guide: https://cloud.google.com/composer/docs/how-to/managing/deploy-webserver, my DAGs are clickable from this self-managed webserver.

[...] entries with hard-coded values instead of calling the hook method (as I showed in the Tests section) works.

@VirajParekh Actually no, I can't reproduce the problem with my local Airflow install, and that's my main concern! So I think this bug is related to Composer, but I can't find anything relevant in the logs :( – Otisotitis