I've been looking to squeeze a little more performance out of my code; recently, while browsing this Python wiki page, I found this claim:

Multiple assignment is slower than individual assignment. For example "x,y=a,b" is slower than "x=a; y=b".

Curious, I tested it (on Python 2.7):

$ python -m timeit "x, y = 1.2, -1.4"

10000000 loops, best of 3: 0.0365 usec per loop

$ python -m timeit "x = 1.2" "y = -1.4"

10000000 loops, best of 3: 0.0542 usec per loop

I repeated this several times, in different orders, etc., but the multiple assignment snippet consistently performed at least 30% better than the individual assignment. Obviously the parts of my code involving variable assignment aren't going to be the source of any significant bottlenecks, but my curiosity is piqued nonetheless. Why is multiple assignment apparently faster than individual assignment, when the documentation suggests otherwise?
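In case it helps anyone dig in, here is a minimal sketch of how the bytecode for the two snippets could be compared using the standard `dis` module. `show_bytecode` is just a helper name I made up for illustration, and I'm not assuming the disassembly by itself accounts for the timing difference; it only shows what the interpreter is asked to execute.

```python
import dis

def show_bytecode(src):
    """Compile a snippet as a module-level statement and print its bytecode.

    Hypothetical helper for illustration; returns the code object so it
    can be inspected or executed afterwards.
    """
    code = compile(src, "<snippet>", "exec")
    dis.dis(code)  # prints the disassembly to stdout
    return code

if __name__ == "__main__":
    print("multiple assignment:")
    show_bytecode("x, y = 1.2, -1.4")
    print("individual assignment:")
    show_bytecode("x = 1.2\ny = -1.4")
```

The exact opcodes will differ between interpreter versions, so the output is best read on the same Python build that produced the timings.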

EDIT:

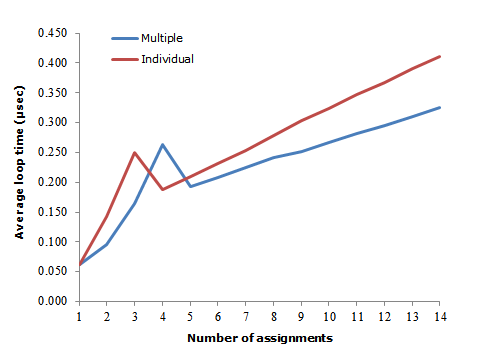

I tested assignment to more than two variables and got the following results:

The trend seems more or less consistent; can anyone reproduce it?

(CPU: Intel Core i7 @ 2.20GHz)
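For anyone who wants to try reproducing this, the comparison could be scripted roughly as follows with the `timeit` module. This is only a sketch, not the exact script behind my numbers: the variable names (`v0`, `v1`, ...), the float values, and the helpers `make_statements` and `compare` are all made up for illustration.

```python
import timeit

def make_statements(n):
    """Build the two statements to compare for n variables.

    The names v0..v(n-1) and the values 0.5, 1.5, ... are arbitrary
    placeholders chosen for this sketch.
    """
    names = ["v%d" % i for i in range(n)]
    values = ["%d.5" % i for i in range(n)]
    multiple = "%s = %s" % (", ".join(names), ", ".join(values))
    individual = "; ".join("%s = %s" % (nm, val) for nm, val in zip(names, values))
    return multiple, individual

def compare(n, number=1000000, repeat=3):
    """Return (multiple, individual) best-of-`repeat` times in usec per loop."""
    multiple, individual = make_statements(n)
    t_multi = min(timeit.repeat(multiple, number=number, repeat=repeat))
    t_indiv = min(timeit.repeat(individual, number=number, repeat=repeat))
    return t_multi / number * 1e6, t_indiv / number * 1e6

if __name__ == "__main__":
    print("n  multiple(usec)  individual(usec)")
    for n in range(2, 9):
        t_multi, t_indiv = compare(n)
        print("%d  %.4f  %.4f" % (n, t_multi, t_indiv))
```

Taking the minimum over several repeats mirrors the "best of 3" figure that `python -m timeit` reports, which should make the numbers roughly comparable with the command-line runs above.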