I am having a hard time understanding the output shape of `keras.layers.Conv2DTranspose`.

Here is the prototype:

```python
keras.layers.Conv2DTranspose(
    filters,
    kernel_size,
    strides=(1, 1),
    padding='valid',
    output_padding=None,
    data_format=None,
    dilation_rate=(1, 1),
    activation=None,
    use_bias=True,
    kernel_initializer='glorot_uniform',
    bias_initializer='zeros',
    kernel_regularizer=None,
    bias_regularizer=None,
    activity_regularizer=None,
    kernel_constraint=None,
    bias_constraint=None
)
```

In the documentation (https://keras.io/layers/convolutional/), I read:

> If `output_padding` is set to `None` (default), the output shape is inferred.

In the code (https://github.com/keras-team/keras/blob/master/keras/layers/convolutional.py), I read:

```python
out_height = conv_utils.deconv_length(height,
                                      stride_h, kernel_h,
                                      self.padding,
                                      out_pad_h,
                                      self.dilation_rate[0])
out_width = conv_utils.deconv_length(width,
                                     stride_w, kernel_w,
                                     self.padding,
                                     out_pad_w,
                                     self.dilation_rate[1])
if self.data_format == 'channels_first':
    output_shape = (batch_size, self.filters, out_height, out_width)
else:
    output_shape = (batch_size, out_height, out_width, self.filters)
```
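To get a concrete feel for that branching, here is a small pure-Python sketch (no Keras needed) that assembles the shape tuple the same way. It assumes `padding='same'` and `output_padding=None`, the case where the per-axis length from `deconv_length` reduces to `dim_size * stride_size`; the function name `transposed_output_shape` is made up for illustration.

```python
def transposed_output_shape(input_shape, filters, strides, data_format="channels_last"):
    """Sketch of how the snippet above assembles the output shape.

    Assumption: padding='same' and output_padding=None, for which
    deconv_length reduces to dim_size * stride_size on each spatial axis.
    """
    stride_h, stride_w = strides
    if data_format == "channels_first":
        batch_size, _, height, width = input_shape
    else:
        batch_size, height, width, _ = input_shape

    # Unknown (None) spatial sizes stay None, as in deconv_length.
    out_height = None if height is None else height * stride_h
    out_width = None if width is None else width * stride_w

    # The channel axis becomes `filters`, placed according to data_format.
    if data_format == "channels_first":
        return (batch_size, filters, out_height, out_width)
    return (batch_size, out_height, out_width, filters)


print(transposed_output_shape((None, 20, 20, 3), filters=8, strides=(10, 10)))
# (None, 200, 200, 8)
print(transposed_output_shape((None, 3, 20, 20), filters=8, strides=(10, 10),
                              data_format="channels_first"))
# (None, 8, 200, 200)
```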

and (https://github.com/keras-team/keras/blob/master/keras/utils/conv_utils.py):

```python
def deconv_length(dim_size, stride_size, kernel_size, padding,
                  output_padding, dilation=1):
    """Determines output length of a transposed convolution given input length.

    # Arguments
        dim_size: Integer, the input length.
        stride_size: Integer, the stride along the dimension of `dim_size`.
        kernel_size: Integer, the kernel size along the dimension of `dim_size`.
        padding: One of `"same"`, `"valid"`, `"full"`.
        output_padding: Integer, amount of padding along the output dimension,
            can be set to `None` in which case the output length is inferred.
        dilation: dilation rate, integer.

    # Returns
        The output length (integer).
    """
    assert padding in {'same', 'valid', 'full'}
    if dim_size is None:
        return None

    # Get the dilated kernel size
    kernel_size = kernel_size + (kernel_size - 1) * (dilation - 1)

    # Infer length if output padding is None, else compute the exact length
    if output_padding is None:
        if padding == 'valid':
            dim_size = dim_size * stride_size + max(kernel_size - stride_size, 0)
        elif padding == 'full':
            dim_size = dim_size * stride_size - (stride_size + kernel_size - 2)
        elif padding == 'same':
            dim_size = dim_size * stride_size
    else:
        if padding == 'same':
            pad = kernel_size // 2
        elif padding == 'valid':
            pad = 0
        elif padding == 'full':
            pad = kernel_size - 1

        dim_size = ((dim_size - 1) * stride_size + kernel_size - 2 * pad +
                    output_padding)

    return dim_size
```
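Evaluating these formulas on the numbers used below (input length 20, stride 10, kernel 3) makes them less abstract. The sketch is a condensed copy of the `deconv_length` quoted above, with identical arithmetic but restructured with early returns and a lookup table for `pad`; it is not the Keras source itself.

```python
def deconv_length(dim_size, stride_size, kernel_size, padding,
                  output_padding, dilation=1):
    """Same formulas as the conv_utils.deconv_length quoted above."""
    assert padding in {"same", "valid", "full"}
    if dim_size is None:
        return None
    # Dilated kernel size.
    kernel_size = kernel_size + (kernel_size - 1) * (dilation - 1)
    if output_padding is None:  # inferred length
        if padding == "valid":
            return dim_size * stride_size + max(kernel_size - stride_size, 0)
        if padding == "full":
            return dim_size * stride_size - (stride_size + kernel_size - 2)
        return dim_size * stride_size  # "same"
    # Exact length.
    pad = {"same": kernel_size // 2, "valid": 0, "full": kernel_size - 1}[padding]
    return (dim_size - 1) * stride_size + kernel_size - 2 * pad + output_padding


# Input length 20, stride 10, kernel 3, inferred lengths per padding mode.
for padding in ("valid", "same", "full"):
    print(padding, deconv_length(20, 10, 3, padding, None))
# valid 200
# same 200
# full 189

# With padding="same", output_padding values 0..9 each pick one length in 191..200.
print([deconv_length(20, 10, 3, "same", p) for p in range(10)])
# [191, 192, 193, 194, 195, 196, 197, 198, 199, 200]
```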

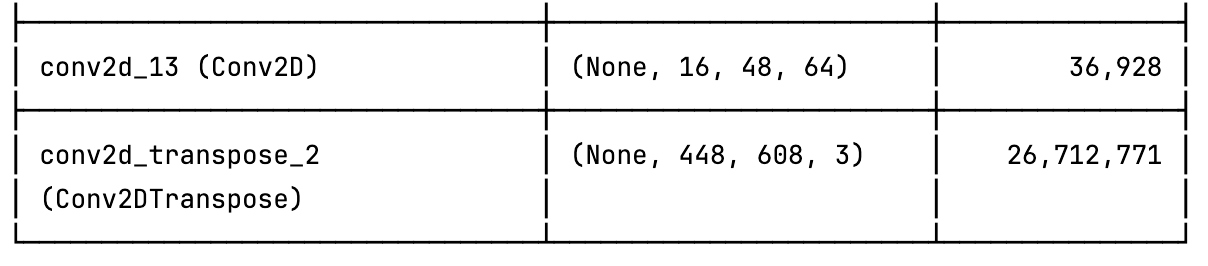

I understand that Conv2DTranspose is kind of a Conv2D, but reversed.

Since applying a Conv2D with kernel_size = (3, 3), strides = (10, 10) and padding = "same" to a 200x200 image will output a 20x20 image, I assume that applying a Conv2DTranspose with kernel_size = (3, 3), strides = (10, 10) and padding = "same" to a 20x20 image will output a 200x200 image.

Applying the same Conv2D (kernel_size = (3, 3), strides = (10, 10), padding = "same") to a 195x195 image also outputs a 20x20 image.
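The forward half of this reasoning can be checked with the usual per-axis convolution length rule: adjust the length according to the padding mode, then divide by the stride, rounding up. The function below is a standalone sketch written from that rule; Keras's `conv_utils` module has a helper with the same name and similar logic, but this copy is not taken from it.

```python
def conv_output_length(input_length, kernel_size, padding, stride, dilation=1):
    """Output length of an ordinary (forward) convolution along one axis."""
    if input_length is None:
        return None
    dilated_kernel = (kernel_size - 1) * dilation + 1
    if padding == "same":
        length = input_length
    elif padding == "valid":
        length = input_length - dilated_kernel + 1
    elif padding == "full":
        length = input_length + dilated_kernel - 1
    else:
        raise ValueError(f"unknown padding: {padding!r}")
    # Ceiling division by the stride.
    return (length + stride - 1) // stride


print(conv_output_length(200, 3, "same", 10))  # 20
print(conv_output_length(195, 3, "same", 10))  # 20
```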

So I understand that there is an ambiguity in the output shape when applying a Conv2DTranspose with kernel_size = (3, 3), strides = (10, 10) and padding = "same": the user might want the output to be 195x195, 200x200, or any of several other compatible shapes.
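With `padding='same'` the kernel size drops out of the forward length entirely, which becomes `ceil(n / stride)`. Enumerating inputs shows how wide the ambiguity is for this example; `same_conv_length` is an illustrative name, not a Keras function.

```python
def same_conv_length(n, stride):
    """Forward convolution with padding='same': ceil(n / stride)."""
    return -(-n // stride)


# Every input length that a stride-10 'same' convolution maps to 20.
compatible = [n for n in range(1, 400) if same_conv_length(n, 10) == 20]
print(compatible[0], compatible[-1], len(compatible))  # 191 200 10
```

So exactly `stride` input lengths (here ten of them) collapse onto the same output length of 20.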

I assume that "the output shape is inferred" means that a default output shape is computed from the parameters of the layer, and that there is a mechanism to specify an output shape different from the default one, if necessary.
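One way to see `output_padding` as that mechanism is to solve the exact branch of `deconv_length` (the one taken when `output_padding` is not `None`) for a target length. `output_padding_for` below is a hypothetical helper. It assumes dilation 1 and restricts the result to `0 <= output_padding < stride`, the range in which the forward convolution still maps the target back to the input length.

```python
def output_padding_for(target, dim_size, stride, kernel_size, padding):
    """Hypothetical helper: the output_padding that makes the exact branch of
    deconv_length return `target` (dilation assumed to be 1)."""
    pad = {"same": kernel_size // 2, "valid": 0, "full": kernel_size - 1}[padding]
    # Length the exact formula gives with output_padding = 0.
    base = (dim_size - 1) * stride + kernel_size - 2 * pad
    output_padding = target - base
    if not 0 <= output_padding < stride:
        raise ValueError(f"{target} is not reachable from {dim_size} with stride {stride}")
    return output_padding


print(output_padding_for(195, 20, 10, 3, "same"))  # 4
print(output_padding_for(200, 20, 10, 3, "same"))  # 9
```

The value would then be passed per axis, e.g. `Conv2DTranspose(filters, (3, 3), strides=(10, 10), padding='same', output_padding=(4, 4))` for a 195x195 output from a 20x20 input, assuming the Keras version in use accepts a tuple for `output_padding`.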

This said, I do not really understand:

- the meaning of the `output_padding` parameter;
- the interactions between the `padding` and `output_padding` parameters;
- the various formulas in the function `keras.utils.conv_utils.deconv_length`.
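A brute-force check makes the connection between these three points visible. The sketch below restates the quoted formulas (dilation fixed at 1, odd kernels only) next to a forward-convolution length function, then asserts two properties over a grid of sizes: every length produced with `output_padding` in `range(stride)` is one the matching forward convolution maps back to the input length, and the inferred branch (`output_padding=None`) equals the exact branch with a particular implied `output_padding` per padding mode. These are properties observed on the tested grid under those assumptions, not a statement from the Keras documentation.

```python
# Sketch assumptions: dilation = 1, odd kernel sizes. With an even kernel and
# padding="same", the exact branch gives (n - 1) * s + output_padding, so
# output_padding = 0 would map back to n - 1 instead of n.

def deconv_len(n, s, k, padding, output_padding):
    """The quoted deconv_length formulas, dilation fixed at 1."""
    if output_padding is None:
        if padding == "valid":
            return n * s + max(k - s, 0)
        if padding == "full":
            return n * s - (s + k - 2)
        return n * s  # "same"
    pad = {"same": k // 2, "valid": 0, "full": k - 1}[padding]
    return (n - 1) * s + k - 2 * pad + output_padding


def conv_len(m, s, k, padding):
    """Forward convolution length: ceil(padded length / s)."""
    padded = {"same": m, "valid": m - k + 1, "full": m + k - 1}[padding]
    return -(-padded // s)


# output_padding that the inferred branch (output_padding=None) implicitly uses.
IMPLIED_OUTPUT_PADDING = {
    "same": lambda s, k: s - 1,
    "valid": lambda s, k: max(s - k, 0),
    "full": lambda s, k: 0,
}

for padding in ("valid", "same", "full"):
    for n in range(10, 40):
        for s in range(1, 6):
            for k in (1, 3, 5, 7):
                # Each output_padding in range(s) gives a distinct length that
                # the forward convolution maps back to n.
                for op in range(s):
                    assert conv_len(deconv_len(n, s, k, padding, op), s, k, padding) == n
                # The inferred length is one of those candidates.
                implied = IMPLIED_OUTPUT_PADDING[padding](s, k)
                assert deconv_len(n, s, k, padding, None) == deconv_len(n, s, k, padding, implied)
print("all checks passed")
```

Read this way, `padding` fixes the offset term `kernel_size - 2 * pad`, `output_padding` selects one of the `stride` candidate lengths on top of it, and `None` lets the formula pick one: `stride - 1` for `'same'`, `max(stride - kernel_size, 0)` for `'valid'`, and 0 for `'full'`.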

Could someone explain this?

Many thanks,

Julien