Overview: A Multi-pass, Data-Mutating Method

(See here for a better answer.)

Yes, there is a way.

A method to do just this is described in the paper "Fast Reproducible Floating-Point Summation" by Demmel and Nguyen (2013). I've included both a serial and parallel implementation of it below.

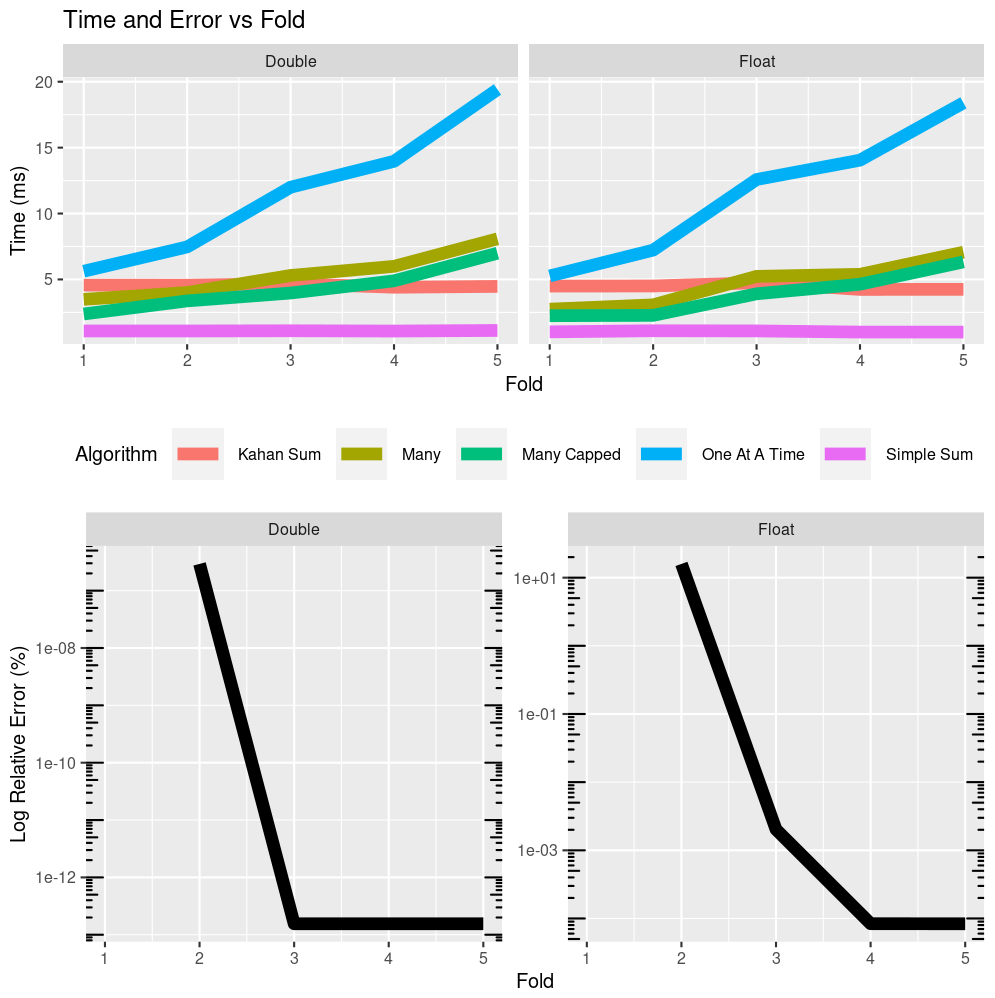

Here we'll compare three algorithms:

- Conventional left-to-right summation: this is fast, inaccurate, and non-reproducible with respect to permutations in the input order.

- Kahan summation: this is slower, very accurate (essentially O(1) in the input size), and, while non-reproducible with respect to permutations in the input order, closer to reproducibility in a narrow sense.

- Deterministic summation: this is somewhat slower than Kahan summation, can be quite accurate, and is bitwise reproducible.

Conventional summation is inaccurate because as the sum grows the least-significant digits of the numbers we add to it get silently dropped. Kahan summation overcomes this by keeping a running "compensation" sum which holds onto the least-significant digits. The deterministic summation uses a similar strategy to maintain accuracy but, before performing the summation, "pre-rounds" the input numbers to a common base so that their sum can be computed accurately without any rounding error.

Tests

To study the algorithms, we'll run a test on 1 million numbers each drawn uniformly from the range [-1000, 1000]. To demonstrate both the range of answers of the algorithms as well as their determinism, the input will be shuffled and summed 100 times. The parallel algorithms are run using 12 threads.

The "correct" summation (as determined by using Kahan summation in long double mode and choosing the most frequently occuring value) is

310844.700699143717685046795

A result I'll assert, which the code below demonstrates rigorously, is that for each data type studied the deterministic algorithm yields the same result for each of the 100 data orderings and that these results are the same for both the serial and parallel variants of the algorithm.

The results of the various algorithms are as follows:

Serial Float

=========================================================

Simple summation accumulation type = float

Average deterministic summation time = 0.00462s

Average simple summation time = 0.00144s (3.20x faster than deterministic)

Average Kahan summation time = 0.00290s (1.59x faster than deterministic)

Deterministic value = 256430592.000000000 (very different from actual)

Distinct Kahan values = 3 (with single-precision accumulator)

Distinct Simple values = 93 (with single-precision accumulator)

Distinct Simple values = 1 (with double-precision accumulator)

Parallel Float

=========================================================

Simple summation accumulation type = float

Average deterministic summation time = 0.00576s

Average simple summation time = 0.00206s (2.79x faster than deterministic)

Average Kahan summation time = 0.00599s (0.96x faster than deterministic)

Deterministic value = 256430592.000000000 (very different from actual)

Distinct Kahan values = 3 (with single-precision accumulator)

Distinct Simple values = 89 (with single-precision accumulator)

Distinct Simple values = 1 (with double-precision accumulator)

Serial Double

=========================================================

Average deterministic summation time = 0.00600s

Average simple summation time = 0.00171s (3.49x faster than deterministic)

Average Kahan summation time = 0.00378s (1.58x faster than deterministic)

Deterministic value = 310844.70069914375199005 (epsilon difference from actual value)

Distinct Kahan values = 4

Distinct Simple values = 88

Parallel Double

=========================================================

Average deterministic summation time = 0.01074s

Average simple summation time = 0.00289s (3.71x faster than deterministic)

Average Kahan summation time = 0.00648s (1.65x faster than deterministic)

Deterministic value = 310844.70069914375199005 (epsilon difference from actual value)

Distinct Kahan values = 2

Distinct Simple values = 83

Serial Long Double

=========================================================

Average deterministic summation time = 0.01072s

Average simple summation time = 0.00215s (4.96x faster than deterministic)

Average Kahan summation time = 0.00448s (2.39x faster than deterministic)

Deterministic value = 310844.700699143717685046795 (no discernable difference from actual)

Distinct Kahan values = 3

Distinct Simple values = 94

Parallel Long Double

=========================================================

Average deterministic summation time = 0.01854s

Average simple summation time = 0.00466s (3.97x faster than deterministic)

Average Kahan summation time = 0.00635s (2.91x faster than deterministic)

Deterministic value = 310844.700699143717685046795 (no discernable difference from actual)

Distinct Kahan values = 1

Distinct Simple values = 82

Discussion

So, what have we learned? From the single-precision results we see that using a double-length accumulator makes the conventional summation algorithm reproducible for this data set, though that almost certainly wouldn't be the case for arbitrary datasets. Still, this can be a cheap way to get improved accuracy when it works.

When the accumulator used by conventional summation is of the same size as the numbers being added, it works very poorly: we ran 100 tests and got nearly as many distinct results from conventional summation.

Kahan summation on the other hand produces very few distinct results (thus it is "more" deterministic) and takes approximately twice as long as conventional summation.

The deterministic algorithm takes ~4x longer than simple summation and about 1.5-3x longer than Kahan summation, but gets approximately the same answers for both the double and long double types except with bitwise determinism (it always gets the same answer no matter how the input data is sorted).

However, the single-precision floating-point gets a very bad answer, unlike the single-precision conventional and Kahan summations, which turn out to be quite close to the actual answer. Why is this?

The paper we've been working from determines that if there are n numbers in the input and we use k folding rounds (the paper recommends 2, which is what we use here), then the deterministic and conventional sums will have similar error bounds provided

n^k * e^(k-1) << 1

where e is the floating-point epsilon of the data type. These epsilon values are:

Single-precision epsilon = 0.000000119

Double-precision epsilon = 0.00000000000000022

Long double-precision epsilon = 0.000000000000000000108

Plugging these in to the equation along with n=1M we get:

Single condition = 119000

Double condition = 0.00022

Long double condition = 0.000000108

So we see that it's expected that single-precision would perform poorly for an input of this size.

Another point to note is that although long double takes 16 bytes on my machine, this is only for alignment purposes: the true length that's used for compute is only 80-bits. Therefore, two long doubles will compare numerically equally but their unused bits are arbitrary. For true bitwise reproducibility the unused bits would need to be determined and set to a known value.

Code

//Compile with:

//g++ -g -O3 repro_vector.cpp -fopenmp

//NOTE: Always comile with `-g`. It doesn't slow down your code, but does make

//it debuggable and improves ease of profiling

#include <algorithm>

#include <bitset> //Used for showing bitwise representations

#include <cfenv> //Used for setting floating-point rounding modes

#include <chrono> //Used for timing algorithms

#include <climits> //Used for showing bitwise representations

#include <iostream>

#include <omp.h> //OpenMP

#include <random>

#include <stdexcept>

#include <string>

#include <typeinfo>

#include <unordered_map>

#include <vector>

constexpr int ROUNDING_MODE = FE_UPWARD;

constexpr int N = 1'000'000;

constexpr int TESTS = 100;

// Simple timer class for tracking cumulative run time of the different

// algorithms

struct Timer {

double total = 0;

std::chrono::high_resolution_clock::time_point start_time;

Timer() = default;

void start() {

start_time = std::chrono::high_resolution_clock::now();

}

void stop() {

const auto now = std::chrono::high_resolution_clock::now();

const auto time_span = std::chrono::duration_cast<std::chrono::duration<double>>(now - start_time);

total += time_span.count();

}

};

//Simple class to enable directed rounding in floating-point math and to reset

//the rounding mode afterwards, when it goes out of scope

struct SetRoundingMode {

const int old_rounding_mode;

SetRoundingMode(const int mode) : old_rounding_mode(fegetround()) {

if(std::fesetround(mode)!=0){

throw std::runtime_error("Failed to set directed rounding mode!");

}

}

~SetRoundingMode(){

if(std::fesetround(old_rounding_mode)!=0){

throw std::runtime_error("Failed to reset rounding mode to original value!");

}

}

static std::string get_rounding_mode_string() {

switch (fegetround()) {

case FE_DOWNWARD: return "downward";

case FE_TONEAREST: return "to-nearest";

case FE_TOWARDZERO: return "toward-zero";

case FE_UPWARD: return "upward";

default: return "unknown";

}

}

};

// Used to make showing bitwise representations somewhat more intuitive

template<class T>

struct binrep {

const T val;

binrep(const T val0) : val(val0) {}

};

// Display the bitwise representation

template<class T>

std::ostream& operator<<(std::ostream& out, const binrep<T> a){

const char* beg = reinterpret_cast<const char*>(&a.val);

const char *const end = beg + sizeof(a.val);

while(beg != end){

out << std::bitset<CHAR_BIT>(*beg++);

if(beg < end)

out << ' ';

}

return out;

}

//Simple serial summation algorithm with an accumulation type we can specify

//to more fully explore its behaviour

template<class FloatType, class SimpleAccumType>

FloatType serial_simple_summation(const std::vector<FloatType> &vec){

SimpleAccumType sum = 0;

for(const auto &x: vec){

sum += x;

}

return sum;

}

//Parallel variant of the simple summation algorithm above

template<class FloatType, class SimpleAccumType>

FloatType parallel_simple_summation(const std::vector<FloatType> &vec){

SimpleAccumType sum = 0;

#pragma omp parallel for default(none) reduction(+:sum) shared(vec)

for(size_t i=0;i<vec.size();i++){

sum += vec[i];

}

return sum;

}

//Kahan's compensated summation algorithm for accurately calculating sums of

//many numbers with O(1) error

template<class FloatType>

FloatType serial_kahan_summation(const std::vector<FloatType> &vec){

FloatType sum = 0.0f;

FloatType c = 0.0f;

for (const auto &num: vec) {

const auto y = num - c;

const auto t = sum + y;

c = (t - sum) - y;

sum = t;

}

return sum;

}

//Parallel version of Kahan's compensated summation algorithm (could be improved

//by better accounting for the compsenation during the reduction phase)

template<class FloatType>

FloatType parallel_kahan_summation(const std::vector<FloatType> &vec){

//Parallel phase

std::vector<FloatType> sum(omp_get_max_threads(), 0);

FloatType c = 0;

#pragma omp parallel for default(none) firstprivate(c) shared(sum,vec)

for (size_t i=0;i<vec.size();i++) {

const auto tid = omp_get_thread_num();

const auto y = vec[i] - c;

const auto t = sum.at(tid) + y;

c = (t - sum[tid]) - y;

sum[tid] = t;

}

//Serial reduction phase

//This could be more accurate if it took the remaining compensation values

//from above into account

FloatType total_sum = 0.0f;

FloatType total_c = 0.0f;

for(const auto &num: sum){

const auto y = num - total_c;

const auto t = total_sum + y;

total_c = (t - total_sum) - y;

total_sum = t;

}

return total_sum;

}

// Error-free vector transformation. Algorithm 4 from Demmel and Nguyen (2013)

template<class FloatType>

FloatType ExtractVectorNew2(

const FloatType M,

const typename std::vector<FloatType>::iterator &begin,

const typename std::vector<FloatType>::iterator &end

){

// Should use the directed rounding mode of the parent thread

auto Mold = M;

for(auto v=begin;v!=end;v++){

auto Mnew = Mold + (*v);

auto q = Mnew - Mold;

(*v) -= q;

Mold = Mnew;

}

//This is the exact sum of high order parts q_i

//v is now the vector of low order parts r_i

return Mold - M;

}

template<class FloatType>

FloatType mf_from_deltaf(const FloatType delta_f){

const int power = std::ceil(std::log2(delta_f));

return static_cast<FloatType>(3.0) * std::pow(2, power);

}

//Implements the error bound discussed near Equation 6 of

//Demmel and Nguyen (2013).

template<class FloatType>

bool is_error_bound_appropriate(const size_t N, const int k){

const auto eps = std::numeric_limits<FloatType>::epsilon();

const auto ratio = std::pow(N, k) * std::pow(eps, k-1);

//If ratio << 1, then the conventional non-reproducible sum and the

//deterministic sum will have error bounds of the same order. We arbitrarily

//choose 1e-4 to represent this

return ratio < 1e-3;

}

//Serial bitwise deterministic summation.

//Algorithm 8 from Demmel and Nguyen (2013).

template<class FloatType>

FloatType serial_bitwise_deterministic_summation(

std::vector<FloatType> vec, // Note that we're making a copy!

const int k

){

constexpr FloatType eps = std::numeric_limits<FloatType>::epsilon();

const auto n = vec.size();

const auto adr = SetRoundingMode(ROUNDING_MODE);

if(n==0){

return 0;

}

if(!is_error_bound_appropriate<FloatType>(vec.size(), k)){

std::cout<<"WARNING! Error bounds of deterministic sum are large relative to conventional summation!"<<std::endl;

}

FloatType m = std::abs(vec.front());

for(const auto &x: vec){

m = std::max(m, std::abs(x));

}

FloatType delta_f = n * m / (1 - 4 * (n + 1) * eps);

FloatType Mf = mf_from_deltaf(delta_f);

std::vector<FloatType> Tf(k);

for(int f=0;f<k-1;f++){

Tf[f] = ExtractVectorNew2<FloatType>(Mf, vec.begin(), vec.end());

delta_f = n * (4 * eps * Mf / 3) / (1 - 4 * (n + 1) * eps);

Mf = mf_from_deltaf(delta_f);

}

FloatType M = Mf;

for(const FloatType &v: vec){

M += v;

}

Tf[k-1] = M - Mf;

FloatType T = 0;

for(const FloatType &tf: Tf){

T += tf;

}

return T;

}

//Parallel bitwise deterministic summation.

//Algorithm 9 from Demmel and Nguyen (2013).

template<class FloatType>

FloatType parallel_bitwise_deterministic_summation(

std::vector<FloatType> vec, // Note that we're making a copy!

const int k

){

constexpr FloatType eps = std::numeric_limits<FloatType>::epsilon();

const auto n = vec.size();

const auto adr = SetRoundingMode(ROUNDING_MODE);

if(n==0){

return 0;

}

if(!is_error_bound_appropriate<FloatType>(vec.size(), k)){

std::cout<<"WARNING! Error bounds of deterministic sum are large relative to conventional summation!"<<std::endl;

}

std::vector<FloatType> Tf(k);

// Note that this reduction would require communication if we had

// implemented this to work on multiple nodes

FloatType m = std::abs(vec.front());

#pragma omp parallel for default(none) reduction(max:m) shared(vec)

for(size_t i=0;i<vec.size();i++){

m = std::max(m, std::abs(vec[i]));

}

// Note that this reduction would require communication if we had

// implemented this to work on multiple nodes

#pragma omp declare reduction(vec_plus : std::vector<FloatType> : \

std::transform(omp_out.begin(), omp_out.end(), omp_in.begin(), omp_out.begin(), std::plus<FloatType>())) \

initializer(omp_priv = decltype(omp_orig)(omp_orig.size()))

#pragma omp parallel default(none) reduction(vec_plus:Tf) shared(k,m,n,vec,std::cout)

{

const auto adr = SetRoundingMode(ROUNDING_MODE);

const auto threads = omp_get_num_threads();

const auto tid = omp_get_thread_num();

const auto values_per_thread = n / threads;

const auto nlow = tid * values_per_thread;

const auto nhigh = (tid<threads-1) ? ((tid+1) * values_per_thread) : n;

FloatType delta_f = n * m / (1 - 4 * (n + 1) * eps);

FloatType Mf = mf_from_deltaf(delta_f);

for(int f=0;f<k-1;f++){

Tf[f] = ExtractVectorNew2<FloatType>(Mf, vec.begin() + nlow, vec.begin() + nhigh);

delta_f = n * (4 * eps * Mf / 3) / (1 - 4 * (n + 1) * eps);

Mf = mf_from_deltaf(delta_f);

}

FloatType M = Mf;

for(size_t i=nlow;i<nhigh;i++){

M += vec[i];

}

Tf[k-1] = M - Mf;

}

FloatType T = 0;

for(const FloatType &tf: Tf){

T += tf;

}

return T;

}

//Convenience wrappers

template<bool Parallel, class FloatType>

FloatType bitwise_deterministic_summation(

const std::vector<FloatType> &vec, // Note that we're making a copy!

const int k

){

if(Parallel){

return parallel_bitwise_deterministic_summation<FloatType>(vec, k);

} else {

return serial_bitwise_deterministic_summation<FloatType>(vec, k);

}

}

template<bool Parallel, class FloatType, class SimpleAccumType>

FloatType simple_summation(const std::vector<FloatType> &vec){

if(Parallel){

return parallel_simple_summation<FloatType, SimpleAccumType>(vec);

} else {

return serial_simple_summation<FloatType, SimpleAccumType>(vec);

}

}

template<bool Parallel, class FloatType>

FloatType kahan_summation(const std::vector<FloatType> &vec){

if(Parallel){

return serial_kahan_summation<FloatType>(vec);

} else {

return parallel_kahan_summation<FloatType>(vec);

}

}

// Timing tests for the summation algorithms

template<bool Parallel, class FloatType, class SimpleAccumType>

FloatType PerformTestsOnData(

const int TESTS,

std::vector<FloatType> floats, //Make a copy so we use the same data for each test

std::mt19937 gen //Make a copy so we use the same data for each test

){

Timer time_deterministic;

Timer time_kahan;

Timer time_simple;

//Very precise output

std::cout.precision(std::numeric_limits<FloatType>::max_digits10);

std::cout<<std::fixed;

std::cout<<"Parallel? "<<Parallel<<std::endl;

if(Parallel){

std::cout<<"Max threads = "<<omp_get_max_threads()<<std::endl;

}

std::cout<<"Floating type = "<<typeid(FloatType).name()<<std::endl;

std::cout<<"Floating type epsilon = "<<std::numeric_limits<FloatType>::epsilon()<<std::endl;

std::cout<<"Simple summation accumulation type = "<<typeid(SimpleAccumType).name()<<std::endl;

std::cout<<"Number of tests = "<<TESTS<<std::endl;

std::cout<<"Input sample = "<<std::endl;

for(size_t i=0;i<10;i++){

std::cout<<"\t"<<floats[i]<<std::endl;

}

//Get a reference value

std::unordered_map<FloatType, uint32_t> simple_sums;

std::unordered_map<FloatType, uint32_t> kahan_sums;

const auto ref_val = bitwise_deterministic_summation<Parallel, FloatType>(floats, 2);

for(int test=0;test<TESTS;test++){

std::shuffle(floats.begin(), floats.end(), gen);

time_deterministic.start();

const auto my_val = bitwise_deterministic_summation<Parallel, FloatType>(floats, 2);

time_deterministic.stop();

if(ref_val!=my_val){

std::cout<<"ERROR: UNEQUAL VALUES ON TEST #"<<test<<"!"<<std::endl;

std::cout<<"Reference = "<<ref_val <<std::endl;

std::cout<<"Current = "<<my_val <<std::endl;

std::cout<<"Reference bits = "<<binrep<FloatType>(ref_val)<<std::endl;

std::cout<<"Current bits = "<<binrep<FloatType>(my_val) <<std::endl;

throw std::runtime_error("Values were not equal!");

}

time_kahan.start();

const auto kahan_sum = kahan_summation<Parallel, FloatType>(floats);

kahan_sums[kahan_sum]++;

time_kahan.stop();

time_simple.start();

const auto simple_sum = simple_summation<Parallel, FloatType, SimpleAccumType>(floats);

simple_sums[simple_sum]++;

time_simple.stop();

}

std::cout<<"Average deterministic summation time = "<<(time_deterministic.total/TESTS)<<std::endl;

std::cout<<"Average simple summation time = "<<(time_simple.total/TESTS)<<std::endl;

std::cout<<"Average Kahan summation time = "<<(time_kahan.total/TESTS)<<std::endl;

std::cout<<"Ratio Deterministic to Simple = "<<(time_deterministic.total/time_simple.total)<<std::endl;

std::cout<<"Ratio Deterministic to Kahan = "<<(time_deterministic.total/time_kahan.total)<<std::endl;

std::cout<<"Reference value = "<<std::fixed<<ref_val<<std::endl;

std::cout<<"Reference bits = "<<binrep<FloatType>(ref_val)<<std::endl;

std::cout<<"Distinct Kahan values = "<<kahan_sums.size()<<std::endl;

std::cout<<"Distinct Simple values = "<<simple_sums.size()<<std::endl;

int count = 0;

for(const auto &kv: kahan_sums){

std::cout<<"\tKahan sum values (N="<<std::fixed<<kv.second<<") "<<kv.first<<" ("<<binrep<FloatType>(kv.first)<<")"<<std::endl;

if(count++==10){

break;

}

}

count = 0;

for(const auto &kv: simple_sums){

std::cout<<"\tSimple sum values (N="<<std::fixed<<kv.second<<") "<<kv.first<<" ("<<binrep<FloatType>(kv.first)<<")"<<std::endl;

if(count++==10){

break;

}

}

std::cout<<std::endl;

return ref_val;

}

// Use this to make sure the tests are reproducible

template<class FloatType, class SimpleAccumType>

void PerformTests(

const int TESTS,

const std::vector<long double> &long_floats,

std::mt19937 &gen

){

std::vector<FloatType> floats(long_floats.begin(), long_floats.end());

const auto serial_val = PerformTestsOnData<false, FloatType, SimpleAccumType>(TESTS, floats, gen);

const auto parallel_val = PerformTestsOnData<true, FloatType, SimpleAccumType>(TESTS, floats, gen);

//Note that the `long double` type may only use 12-16 bytes (to maintain

//alignment), but only 80 bits, resulting in bitwise indeterminism in the last

//few bits; however, the floating-point values themselves will be equal.

std::cout<<"########################################"<<std::endl;

std::cout<<"### Serial and Parallel values match for "

<<typeid(FloatType).name()

<<"? "

<<(serial_val==parallel_val)

<<std::endl;

std::cout<<"########################################\n"<<std::endl;

}

int main(){

std::random_device rd;

// std::mt19937 gen(rd()); //Enable for randomness

std::mt19937 gen(123456789); //Enable for reproducibility

std::uniform_real_distribution<long double> distr(-1000, 1000);

std::vector<long double> long_floats;

for(int i=0;i<N;i++){

long_floats.push_back(distr(gen));

}

PerformTests<double, double>(TESTS, long_floats, gen);

PerformTests<long double, long double>(TESTS, long_floats, gen);

PerformTests<float, float>(TESTS, long_floats, gen);

PerformTests<float, double>(TESTS, long_floats, gen);

return 0;

}

Edit for Eric Postpischil

Eric says:

A generator such as I described will produce numbers with largely the same quantum—all near multiples of the divisor. This may not include a wide variety of exponents in the samples, so it may not well model a distribution where the numbers are being rounded in the interior of the significand when being added. E.g., if we add many numbers of the form 123.45, they will add fine for a while, although rounding errors will grow as the partial sums accumulate. But if we add numbers of forms 12345, 123.45, and 1.2345, different errors occur earlier

Adding 1M values of 123.45 gives a single long double Kahan value of 123450000.000000002837623469532. The deterministic long double value differs from this by -0.00000000000727595761 while the deterministic double value differs by -0.00000001206353772047 while the deterministic float differs by -3.68% (as expected given its large epsilon).

Choosing 1M values randomly from the set {1.2345, 12.345, 123.45, 1234.5, 12345} gives two long double Kahan values: A=2749592287.563000000780448317528 (N=54) and B=2749592287.563000000547617673874 (N=46). The deterministic long double value matches (A) exactly; the deterministic double value differs from (A) by -0.00000020139850676247; the deterministic float value differs from (A) by -257% (again, expected).

2**256digits for float32 (plus some spare depending on the maximal number of inputs), or2**2048digits for float64. – Trustingdoubleas auint64_tand just sum ints together? associativity would come for free, and parallel sums trivial. you might also want to sum the doubles for a human to see, but the test would just be looking at the bits – Mickey