I've implemented screen recording with RPScreenRecorder, which records the screen as well as mic audio. After multiple recordings are completed, I stop recording, merge each audio file with its video using AVMutableComposition, and then merge all the videos into a single video.

For screen recording and getting the video and audio files, I am using:

    - (void)startCaptureWithHandler:(nullable void(^)(CMSampleBufferRef sampleBuffer, RPSampleBufferType bufferType, NSError * _Nullable error))captureHandler completionHandler:

To stop the recording, I call this method:

    - (void)stopCaptureWithHandler:(void (^)(NSError *error))handler;

Both are pretty straightforward.

Most of the time it works great: I receive both video and audio CMSampleBuffers.

But sometimes startCaptureWithHandler sends me only audio buffers and no video buffers.

Once I encounter this problem, it doesn't go away until I restart my device and reinstall the app. This makes my app unreliable for the user.

I think this is a ReplayKit issue, but I have been unable to find related reports from other developers.

Let me know if any of you has come across this issue and found a solution.

I have checked multiple times but haven't seen any issue in the configuration. Here it is anyway.

    NSError *videoWriterError;
    videoWriter = [[AVAssetWriter alloc] initWithURL:fileString fileType:AVFileTypeQuickTimeMovie
                                               error:&videoWriterError];

    NSError *audioWriterError;
    audioWriter = [[AVAssetWriter alloc] initWithURL:audioFileString fileType:AVFileTypeAppleM4A
                                               error:&audioWriterError];

    CGFloat width = UIScreen.mainScreen.bounds.size.width;
    NSString *widthString = [NSString stringWithFormat:@"%f", width];
    CGFloat height = UIScreen.mainScreen.bounds.size.height;
    NSString *heightString = [NSString stringWithFormat:@"%f", height];

    NSDictionary *videoOutputSettings = @{AVVideoCodecKey : AVVideoCodecTypeH264,
                                          AVVideoWidthKey : widthString,
                                          AVVideoHeightKey : heightString};

    videoInput = [[AVAssetWriterInput alloc] initWithMediaType:AVMediaTypeVideo outputSettings:videoOutputSettings];
    videoInput.expectsMediaDataInRealTime = true;

    AudioChannelLayout acl;
    bzero(&acl, sizeof(acl));
    acl.mChannelLayoutTag = kAudioChannelLayoutTag_Mono;

    NSDictionary *audioOutputSettings = [NSDictionary dictionaryWithObjectsAndKeys:
                                         [NSNumber numberWithInt:kAudioFormatAppleLossless], AVFormatIDKey,
                                         [NSNumber numberWithInt:16], AVEncoderBitDepthHintKey,
                                         [NSNumber numberWithFloat:44100.0], AVSampleRateKey,
                                         [NSNumber numberWithInt:1], AVNumberOfChannelsKey,
                                         [NSData dataWithBytes:&acl length:sizeof(acl)], AVChannelLayoutKey,
                                         nil];

    audioInput = [[AVAssetWriterInput alloc] initWithMediaType:AVMediaTypeAudio outputSettings:audioOutputSettings];
    [audioInput setExpectsMediaDataInRealTime:YES];

    [videoWriter addInput:videoInput];
    [audioWriter addInput:audioInput];

    [[AVAudioSession sharedInstance] setCategory:AVAudioSessionCategoryPlayAndRecord withOptions:AVAudioSessionCategoryOptionDefaultToSpeaker error:nil];

    [RPScreenRecorder.sharedRecorder startCaptureWithHandler:^(CMSampleBufferRef _Nonnull sampleBuffer, RPSampleBufferType bufferType, NSError * _Nullable myError) {
        // Block
    }

The startCaptureWithHandler block is pretty straightforward as well:

    [RPScreenRecorder.sharedRecorder startCaptureWithHandler:^(CMSampleBufferRef _Nonnull sampleBuffer, RPSampleBufferType bufferType, NSError * _Nullable myError) {
        dispatch_sync(dispatch_get_main_queue(), ^{
            if (CMSampleBufferDataIsReady(sampleBuffer))
            {
                if (self->videoWriter.status == AVAssetWriterStatusUnknown)
                {
                    self->writingStarted = true;
                    [self->videoWriter startWriting];
                    [self->videoWriter startSessionAtSourceTime:CMSampleBufferGetPresentationTimeStamp(sampleBuffer)];
                    [self->audioWriter startWriting];
                    [self->audioWriter startSessionAtSourceTime:CMSampleBufferGetPresentationTimeStamp(sampleBuffer)];
                }
                if (self->videoWriter.status == AVAssetWriterStatusFailed) {
                    return;
                }
                if (bufferType == RPSampleBufferTypeVideo)
                {
                    if (self->videoInput.isReadyForMoreMediaData)
                    {
                        [self->videoInput appendSampleBuffer:sampleBuffer];
                    }
                }
                else if (bufferType == RPSampleBufferTypeAudioMic)
                {
                    // printf("\n+++ bufferAudio received %d \n",arc4random_uniform(100));
                    if (writingStarted) {
                        if (self->audioInput.isReadyForMoreMediaData)
                        {
                            [self->audioInput appendSampleBuffer:sampleBuffer];
                        }
                    }
                }
            }
        });
    }
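The handler above never inspects `myError`, so an error reported by ReplayKit would go unnoticed. As a minimal diagnostic sketch, assuming a hypothetical helper named `LogCaptureCallback` (my own name for illustration, not part of ReplayKit), each callback could log the error or the buffer type it received, which would show whether video buffers have stopped arriving or an error is being delivered instead:

```objc
#import <Foundation/Foundation.h>
#import <ReplayKit/ReplayKit.h>

// Hypothetical helper (not a ReplayKit API): logs what the capture handler
// was called with. Intended only for debugging, not for production builds.
static void LogCaptureCallback(RPSampleBufferType bufferType, NSError * _Nullable error) {
    if (error != nil) {
        // ReplayKit passed an error to the capture handler.
        NSLog(@"capture handler error: %@ (domain %@, code %ld)",
              error.localizedDescription, error.domain, (long)error.code);
        return;
    }

    // Map the buffer type to a readable name.
    NSString *name = @"unknown";
    switch (bufferType) {
        case RPSampleBufferTypeVideo:    name = @"video";    break;
        case RPSampleBufferTypeAudioApp: name = @"audioApp"; break;
        case RPSampleBufferTypeAudioMic: name = @"audioMic"; break;
    }
    NSLog(@"received %@ buffer", name);
}
```

It would be called as `LogCaptureCallback(bufferType, myError);` on the first line of the handler block. Logging every buffer is noisy, so this is only meant for a short test run while reproducing the problem.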

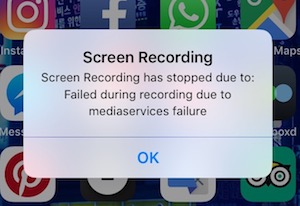

Also, when this situation occurs, the system screen recorder stops working as well. Tapping the system recorder shows this error:

The error says "Screen recording has stopped due to: Failure during recording due to Mediaservices error".

There are two possible reasons:

- ReplayKit on iOS is in beta, which is why it starts causing problems after some time of use.

- I have implemented some problematic logic, which is causing ReplayKit to crash.

If it's issue no. 1, then no problem. If it's issue no. 2, then I need to know where I might be going wrong.

Opinions and help would be appreciated.