I have a 151-by-151 matrix A. It's a correlation matrix, so there are 1s on the main diagonal and repeated values above and below the main diagonal. Each row/column represents a person.

For a given integer n I will seek to reduce the size of the matrix by kicking people out, such that I am left with a n-by-n correlation matrix that minimises the total sum of the elements. In addition to obtaining the abbreviated matrix, I also need to know the row number of the people who should be booted out of the original matrix (or their column number - they'll be the same number).

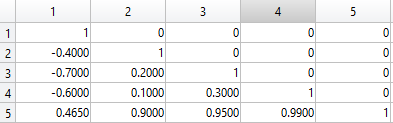

As a starting point I take A = tril(A), which will remove redundant off-diagonal elements from the correlation matrix.

So, if n = 4 and we have the hypothetical 5-by-5 matrix above, it's very clear that person 5 should be kicked out of the matrix, since that person is contributing a lot of very high correlations.

It's also clear that person 1 should not be kicked out, since that person contributes a lot of negative correlations, and thus brings down the sum of the matrix elements.

I understand that sum(A(:)) will sum everything in the matrix. However, I'm very unclear about how to search for the minimum possible answer.

I noticed a similar question Finding sub-matrix with minimum elementwise sum, which has a brute force solution as the accepted answer. While that answer works fine there it's impractical for a 151-by-151 matrix.

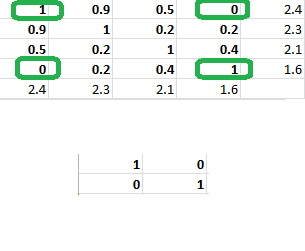

EDIT: I had thought of iterating, but I don't think that truly minimizes the sum of elements in the reduced matrix. Below I have a 4-by-4 correlation matrix in bold, with sums of rows and columns on the edges. It's apparent that with n = 2 the optimal matrix is the 2-by-2 identity matrix involving Persons 1 and 4, but according to the iterative scheme I would have kicked out Person 1 in the first phase of iteration, and so the algorithm makes a solution that is not optimal. I wrote a program that always generated optimal solutions, and it works well when n or k are small, but when trying to make an optimal 75-by-75 matrix from a 151-by-151 matrix I realised my program would take billions of years to terminate.

I vaguely recalled that sometimes these n choose k problems can be resolved with dynamic programming approaches that avoid recomputing things, but I can't work out how to solve this, and nor did googling enlighten me.

I'm willing to sacrifice precision for speed if there's no other option, or the best program will take more than a week to generate a precise solution. However, I'm happy to let a program run for up to a week if it will generate a precise solution.

If it's not possible for a program to optimise the matrix within an reasonable timeframe, then I would accept an answer that explains why n choose k tasks of this particular sort can't be resolved within reasonable timeframes.

sum(A, 2)returns the sum of each row. – Houdonxto minimizex'*S*xwhereSis a given symmetric, positive definite matrix andxis subject to the constraints that the entries ofxare either1or0and the elements ofxsum ton. Correct? – Paronychia