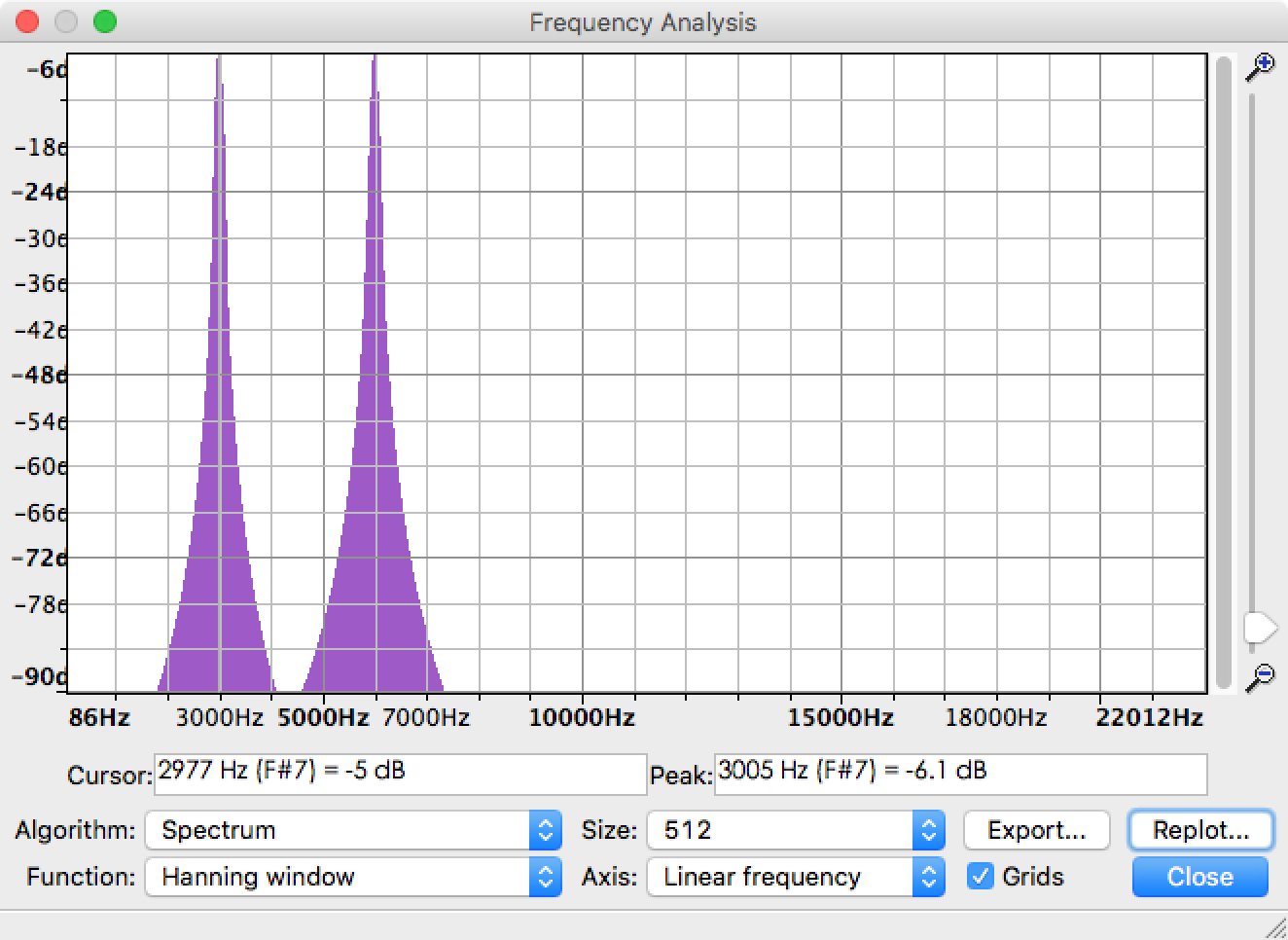

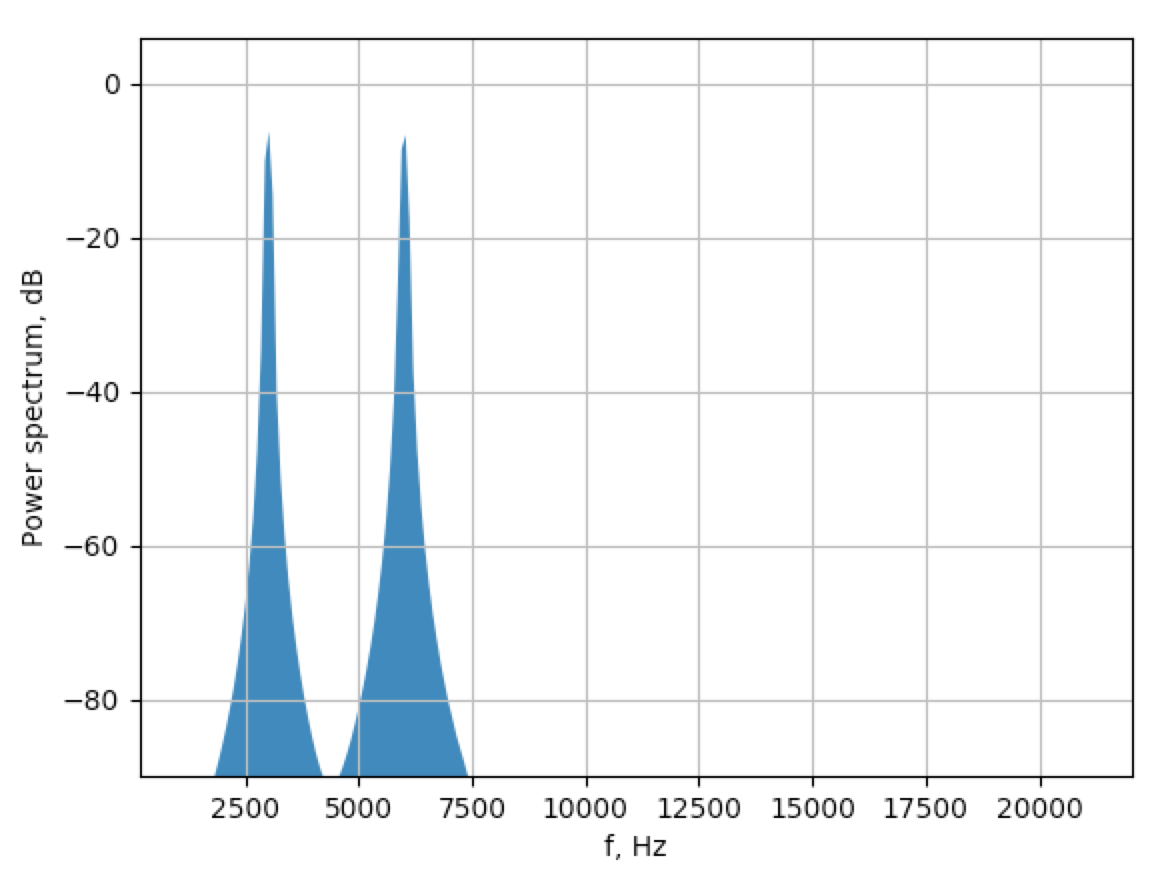

I'm using an FFT to extract the amplitude of each frequency component in an audio file. Audacity already has a function called Plot Spectrum that does this. For this example audio file, which is composed of a 3 kHz sine and a 6 kHz sine, the spectrum looks like the following picture. The peaks are at 3 kHz and 6 kHz, with no extra frequencies.

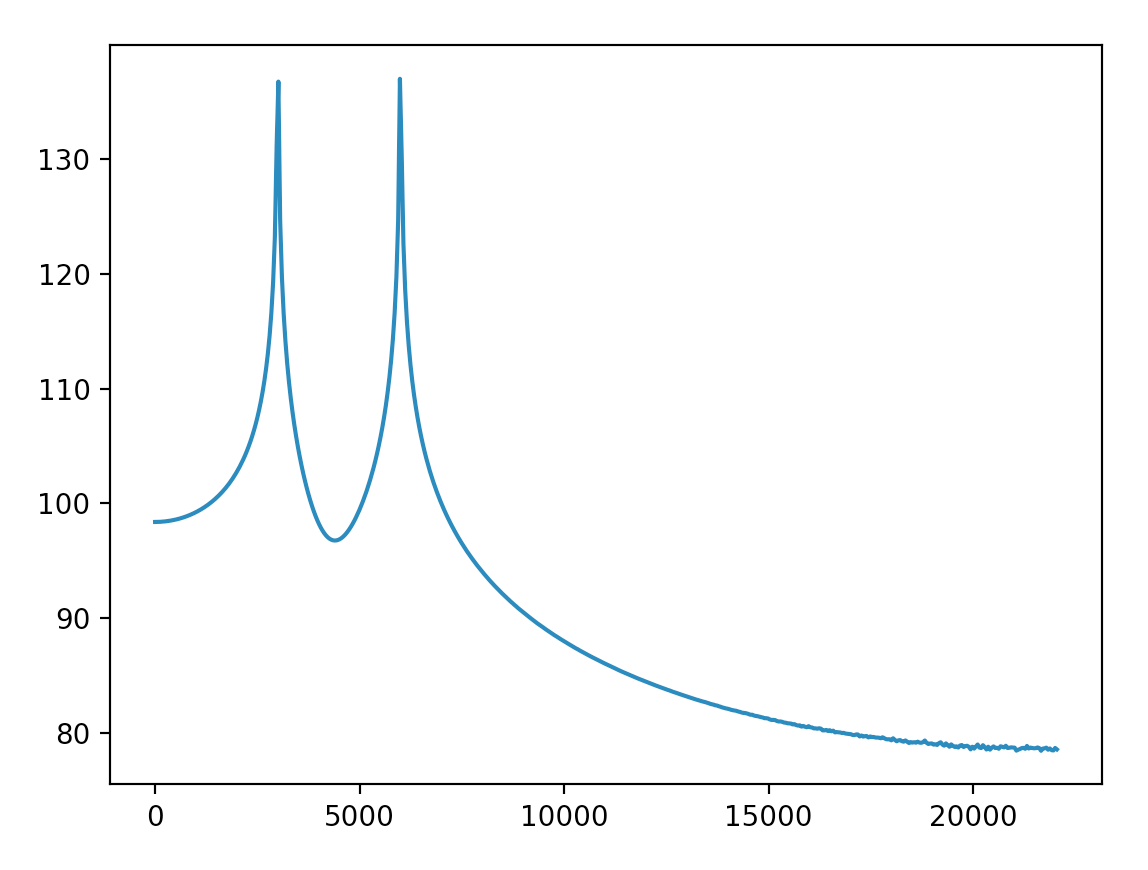

Now I need to implement the same function and plot a similar result in Python. With the help of `rfft` I'm close to the Audacity result, but I still have a few questions about the result I get.

- What is the physical meaning of the amplitude in the second picture?

- How do I normalize the amplitude to 0 dB, as Audacity does?

- Why do the frequencies above 6 kHz have such a high amplitude (≥ 90)? Can I scale those frequencies down to a relatively low level?

Related code:

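    import numpy as np
    import matplotlib.pyplot as plt
    from scipy.io import wavfile

    # Use the sample rate stored in the file instead of hard-coding 44100
    fs, x = wavfile.read('sine3k6k.wav')

    # Magnitude of the one-sided spectrum (unnormalized)
    rfft = np.abs(np.fft.rfft(x))
    # Small floor avoids log10(0) for empty bins
    p = 20 * np.log10(np.maximum(rfft, 1e-12))
    # Frequency axis from 0 to fs/2, one point per bin
    f = np.linspace(0, fs / 2, len(p))

    plt.plot(f, p)
    plt.show()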
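As a sanity check on what the raw values mean, here is a minimal sketch on a synthetic signal (not the original WAV; the names `amplitude`, `n`, and `tone_hz` are made up for illustration). For a sine that falls exactly on an FFT bin, the unnormalized `np.fft.rfft` magnitude peaks at roughly amplitude × N / 2, so the plotted level depends on both the sample format and the length of the file.

```python
import numpy as np

fs = 44100        # sample rate in Hz (assumed, matches the question)
n = 44100         # one second of audio, so bins are 1 Hz apart
amplitude = 0.5   # hypothetical sine amplitude
tone_hz = 3000    # lands exactly on bin 3000

t = np.arange(n) / fs
x = amplitude * np.sin(2 * np.pi * tone_hz * t)

mag = np.abs(np.fft.rfft(x))
peak_bin = int(np.argmax(mag))
peak_value = mag[peak_bin]

# The raw peak is about amplitude * n / 2, so dividing by n / 2
# recovers the sine amplitude.
recovered = peak_value / (n / 2)
print(peak_bin, peak_value, recovered)
```

With 16-bit integer samples and a file several seconds long, the same relation would likely explain raw dB values far above 0.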

Update

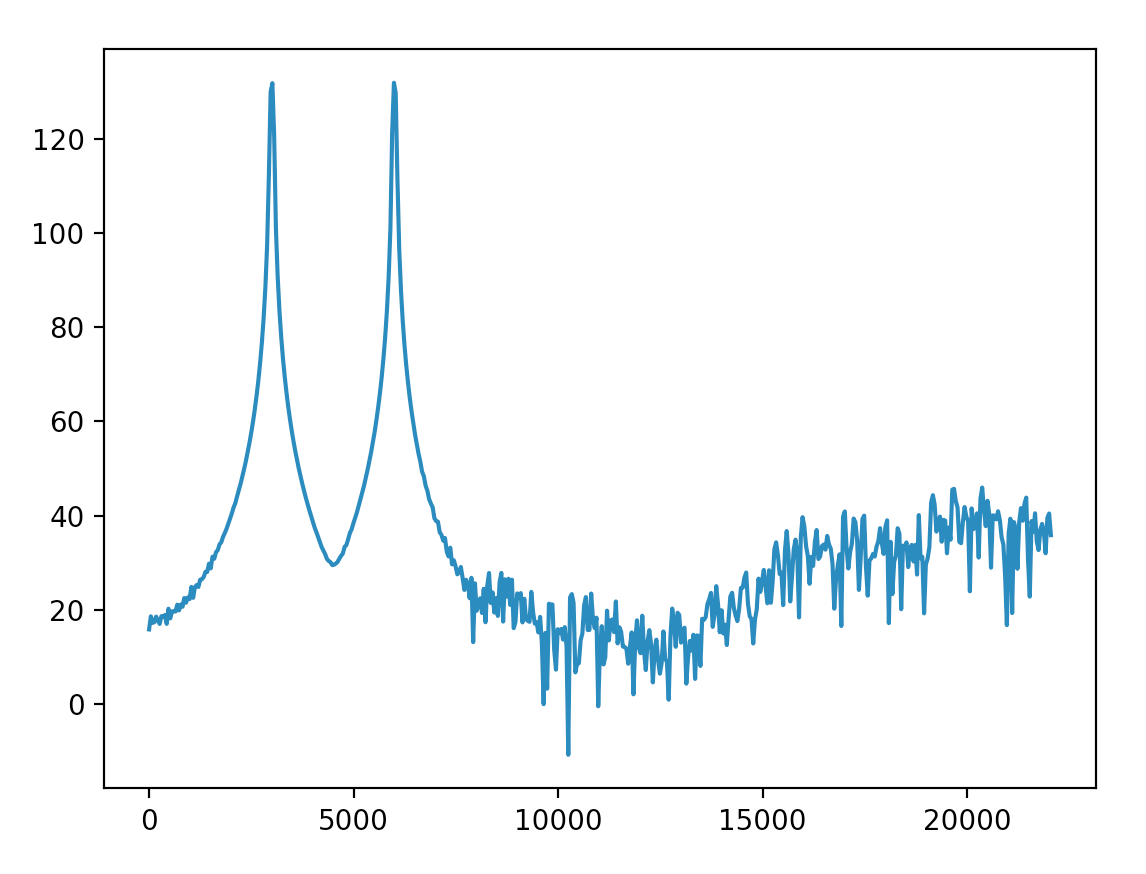

I multiplied the whole signal by a Hanning window (is that correct?) and got this. Most of the skirt amplitudes are now below 40.

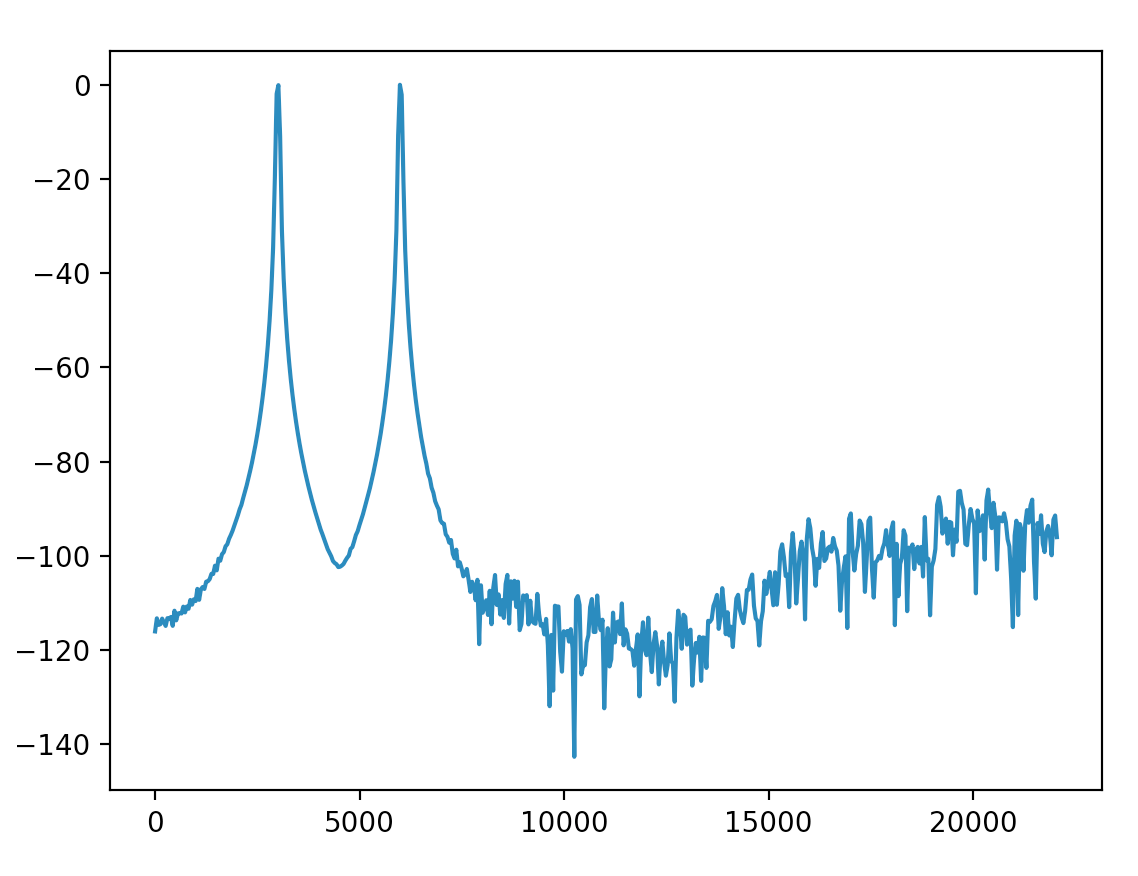

Then I scaled the y-axis to decibels, as @Mateen Ulhaq suggested. The result is closer to the Audacity one. Can I treat amplitudes below -90 dB as so low that they can be ignored?

Updated code:

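    import numpy as np
    from scipy.io import wavfile

    fs, x = wavfile.read('input/sine3k6k.wav')
    # Apply a Hanning window over the whole signal
    x = x * np.hanning(len(x))
    rfft = np.abs(np.fft.rfft(x))
    # Normalize to the largest bin so the peak sits at 0 dB
    rfft_max = rfft.max()
    # Small floor avoids log10(0)
    p = 20 * np.log10(np.maximum(rfft / rfft_max, 1e-12))
    f = np.linspace(0, fs / 2, len(p))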

About the bounty

With the code in the update above, I can measure the frequency components in decibels, and the highest possible value is 0 dB. But the method only works for one specific audio file, because it uses the `rfft_max` of that file. I want to measure the frequency components of multiple audio files against one common reference, just like Audacity does.

I also started a discussion in the Audacity forum, but it is still not clear to me how to implement this.
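To make the goal of a common reference for all files concrete, one possible approach is to express levels relative to a full-scale sine (dBFS) instead of relative to each file's own maximum. The sketch below is only an illustration under stated assumptions: mono input, 16-bit integer samples (hence the `full_scale=32768.0` default), and a hypothetical helper name `spectrum_dbfs`. It is not claimed to reproduce Audacity's exact scaling.

```python
import numpy as np

def spectrum_dbfs(samples, full_scale=32768.0):
    """Hann-windowed magnitude spectrum in dB relative to a full-scale sine.

    full_scale is an assumption: 32768 suits 16-bit integer WAV data.
    """
    x = np.asarray(samples, dtype=np.float64) / full_scale
    window = np.hanning(len(x))
    mag = np.abs(np.fft.rfft(x * window))
    # A sine of amplitude 1 peaks at about sum(window) / 2, so dividing by
    # that makes a full-scale sine read close to 0 dB for any file length.
    mag /= window.sum() / 2
    return 20 * np.log10(np.maximum(mag, 1e-12))  # floor avoids log10(0)

# Synthetic check: a 3 kHz sine at half of full scale.
fs = 44100
t = np.arange(fs) / fs
half_scale = 16384 * np.sin(2 * np.pi * 3000 * t)
peak_db = spectrum_dbfs(half_scale).max()
print(round(peak_db, 2))
```

Because the reference no longer depends on the loudest bin of a particular file, a half-scale sine should come out near -6 dB regardless of which file it appears in.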

- `rfft = np.abs(np.fft.rfft(x))` -> `rfft = np.abs(np.fft.rfft(x)) / len(x)` (to deal with the implicit scale factor in the FFT). – Lung

- […] `rfft` be divided by the signal length? – Barry

- […] `x` whose amplitude is in [-1.0, 1.0] and calculate the reference using `np.abs(np.fft.rfft(x)) / len(x)`. But if I applied the reference to another audio, the result has much difference to the spectrum dB in Audacity. – Barry

- […] `N`. It should be size like 512, 1024, etc. But I cannot find how to use this `size` in my code. My purpose is to get strength of frequency components in whole audio files. – Barry
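One possible way to use a `size` such as 512 or 1024 while still covering the whole file is to split the signal into frames of that length, window each frame, and average the power across frames. The sketch below imitates that idea only. It assumes mono 16-bit input, uses non-overlapping frames, and the helper name `averaged_spectrum_db` is hypothetical. Audacity's own implementation and scaling may differ.

```python
import numpy as np

def averaged_spectrum_db(samples, size=1024, full_scale=32768.0):
    """Average the power of Hann-windowed frames of `size` samples (mono input)."""
    x = np.asarray(samples, dtype=np.float64) / full_scale
    n_frames = len(x) // size
    if n_frames == 0:
        raise ValueError("signal is shorter than one frame")
    # Drop the incomplete tail and cut the rest into non-overlapping frames.
    frames = x[: n_frames * size].reshape(n_frames, size)
    window = np.hanning(size)
    # Scale so a full-scale sine centered on a bin reads close to 0 dB.
    mag = np.abs(np.fft.rfft(frames * window, axis=1)) / (window.sum() / 2)
    mean_power = (mag ** 2).mean(axis=0)
    return 10 * np.log10(np.maximum(mean_power, 1e-24))  # floor avoids log10(0)

# Synthetic stand-in for the 3 kHz + 6 kHz test file (5 seconds, 16-bit range).
fs = 44100
size = 1024
t = np.arange(5 * fs) / fs
x = 0.5 * 32767 * (np.sin(2 * np.pi * 3000 * t) + np.sin(2 * np.pi * 6000 * t))

db = averaged_spectrum_db(x, size=size)
freqs = np.fft.rfftfreq(size, d=1 / fs)

# Strongest bin below and above 4.5 kHz.
low = freqs < 4500
peak_low = freqs[low][np.argmax(db[low])]
peak_high = freqs[~low][np.argmax(db[~low])]
print(peak_low, peak_high)
```

The frequency resolution here is `fs / size` (about 43 Hz for 1024 samples at 44.1 kHz), so the reported peaks land on the nearest bins and not exactly on 3000 Hz and 6000 Hz.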