Question: How do I quickly update, save, and set relationships between tables with lots of data when one or both of the tables are already saved?

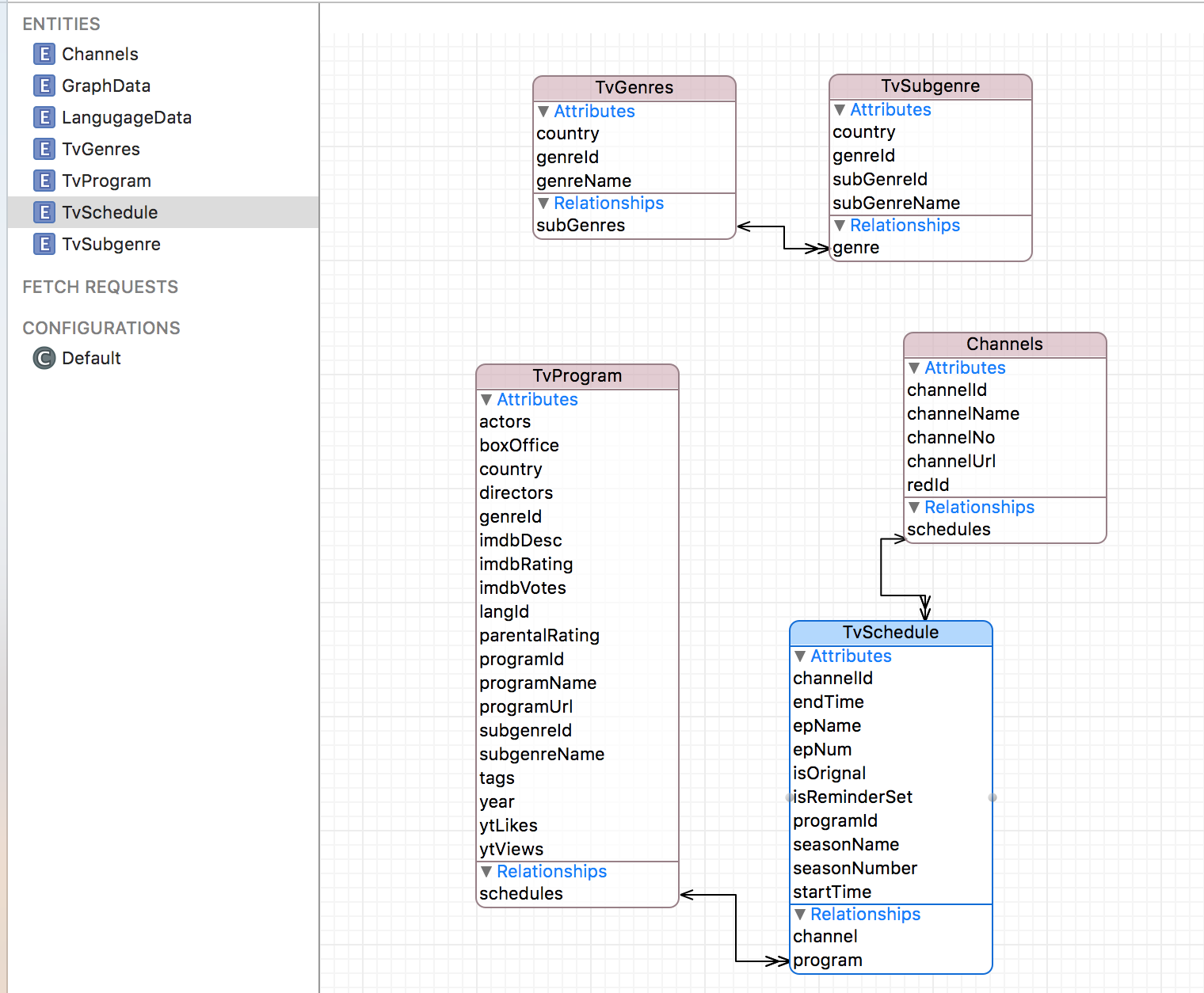

I have five tables, TvGenres, TvSubgenre, TvProgram, Channels, and TvSchedules, related as shown in the image above.

The problem is that all the data is downloaded in sequence, with each step depending on the previous data. Unlike with SQLite, I need to set the relationships between the objects, and to do that I have to search a table again and again to find the related object, which is time-consuming. How can I do this faster?

I have tried two different approaches, but neither works as expected.

First, here is how the download works:

1. I fetch all the channel details based on the user's languages.
2. From the channels, I fetch all the schedules for the next week (a lot of data, around 30k+ records).
3. From the schedule data, I fetch all the program data (again a lot of data).

Approach 1

Download all the data, create object lists from it, and store everything at once after all downloading is done. Setting the relationships still takes time, and worse, the loop now runs twice: first to create all the object lists, then again to store them in Core Data. It still doesn't solve the time-consuming relationship issue.

Approach 2

Download and store one entity at a time: download the channels and store them, then download and store the schedules, then download the programs and store them in Core Data. This part is fine, but channels have a relationship with schedules, and schedules have a relationship with programs. To set the relationship while storing schedules, I also fetch the channel related to each schedule and then set the relationship, and I do the same for programs and schedules. That is what takes the time. How can I fix this, or how should I download and store the data so it is as fast as possible?
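A common way to avoid the per-schedule fetch described in Approach 2 is to fetch every channel a batch needs with a single `IN` fetch request and keep the results in a dictionary keyed by `channelId`, so each schedule's channel is found in memory. This is a minimal sketch under stated assumptions: it builds a tiny in-memory model in code, uses plain `NSManagedObject` with key-value coding instead of generated classes, assumes `channelId` is an Integer 64 attribute, and `ScheduleRecord`, `makeContainer`, `channelsById`, and `insertSchedules` are hypothetical names.

```swift
import CoreData

// Tiny in-memory stand-in for the real model (assumption): Channels(channelId)
// has a to-many "schedules" relationship; TvSchedule(channelId) has a to-one "channel".
func makeContainer() -> NSPersistentContainer {
    func int64Attribute(_ name: String) -> NSAttributeDescription {
        let attribute = NSAttributeDescription()
        attribute.name = name
        attribute.attributeType = .integer64AttributeType
        return attribute
    }
    let channels = NSEntityDescription()
    channels.name = "Channels"
    let schedule = NSEntityDescription()
    schedule.name = "TvSchedule"

    let toChannel = NSRelationshipDescription()
    toChannel.name = "channel"
    toChannel.destinationEntity = channels
    toChannel.maxCount = 1
    let toSchedules = NSRelationshipDescription()
    toSchedules.name = "schedules"
    toSchedules.destinationEntity = schedule
    toSchedules.maxCount = 0 // 0 = no upper limit, i.e. to-many
    toChannel.inverseRelationship = toSchedules
    toSchedules.inverseRelationship = toChannel

    channels.properties = [int64Attribute("channelId"), toSchedules]
    schedule.properties = [int64Attribute("channelId"), toChannel]
    let model = NSManagedObjectModel()
    model.entities = [channels, schedule]

    let container = NSPersistentContainer(name: "Sketch", managedObjectModel: model)
    let store = NSPersistentStoreDescription()
    store.type = NSInMemoryStoreType
    container.persistentStoreDescriptions = [store]
    container.loadPersistentStores { _, error in
        precondition(error == nil, "Could not load store: \(String(describing: error))")
    }
    return container
}

// Hypothetical decoded record, standing in for TvScheduleInformation.
struct ScheduleRecord { let channelId: Int64 }

// ONE fetch for every channel the batch refers to, returned as a dictionary.
func channelsById(_ ids: Set<Int64>, in context: NSManagedObjectContext) throws -> [Int64: NSManagedObject] {
    let request = NSFetchRequest<NSManagedObject>(entityName: "Channels")
    request.predicate = NSPredicate(format: "channelId IN %@", ids.map { NSNumber(value: $0) } as NSArray)
    var lookup = [Int64: NSManagedObject]()
    for channel in try context.fetch(request) {
        if let id = channel.value(forKey: "channelId") as? Int64 {
            lookup[id] = channel
        }
    }
    return lookup
}

// Inserts schedules and links each one to its channel via the in-memory dictionary.
func insertSchedules(_ records: [ScheduleRecord], in context: NSManagedObjectContext) throws {
    let lookup = try channelsById(Set(records.map { $0.channelId }), in: context)
    for record in records {
        let schedule = NSEntityDescription.insertNewObject(forEntityName: "TvSchedule", into: context)
        schedule.setValue(record.channelId, forKey: "channelId")
        schedule.setValue(lookup[record.channelId], forKey: "channel") // nil if the channel is unknown
    }
    try context.save()
}

let container = makeContainer()
let context = container.newBackgroundContext()
var linked = 0
context.performAndWait {
    for id: Int64 in [1, 2] {
        let channel = NSEntityDescription.insertNewObject(forEntityName: "Channels", into: context)
        channel.setValue(id, forKey: "channelId")
    }
    try! context.save()
    try! insertSchedules([ScheduleRecord(channelId: 1), ScheduleRecord(channelId: 2), ScheduleRecord(channelId: 1)],
                         in: context)
    let request = NSFetchRequest<NSManagedObject>(entityName: "TvSchedule")
    request.predicate = NSPredicate(format: "channel != nil")
    linked = try! context.count(for: request)
}
print("Linked schedules: \(linked)")
```

Only one fetch runs per batch, however many schedules the batch contains. Presumably the same pattern would apply when linking programs to schedules by `programId`.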

Here is my current code for storing only the schedules:

```swift
import CoreData

func saveScheduleDataToCoreData(withScheduleList scheduleList: [[String: Any]],
                                completionBlock: @escaping (_ programIds: [String]?) -> Void) {
    let start = DispatchTime.now()
    let context = coreDataStack.managedObjectContext
    var progIds = [String]()

    context.performAndWait {
        for (index, response) in scheduleList.enumerated() {
            // Map the JSON dictionary to a model object, then copy it into a new managed object.
            let schedule = TvScheduleInformation(json: response)
            let scheduleTable = TvSchedule(context: context)
            scheduleTable.channelId = schedule.channelId
            scheduleTable.programId = schedule.programId
            scheduleTable.startTime = schedule.startTime
            scheduleTable.endTime = schedule.endTime
            scheduleTable.day = schedule.day
            scheduleTable.languageId = schedule.languageId
            scheduleTable.isReminderSet = false

            // If I comment out the code below, the time drops significantly, from 5 min to 34.74 s.
            // It runs one fetch request per schedule to find the related channel.
            let tvChannelRequest: NSFetchRequest<Channels> = Channels.fetchRequest()
            tvChannelRequest.predicate = NSPredicate(format: "channelId == %d", schedule.channelId)
            tvChannelRequest.fetchLimit = 1
            do {
                let channelResult = try context.fetch(tvChannelRequest)
                if let channelTable = channelResult.first {
                    scheduleTable.channel = channelTable
                }
            } catch {
                print("Error: \(error)")
            }

            progIds.append(String(schedule.programId))

            // Save after every 1000 schedules.
            if (index + 1) % 1000 == 0 {
                print(index)
                do {
                    try context.save()
                } catch {
                    print("Error saving schedules object context! \(error)")
                }
            }
        }
    }

    let end = DispatchTime.now()
    let nanoTime = end.uptimeNanoseconds - start.uptimeNanoseconds
    print("Saving \(scheduleList.count) Schedules takes \(nanoTime) nano time")

    coreDataStack.saveContext()
    completionBlock(progIds)
}
```
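In the code above, `progIds` gets one entry per schedule, so if several schedules air the same program, its ID appears more than once and is presumably fetched once per occurrence in the next download step. A minimal sketch of removing duplicates while keeping the original order before passing the IDs on; the helper name `uniqueInOrder` and the sample IDs are hypothetical.

```swift
// Returns the IDs in first-seen order with duplicates removed.
// Set.insert reports whether the element was new, which drives the filter.
func uniqueInOrder(_ ids: [String]) -> [String] {
    var seen = Set<String>()
    return ids.filter { seen.insert($0).inserted }
}

let sampleProgIds = ["101", "205", "101", "309", "205"]
print(uniqueInOrder(sampleProgIds)) // ["101", "205", "309"]
```

Using a `Set` for the membership check keeps this linear in the number of IDs, which matters at 30k+ schedules.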

Also, how do I do a proper batch save using an autorelease pool?
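One way to batch, sketched under assumptions: wrap each chunk of inserts in `autoreleasepool`, save, then call `reset()` on the context so the saved objects can be released. Because `reset()` invalidates previously fetched managed objects, the sketch caches channel `NSManagedObjectID`s instead and gets each channel back with `object(with:)`, which does not execute a fetch request. The in-memory model, the batch size of 1000, and the names `makeContainer` and `importSchedules` are illustrative assumptions, not the question's actual code.

```swift
import CoreData

// Tiny in-memory stand-in model (assumption): Channels(channelId) <->> TvSchedule(channelId, channel).
func makeContainer() -> NSPersistentContainer {
    func int64Attribute(_ name: String) -> NSAttributeDescription {
        let attribute = NSAttributeDescription()
        attribute.name = name
        attribute.attributeType = .integer64AttributeType
        return attribute
    }
    let channels = NSEntityDescription()
    channels.name = "Channels"
    let schedule = NSEntityDescription()
    schedule.name = "TvSchedule"
    let toChannel = NSRelationshipDescription()
    toChannel.name = "channel"
    toChannel.destinationEntity = channels
    toChannel.maxCount = 1
    let toSchedules = NSRelationshipDescription()
    toSchedules.name = "schedules"
    toSchedules.destinationEntity = schedule
    toSchedules.maxCount = 0 // to-many
    toChannel.inverseRelationship = toSchedules
    toSchedules.inverseRelationship = toChannel
    channels.properties = [int64Attribute("channelId"), toSchedules]
    schedule.properties = [int64Attribute("channelId"), toChannel]
    let model = NSManagedObjectModel()
    model.entities = [channels, schedule]

    let container = NSPersistentContainer(name: "Sketch", managedObjectModel: model)
    let store = NSPersistentStoreDescription()
    store.type = NSInMemoryStoreType
    container.persistentStoreDescriptions = [store]
    container.loadPersistentStores { _, error in
        precondition(error == nil, "Could not load store: \(String(describing: error))")
    }
    return container
}

// Hypothetical importer: inserts one schedule per element of `channelIds`,
// saving and resetting the context after every `batchSize` inserts.
func importSchedules(channelIds: [Int64], batchSize: Int,
                     channelObjectIDs: [Int64: NSManagedObjectID],
                     context: NSManagedObjectContext) throws {
    precondition(batchSize > 0, "batchSize must be positive")
    var start = 0
    while start < channelIds.count {
        let end = min(start + batchSize, channelIds.count)
        // Autoreleased temporaries created for this batch are released when the pool drains.
        try autoreleasepool {
            for channelId in channelIds[start..<end] {
                let schedule = NSEntityDescription.insertNewObject(forEntityName: "TvSchedule", into: context)
                schedule.setValue(channelId, forKey: "channelId")
                if let objectID = channelObjectIDs[channelId] {
                    // Object IDs stay valid after reset(); object(with:) runs no fetch request.
                    schedule.setValue(context.object(with: objectID), forKey: "channel")
                }
            }
            try context.save()
            context.reset() // forget the saved objects so they can be deallocated
        }
        start = end
    }
}

let container = makeContainer()
let context = container.newBackgroundContext()
var linkedCount = 0
context.performAndWait {
    let channels = (1...3).map { id -> NSManagedObject in
        let channel = NSEntityDescription.insertNewObject(forEntityName: "Channels", into: context)
        channel.setValue(Int64(id), forKey: "channelId")
        return channel
    }
    try! context.save() // object IDs become permanent after this save
    var objectIDs = [Int64: NSManagedObjectID]()
    for channel in channels {
        objectIDs[channel.value(forKey: "channelId") as! Int64] = channel.objectID
    }
    let scheduleChannelIds = (0..<2500).map { Int64($0 % 3 + 1) }
    try! importSchedules(channelIds: scheduleChannelIds, batchSize: 1000,
                         channelObjectIDs: objectIDs, context: context)
    let request = NSFetchRequest<NSManagedObject>(entityName: "TvSchedule")
    request.predicate = NSPredicate(format: "channel != nil")
    linkedCount = try! context.count(for: request)
}
print("Linked schedules: \(linkedCount)")
```

Caching object IDs rather than managed objects is the design choice that makes `reset()` safe here; if the context is never reset, a plain dictionary of managed objects works as well.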

PS: All the material I found on Core Data is expensive (more than 3k), and the free material covers only the basics; even Apple's docs have little code on performance tuning, batch updates, and handling relationships. Thanks in advance for any kind of help.

Is the `channelId` field indexed? – Hemiterpene
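If the per-schedule fetch is kept, an index on `channelId` should make each lookup cheaper on an SQLite store. This is normally added as a fetch index in the Xcode model editor; the sketch below shows the equivalent in code with `NSFetchIndexDescription` (available since iOS 11 / macOS 10.13), assuming `channelId` is an Integer 64 attribute. The index name `byChannelId` and the helper `makeChannelsEntity` are hypothetical.

```swift
import CoreData

// Builds the Channels entity in code and adds a fetch index on channelId.
// The attribute must belong to the entity before it is used in an index element.
func makeChannelsEntity() -> NSEntityDescription {
    let channelId = NSAttributeDescription()
    channelId.name = "channelId"
    channelId.attributeType = .integer64AttributeType

    let entity = NSEntityDescription()
    entity.name = "Channels"
    entity.properties = [channelId]

    let element = NSFetchIndexElementDescription(property: channelId, collationType: .binary)
    entity.indexes = [NSFetchIndexDescription(name: "byChannelId", elements: [element])]
    return entity
}

let channelsEntity = makeChannelsEntity()
print(channelsEntity.indexes.map(\.name)) // ["byChannelId"]
```

An index reduces the cost of each fetch, but 30k+ separate fetch requests still carry per-request overhead, so it complements rather than replaces fetching the channels once up front.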