I have 3 byte arrays in C# that I need to combine into one. What would be the most efficient method to complete this task?

For primitive types (including bytes), use System.Buffer.BlockCopy instead of System.Array.Copy. It's faster.

I timed each of the suggested methods in a loop executed 1 million times using 3 arrays of 10 bytes each. Here are the results:

- New Byte Array using System.Array.Copy - 0.2187556 seconds
- New Byte Array using System.Buffer.BlockCopy - 0.1406286 seconds
- IEnumerable<byte> using C# yield operator - 0.0781270 seconds
- IEnumerable<byte> using LINQ's Concat<> - 0.0781270 seconds

I increased the size of each array to 100 elements and re-ran the test:

- New Byte Array using System.Array.Copy - 0.2812554 seconds
- New Byte Array using System.Buffer.BlockCopy - 0.2500048 seconds
- IEnumerable<byte> using C# yield operator - 0.0625012 seconds
- IEnumerable<byte> using LINQ's Concat<> - 0.0781265 seconds

I increased the size of each array to 1000 elements and re-ran the test:

- New Byte Array using System.Array.Copy - 1.0781457 seconds
- New Byte Array using System.Buffer.BlockCopy - 1.0156445 seconds
- IEnumerable<byte> using C# yield operator - 0.0625012 seconds
- IEnumerable<byte> using LINQ's Concat<> - 0.0781265 seconds

Finally, I increased the size of each array to 1 million elements and re-ran the test, executing each loop only 4000 times:

- New Byte Array using System.Array.Copy - 13.4533833 seconds
- New Byte Array using System.Buffer.BlockCopy - 13.1096267 seconds
- IEnumerable<byte> using C# yield operator - 0 seconds
- IEnumerable<byte> using LINQ's Concat<> - 0 seconds

So, if you need a new byte array, use

byte[] rv = new byte[a1.Length + a2.Length + a3.Length];

System.Buffer.BlockCopy(a1, 0, rv, 0, a1.Length);

System.Buffer.BlockCopy(a2, 0, rv, a1.Length, a2.Length);

System.Buffer.BlockCopy(a3, 0, rv, a1.Length + a2.Length, a3.Length);

But, if you can use an IEnumerable<byte>, DEFINITELY prefer LINQ's Concat<> method. It's only slightly slower than the C# yield operator, but is more concise and more elegant.

IEnumerable<byte> rv = a1.Concat(a2).Concat(a3);

If you have an arbitrary number of arrays and are using .NET 3.5, you can make the System.Buffer.BlockCopy solution more generic like this:

private byte[] Combine(params byte[][] arrays)

{

byte[] rv = new byte[arrays.Sum(a => a.Length)];

int offset = 0;

foreach (byte[] array in arrays) {

System.Buffer.BlockCopy(array, 0, rv, offset, array.Length);

offset += array.Length;

}

return rv;

}

*Note: The above block requires you to add the following namespace at the top for it to work.

using System.Linq;

To Jon Skeet's point regarding iteration of the subsequent data structures (byte array vs. IEnumerable<byte>), I re-ran the last timing test (1 million elements, 4000 iterations), adding a loop that iterates over the full array with each pass:

- New Byte Array using System.Array.Copy - 78.20550510 seconds
- New Byte Array using System.Buffer.BlockCopy - 77.89261900 seconds
- IEnumerable<byte> using C# yield operator - 551.7150161 seconds
- IEnumerable<byte> using LINQ's Concat<> - 448.1804799 seconds

The point is, it is VERY important to understand the efficiency of both the creation and the usage of the resulting data structure. Simply focusing on the efficiency of the creation may overlook the inefficiency associated with the usage. Kudos, Jon.
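The near-zero creation times above follow from LINQ's deferred execution: building the sequence reads no bytes, and the cost is paid only when the sequence is enumerated. A minimal sketch of that effect, assuming a hypothetical helper named `DeferredExecutionDemo.Measure` (not part of the original timing code) that counts how many bytes have been read at each stage:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeferredExecutionDemo
{
    // Hypothetical helper: reports how many bytes had been read right after
    // the sequence was built, and how many after it was fully enumerated.
    public static (int afterBuild, int afterEnumerate) Measure(byte[] a1, byte[] a2)
    {
        int touched = 0;

        // Building the query reads nothing; Concat and Select only wrap the sources.
        IEnumerable<byte> combined = a1.Concat(a2).Select(b => { touched++; return b; });
        int afterBuild = touched;

        // ToArray forces enumeration, so every byte is read here.
        _ = combined.ToArray();
        int afterEnumerate = touched;

        return (afterBuild, afterEnumerate);
    }
}
```

A timing loop that only builds the sequence therefore measures almost nothing, which is why the iteration test tells a different story.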

IEnumerable<byte> usage in your answer shows your limited knowledge of LINQ because you only built expressions but not the result. If you target extreme performance, you must avoid LINQ. Thanks for pointing out the faster execution of System.Buffer.BlockCopy. – Berardo

Many of the answers seem to me to be ignoring the stated requirements:

- The result should be a byte array

- It should be as efficient as possible

These two together rule out a LINQ sequence of bytes - anything with yield is going to make it impossible to get the final size without iterating through the whole sequence.

If those aren't the real requirements of course, LINQ could be a perfectly good solution (or the IList<T> implementation). However, I'll assume that Superdumbell knows what he wants.

(EDIT: I've just had another thought. There's a big semantic difference between making a copy of the arrays and reading them lazily. Consider what happens if you change the data in one of the "source" arrays after calling the Combine (or whatever) method but before using the result - with lazy evaluation, that change will be visible. With an immediate copy, it won't. Different situations will call for different behaviour - just something to be aware of.)
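The semantic difference described in the edit above can be seen in a small sketch. The class and method names (`LazyVersusCopyDemo.Run`) are made up for illustration; the sketch combines two arrays both lazily and eagerly, then changes a source array before reading either result:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LazyVersusCopyDemo
{
    // Hypothetical demo: returns the first byte seen through the lazy
    // sequence and the first byte of the eagerly copied array.
    public static (byte lazyFirst, byte copiedFirst) Run()
    {
        byte[] a1 = { 1, 2 };
        byte[] a2 = { 3, 4 };

        // Lazy: nothing is read from a1 or a2 yet.
        IEnumerable<byte> lazy = a1.Concat(a2);

        // Eager: the bytes are copied into a new array right away.
        byte[] copied = new byte[a1.Length + a2.Length];
        Buffer.BlockCopy(a1, 0, copied, 0, a1.Length);
        Buffer.BlockCopy(a2, 0, copied, a1.Length, a2.Length);

        // Change a source array after combining but before using the result.
        a1[0] = 99;

        // The lazy sequence sees the change; the copy does not.
        return (lazy.First(), copied[0]);
    }
}
```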

Here are my proposed methods - which are very similar to those contained in some of the other answers, certainly :)

public static byte[] Combine(byte[] first, byte[] second)

{

byte[] ret = new byte[first.Length + second.Length];

Buffer.BlockCopy(first, 0, ret, 0, first.Length);

Buffer.BlockCopy(second, 0, ret, first.Length, second.Length);

return ret;

}

public static byte[] Combine(byte[] first, byte[] second, byte[] third)

{

byte[] ret = new byte[first.Length + second.Length + third.Length];

Buffer.BlockCopy(first, 0, ret, 0, first.Length);

Buffer.BlockCopy(second, 0, ret, first.Length, second.Length);

Buffer.BlockCopy(third, 0, ret, first.Length + second.Length,

third.Length);

return ret;

}

public static byte[] Combine(params byte[][] arrays)

{

byte[] ret = new byte[arrays.Sum(x => x.Length)];

int offset = 0;

foreach (byte[] data in arrays)

{

Buffer.BlockCopy(data, 0, ret, offset, data.Length);

offset += data.Length;

}

return ret;

}

Of course the "params" version requires creating an array of the byte arrays first, which introduces extra inefficiency.

params byte[][] to IEnumerable<byte[]>, thus avoiding the need for an array – Leboff

public static T[] Combine<T>(T[] first, T[] second) ? – Upcoming

I took Matt's LINQ example one step further for code cleanliness:

byte[] rv = a1.Concat(a2).Concat(a3).ToArray();

In my case, the arrays are small, so I'm not concerned about performance.

If you simply need a new byte array, then use the following:

byte[] Combine(byte[] a1, byte[] a2, byte[] a3)

{

byte[] ret = new byte[a1.Length + a2.Length + a3.Length];

Array.Copy(a1, 0, ret, 0, a1.Length);

Array.Copy(a2, 0, ret, a1.Length, a2.Length);

Array.Copy(a3, 0, ret, a1.Length + a2.Length, a3.Length);

return ret;

}

Alternatively, if you just need a single IEnumerable, consider using the C# 2.0 yield operator:

IEnumerable<byte> Combine(byte[] a1, byte[] a2, byte[] a3)

{

foreach (byte b in a1)

yield return b;

foreach (byte b in a2)

yield return b;

foreach (byte b in a3)

yield return b;

}

I actually ran into some issues with using Concat... (with arrays of around 10 million elements, it actually crashed).

I found the following to be simple, easy and works well enough without crashing on me, and it works for ANY number of arrays (not just three) (It uses LINQ):

public static byte[] ConcatByteArrays(params byte[][] arrays)

{

return arrays.SelectMany(x => x).ToArray();

}

The MemoryStream class does this job pretty nicely for me. I couldn't get the Buffer class to run as fast as MemoryStream.

using (MemoryStream ms = new MemoryStream())

{

ms.Write(BitConverter.GetBytes(22),0,4);

ms.Write(BitConverter.GetBytes(44),0,4);

byte[] combined = ms.ToArray(); // keep the result instead of discarding it

}

Almost 15 years ago this was asked... and now I'm adding yet another answer to it... hopefully to try to bring it up to date with current .NET developments.

The OP requirements were (as perfectly stated and addressed by Jon Skeet's answer) :

- The result should be a byte array

- It should be as efficient as possible

Forcing the result to be a byte[] avoids answers with LINQ that didn't materialize it (e.g., didn't actually perform the "byte array combining") due to LINQ's deferred execution (a common pitfall when measuring performance).

To find the most efficient way we have to measure it. And it's not trivial because we have to measure different scenarios which depend on the amount of data and also the runtime we are targeting. To help with all that BenchmarkDotNet comes to the rescue!

At the time this was answered, Buffer.BlockCopy was the fastest solution, but things may have changed since then. The .NET team is working diligently at optimizing hot code paths in the newest .NET releases, including many LINQ optimizations.

The three methods we'll be measuring are: Buffer.BlockCopy, Array.Copy and LINQ's Enumerable.SelectMany. You may be surprised (I was):

public byte[] CombineBlockCopy(params byte[][] arrays) {

byte[] combined = new byte[arrays.Sum(x => x.Length)];

int offset = 0;

foreach (byte[] array in arrays) {

Buffer.BlockCopy(array, 0, combined, offset, array.Length);

offset += array.Length;

}

return combined;

}

public byte[] CombineArrayCopy(params byte[][] arrays) {

byte[] combined = new byte[arrays.Sum(x => x.Length)];

int offset = 0;

foreach (byte[] array in arrays) {

Array.Copy(array, 0, combined, offset, array.Length);

offset += array.Length;

}

return combined;

}

public byte[] CombineSelectMany(params byte[][] arrays)

=> arrays.SelectMany(x => x).ToArray();

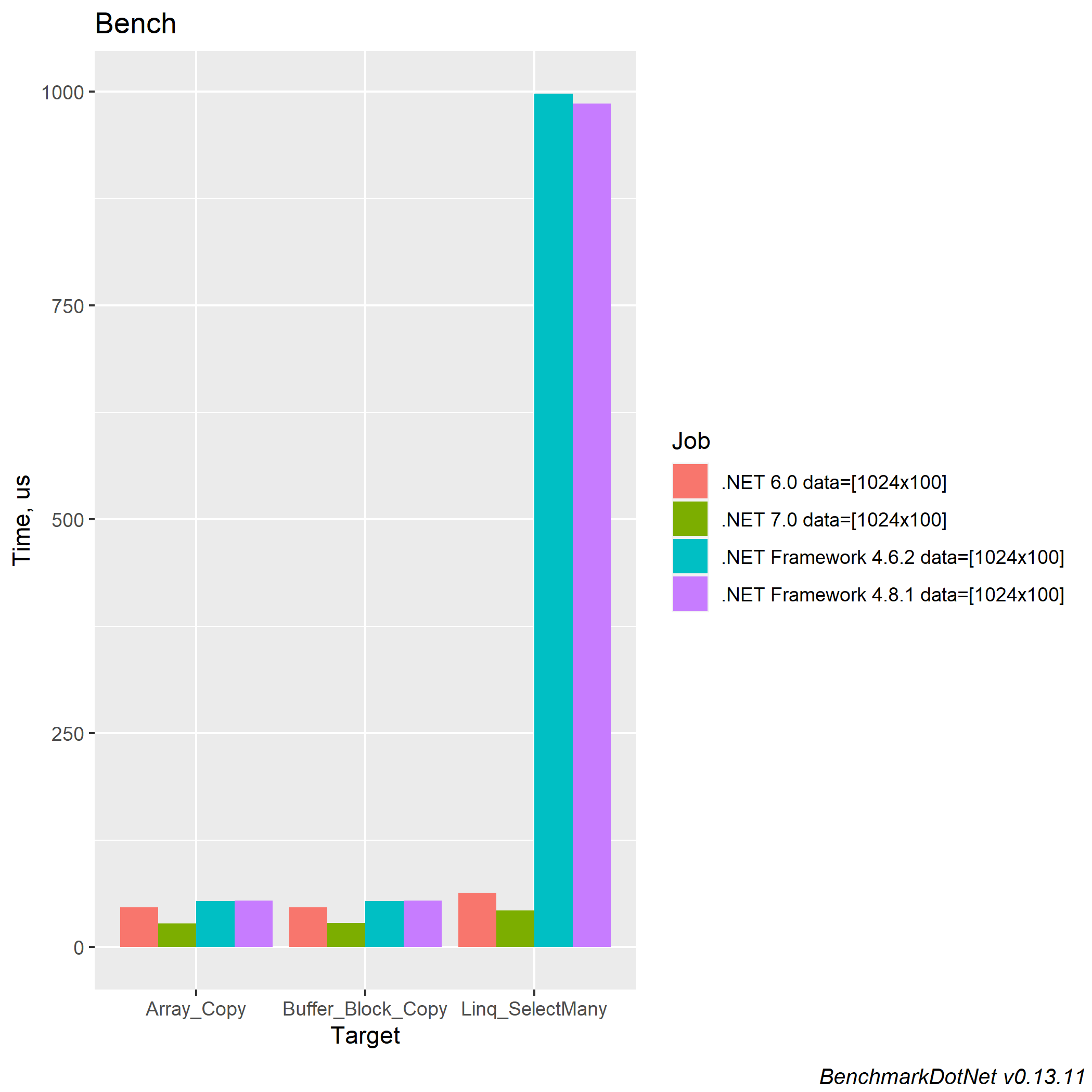

Small Arrays

Combining 1,024 arrays of 100 elements:

We can see that there were HUGE improvements in performance already in .NET 6, with more optimizations still in .NET 7, which makes LINQ totally fine in this scenario, while in .NET Framework LINQ was way too slow for any array combining job :-)

Note: Since there's little difference in .NET Framework 4.6.2 relative to 4.8.1 I'll omit 4.6.2 results from now on.

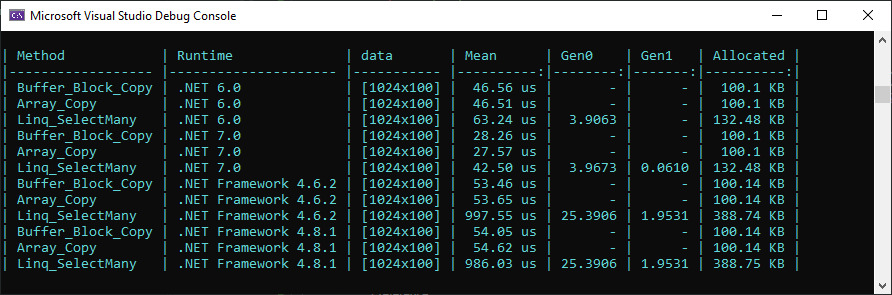

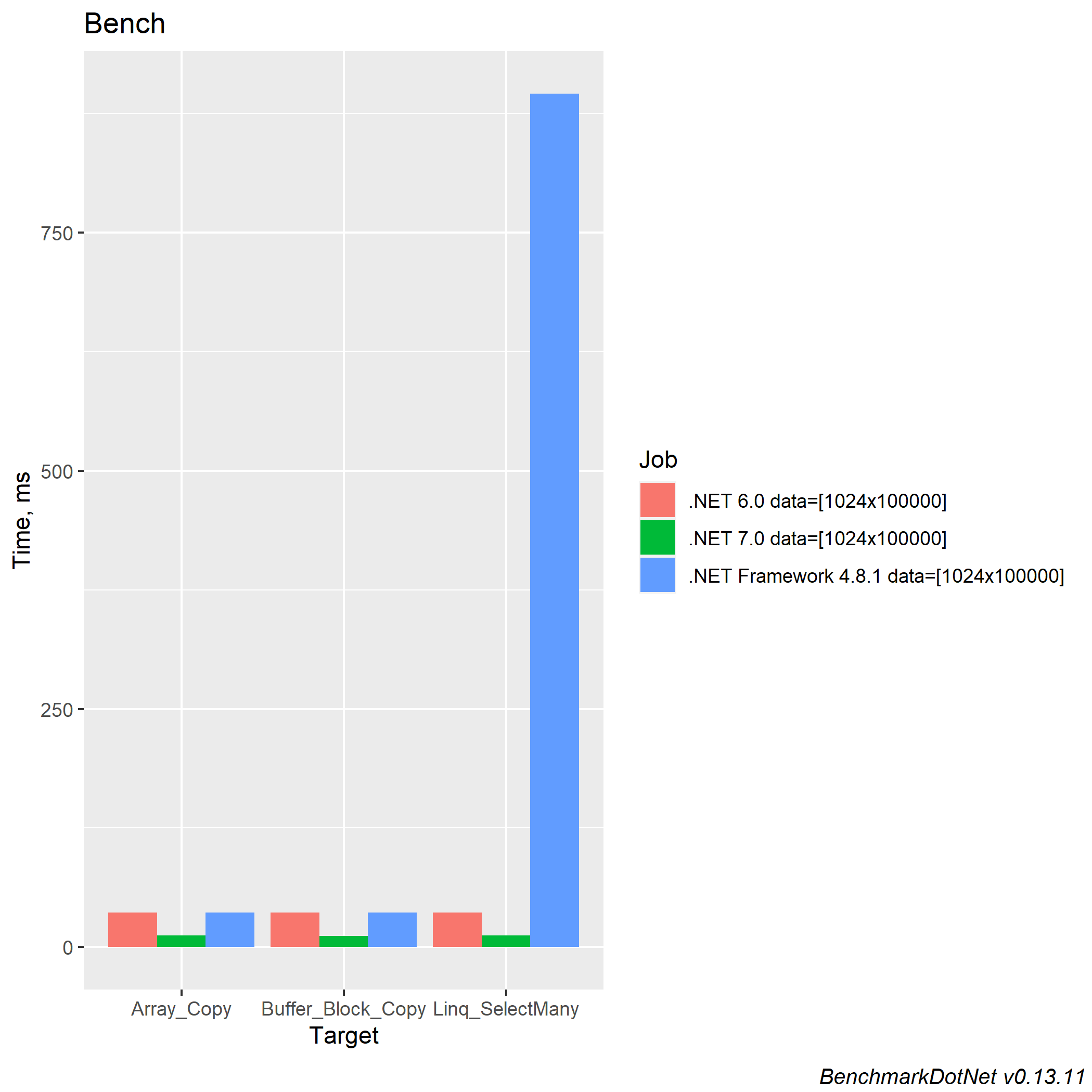

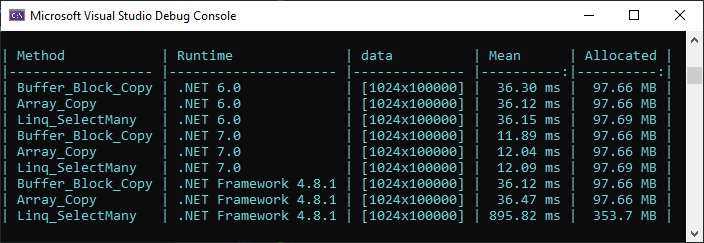

Medium Arrays

Combining 1,024 arrays of 100,000 elements:

With larger arrays the results are even more obvious. LINQ in .NET Framework should not even be considered for any performance-sensitive code, while in .NET 6 and 7 it performs as well as the other methods (and even outperforms them sometimes - although it's inside the benchmark's margin of error).

In .NET 6 all three methods perform the same (within the error margin). Array.Copy and Buffer.BlockCopy perform as well as in .NET Framework 4.8.1.

In .NET 7 all methods are significantly faster than even in .NET 6. LINQ's solution is as good as the good oldies Array.Copy and Buffer.BlockCopy. So, if you're targeting .NET 7 or above, the LINQ solution performs on par with Buffer.BlockCopy and Array.Copy while being very succinct and self-describing code.

Conclusion

While Array.Copy and Buffer.BlockCopy used to be the kings for this kind of byte crunching, current optimizations to the runtime brought the LINQ-based solution to the same level of performance for larger arrays (and almost the same performance for small arrays).

Despite common wisdom indicating that Buffer.BlockCopy should have performed better than Array.Copy in all tested scenarios, that was not the case. The difference in performance can go either way and is within the error margin. This "wisdom" may have been true for older versions of the Framework (always measure your code's performance for the target runtime and architecture!).

As always, happy benchmarking!

Benchmark Source Code

NOTE: I'll set up a GitHub repository with the full solution / project soon. For now, here is the full source for the benchmarks for small, medium and large arrays.

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Jobs;

using BenchmarkDotNet.Running;

BenchmarkRunner.Run<Bench>();

[MemoryDiagnoser]

[HideColumns("Job", "Error", "StdDev", "Median")]

[SimpleJob(RuntimeMoniker.Net462)]

[SimpleJob(RuntimeMoniker.Net481)]

[SimpleJob(RuntimeMoniker.Net60)]

[SimpleJob(RuntimeMoniker.Net70)]

[RPlotExporter]

public class Bench

{

static List<byte[]> Build(int size, int length) {

var list = new List<byte[]>();

for (int i = 0; i < size; i++)

list.Add(new byte[length]);

return list;

}

static readonly List<byte[]> smallArrays = Build(1024, 100);

static readonly List<byte[]> mediumArrays = Build(1024, 100_000);

static readonly List<byte[]> largeArrays = Build(1024, 1_000_000);

public IEnumerable<object> Arrays() {

yield return new Data(smallArrays);

yield return new Data(mediumArrays);

yield return new Data(largeArrays);

}

public class Data {

public Data(IEnumerable<byte[]> arrays) { Arrays = arrays; }

public IEnumerable<byte[]> Arrays { get; }

public override string ToString() => $"[{Arrays.Count()}x{Arrays.First().Length}]";

}

[Benchmark, ArgumentsSource(nameof(Arrays))]

public byte[] Buffer_Block_Copy(Data data) {

var arrays = data.Arrays;

byte[] combined = new byte[arrays.Sum(x => x.Length)];

int offset = 0;

foreach (byte[] array in arrays) {

Buffer.BlockCopy(array, 0, combined, offset, array.Length);

offset += array.Length;

}

return combined;

}

[Benchmark, ArgumentsSource(nameof(Arrays))]

public byte[] Array_Copy(Data data) {

var arrays = data.Arrays;

byte[] combined = new byte[arrays.Sum(x => x.Length)];

int offset = 0;

foreach (byte[] array in arrays) {

Array.Copy(array, 0, combined, offset, array.Length); // this benchmark is meant to measure Array.Copy, not Buffer.BlockCopy

offset += array.Length;

}

return combined;

}

[Benchmark, ArgumentsSource(nameof(Arrays))]

public byte[] Linq_SelectMany(Data data) {

byte[] combined = data.Arrays.SelectMany(x => x).ToArray();

return combined;

}

}

public static byte[] Concat(params byte[][] arrays) {

using (var mem = new MemoryStream(arrays.Sum(a => a.Length))) {

foreach (var array in arrays) {

mem.Write(array, 0, array.Length);

}

return mem.ToArray();

}

}

public static bool MyConcat<T>(ref T[] base_arr, ref T[] add_arr)

{

try

{

int base_size = base_arr.Length;

int size_T = System.Runtime.InteropServices.Marshal.SizeOf(base_arr[0]);

Array.Resize(ref base_arr, base_size + add_arr.Length);

Buffer.BlockCopy(add_arr, 0, base_arr, base_size * size_T, add_arr.Length * size_T);

}

catch (IndexOutOfRangeException ioor)

{

MessageBox.Show(ioor.Message);

return false;

}

return true;

}

where T : struct), but - not being an expert in the innards of the CLR - I couldn't say whether you might get exceptions on certain structs as well (e.g. if they contain reference type fields). – Chalcidice

You can use generics to combine arrays. The following code can easily be expanded to three arrays. This way you never need to duplicate code for different types of arrays. Some of the above answers seem overly complex to me.

private static T[] CombineTwoArrays<T>(T[] a1, T[] a2)

{

T[] arrayCombined = new T[a1.Length + a2.Length];

Array.Copy(a1, 0, arrayCombined, 0, a1.Length);

Array.Copy(a2, 0, arrayCombined, a1.Length, a2.Length);

return arrayCombined;

}

/// <summary>

/// Combine two Arrays with offset and count

/// </summary>

/// <param name="src1"></param>

/// <param name="offset1"></param>

/// <param name="count1"></param>

/// <param name="src2"></param>

/// <param name="offset2"></param>

/// <param name="count2"></param>

/// <returns></returns>

public static T[] Combine<T>(this T[] src1, int offset1, int count1, T[] src2, int offset2, int count2)

=> Enumerable.Range(0, count1 + count2).Select(a => (a < count1) ? src1[offset1 + a] : src2[offset2 + a - count1]).ToArray();

Here's a generalization of the answer provided by @Jon Skeet. It is basically the same, only it is usable for any type of array, not only bytes:

public static T[] Combine<T>(T[] first, T[] second)

{

T[] ret = new T[first.Length + second.Length];

Buffer.BlockCopy(first, 0, ret, 0, first.Length);

Buffer.BlockCopy(second, 0, ret, first.Length, second.Length);

return ret;

}

public static T[] Combine<T>(T[] first, T[] second, T[] third)

{

T[] ret = new T[first.Length + second.Length + third.Length];

Buffer.BlockCopy(first, 0, ret, 0, first.Length);

Buffer.BlockCopy(second, 0, ret, first.Length, second.Length);

Buffer.BlockCopy(third, 0, ret, first.Length + second.Length,

third.Length);

return ret;

}

public static T[] Combine<T>(params T[][] arrays)

{

T[] ret = new T[arrays.Sum(x => x.Length)];

int offset = 0;

foreach (T[] data in arrays)

{

Buffer.BlockCopy(data, 0, ret, offset, data.Length);

offset += data.Length;

}

return ret;

}
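One caveat with the overloads above: Buffer.BlockCopy takes its offsets and count in bytes and throws an ArgumentException for arrays of non-primitive types, so passing element counts only works as written when T is byte. A minimal sketch of a generic alternative, assuming a hypothetical `ArrayCombiner` class and swapping in Array.Copy (which works in element counts) rather than the BlockCopy approach of this answer:

```csharp
using System;

public static class ArrayCombiner
{
    // Hypothetical generic variant: concatenates any number of arrays of T.
    public static T[] Combine<T>(params T[][] arrays)
    {
        // First pass: compute the total number of elements.
        int total = 0;
        foreach (T[] data in arrays)
            total += data.Length;

        T[] ret = new T[total];
        int offset = 0;
        foreach (T[] data in arrays)
        {
            // Array.Copy takes element counts, so no sizeof(T) is needed,
            // and it accepts reference types such as string as well.
            Array.Copy(data, 0, ret, offset, data.Length);
            offset += data.Length;
        }
        return ret;
    }
}
```

For example, `ArrayCombiner.Combine(new[] { "a", "b" }, new[] { "c" })` yields a three-element string array, a case where Buffer.BlockCopy would throw.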

sizeof(...) and multiply that by the number of elements you want to copy, but sizeof can't be used with a generic type. It is possible - for some types - to use Marshal.SizeOf(typeof(T)), but you'll get runtime errors with certain types (e.g. strings). Someone with more thorough knowledge of the inner workings of CLR types will be able to point out all the possible traps here. Suffice to say that writing a generic array concatenation method [using BlockCopy] isn't trivial. – Chalcidice

All you need to do is pass a list of byte arrays, and this function will return the merged byte array. Note that it relies on iTextSharp and treats each byte array as a PDF document, so it merges PDFs rather than concatenating arbitrary bytes. This is the best solution, I think :).

public static byte[] CombineMultipleByteArrays(List<byte[]> lstByteArray)

{

using (var ms = new MemoryStream())

{

using (var doc = new iTextSharp.text.Document())

{

using (var copy = new PdfSmartCopy(doc, ms))

{

doc.Open();

foreach (var p in lstByteArray)

{

using (var reader = new PdfReader(p))

{

copy.AddDocument(reader);

}

}

doc.Close();

}

}

return ms.ToArray();

}

}

Concat is the right answer, but for some reason a hand-rolled thing is getting the most votes. If you like that answer, perhaps you'd like this more general solution even more:

IEnumerable<byte> Combine(params byte[][] arrays)

{

foreach (byte[] a in arrays)

foreach (byte b in a)

yield return b;

}

which would let you do things like:

byte[] c = Combine(new byte[] { 0, 1, 2 }, new byte[] { 3, 4, 5 }).ToArray();


Concat method: IEnumerable<byte> arrays = array1.Concat(array2).Concat(array3); – Bootblack