I faced the same issue with a string equality check: one of the strings being compared contained characters outside 7-bit ASCII (codes 128-255). For example, it had a non-breaking space (hex A0) where the other string had a regular space (hex 20).

To display such spaces in HTML, I used the following spacing entities. Each character and its UTF-8 bytes (as signed Java `byte` values) are:

- `&emsp;` (em space, very wide, U+2003): {-30, -128, -125}
- `&ensp;` (en space, somewhat wide, U+2002): {-30, -128, -126}
- `&thinsp;` (thin space, narrow, U+2009): {-30, -128, -119}
- a regular, non-HTML space (U+0020): {32}

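```java
// s2 uses em spaces (U+2003), which render exactly like regular spaces
String s1 = "My Sample Space Data", s2 = "My\u2003Sample\u2003Space\u2003Data";

System.out.format("S1: %s%n", java.util.Arrays.toString(s1.getBytes(java.nio.charset.StandardCharsets.UTF_8)));
System.out.format("S2: %s%n", java.util.Arrays.toString(s2.getBytes(java.nio.charset.StandardCharsets.UTF_8)));
```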

Output in bytes:

```
S1: [77, 121, 32, 83, 97, 109, 112, 108, 101, 32, 83, 112, 97, 99, 101, 32, 68, 97, 116, 97]
S2: [77, 121, -30, -128, -125, 83, 97, 109, 112, 108, 101, -30, -128, -125, 83, 112, 97, 99, 101, -30, -128, -125, 68, 97, 116, 97]
```
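Comparing byte dumps by eye gets tedious for longer strings. A minimal sketch for locating the first differing character and printing its code point, so that invisible differences show up as `U+0020` vs `U+2003`; the class and method names (`FindMismatch`, `firstMismatch`, `describe`) are hypothetical:

```java
class FindMismatch {
    /** Returns the index of the first differing char, or -1 if both strings are equal. */
    static int firstMismatch(String a, String b) {
        int n = Math.min(a.length(), b.length());
        for (int i = 0; i < n; i++) {
            if (a.charAt(i) != b.charAt(i)) {
                return i;
            }
        }
        // One string is a prefix of the other: they differ where the shorter one ends
        return a.length() == b.length() ? -1 : n;
    }

    /** Describes the char at index i as U+XXXX, or notes that the string has ended. */
    static String describe(String s, int i) {
        return i < s.length() ? String.format("U+%04X", (int) s.charAt(i)) : "end of string";
    }

    public static void main(String[] args) {
        String s1 = "My Sample Space Data";
        String s2 = "My\u2003Sample\u2003Space\u2003Data"; // em spaces (U+2003)
        int i = firstMismatch(s1, s2);
        if (i < 0) {
            System.out.println("Strings are equal");
        } else {
            System.out.printf("First difference at index %d: %s vs %s%n",
                    i, describe(s1, i), describe(s2, i));
        }
    }
}
```

For the sample strings this reports index 2, where s1 has U+0020 and s2 has U+2003.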

The code below shows different space characters and their UTF-8 byte codes (see the Wikipedia article List_of_Unicode_characters):

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// em space (very wide), en space, regular space, no-break space, separated by commas
String spacingEntities = "\u2003,\u2002, ,\u00A0";
System.out.println("Space String :" + spacingEntities);

// Same bytes as spacingEntities.getBytes(StandardCharsets.UTF_8).
// Avoid Charset.encode(s).array(): the backing array may contain extra trailing bytes.
byte[] byteArray = {-30, -128, -125, 44, -30, -128, -126, 44, 32, 44, -62, -96};
System.out.println("Bytes:" + Arrays.toString(byteArray));

// Decoding with a Charset constant needs no UnsupportedEncodingException handling
System.out.format("Bytes to String[%s]%n", new String(byteArray, StandardCharsets.UTF_8));
```
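Instead of hard-coding byte arrays, the JDK can print each space character's official Unicode name together with its UTF-8 bytes. A small sketch using `Character.getName` and `Character.toChars`; the class and method names are hypothetical:

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

class SpaceBytes {
    /** UTF-8 bytes of a single code point, as signed Java bytes. */
    static byte[] utf8Bytes(int codePoint) {
        return new String(Character.toChars(codePoint)).getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // em space, en space, thin space, regular space, no-break space
        int[] spaces = {0x2003, 0x2002, 0x2009, 0x0020, 0x00A0};
        for (int cp : spaces) {
            // Character.getName returns the Unicode name, e.g. "EM SPACE"
            System.out.printf("U+%04X %-16s %s%n",
                    cp, Character.getName(cp), Arrays.toString(utf8Bytes(cp)));
        }
    }
}
```

This makes the difference obvious: U+0020 is the single byte 32, U+00A0 is two bytes {-62, -96}, and the U+200x spaces are three bytes each.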

➩ Using unidecode (ASCII transliterations of Unicode strings for Java):

```java
String initials = Unidecode.decode(s2);
```

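➩ Using Guava (Google Core Libraries for Java):

```java
// CharMatcher.WHITESPACE is deprecated in newer Guava versions; use CharMatcher.whitespace()
String replaceFrom = CharMatcher.whitespace().replaceFrom(s2, " ");
```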
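Without Guava, note that the JDK's `Character.isWhitespace` deliberately excludes the no-break spaces (U+00A0, U+2007, U+202F), so it would miss exactly the character that caused my problem. A minimal JDK-only sketch that also checks `Character.isSpaceChar`; the names `WhitespaceCheck` and `normalizeSpaces` are hypothetical, and this is an approximation rather than a reimplementation of Guava's matcher:

```java
class WhitespaceCheck {
    /** Replaces every Java whitespace char and every Unicode space char with ' '. */
    static String normalizeSpaces(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            // isWhitespace covers tabs/newlines; isSpaceChar adds U+00A0, U+2007, U+202F
            sb.append(Character.isWhitespace(c) || Character.isSpaceChar(c) ? ' ' : c);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(Character.isWhitespace('\u00A0')); // no-break space: false
        System.out.println(Character.isSpaceChar('\u00A0'));  // no-break space: true
        System.out.println(normalizeSpaces("My\u2003Sample\u00A0Space\tData"));
    }
}
```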

To URL-encode the spaces, you can also use the Guava library:

```java
String encodedString = UrlEscapers.urlFragmentEscaper().escape(inputString);
```
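When you look at encoded output, keep in mind that a no-break space in UTF-8 is `%C2%A0`, not the single-byte Latin-1 code `%A0`. A sketch with the JDK's `URLEncoder` (Java 10+ overload taking a `Charset`). This is form encoding (`application/x-www-form-urlencoded`), so a regular space becomes `+`, which differs from Guava's fragment escaper; the class name is hypothetical:

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class EncodeSpaces {
    /** Form-encodes s: ' ' becomes '+', other unsafe bytes become %XX (UTF-8). */
    static String formEncode(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(formEncode("a b"));      // regular space
        System.out.println(formEncode("a\u00A0b")); // no-break space
        System.out.println(formEncode("a\u2003b")); // em space
    }
}
```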

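➩ To overcome this problem, I used String.replaceAll() with a regular expression:

```java
// \p{Zs}: any Unicode space separator (e.g. U+0020, U+00A0, U+2003);
// \p{Z} additionally matches line and paragraph separators
s2 = s2.replaceAll("\\p{Zs}", " ");

// Alternatively, replace every non-ASCII character (accented letters too) with a space
s2 = s2.replaceAll("[^\\p{ASCII}]", " ");

// Or replace only the no-break space (U+00A0)
s2 = s2.replace("\u00A0", " ");
```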
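The three replacements differ in how much they touch, so a self-contained sketch wrapping each in a helper (hypothetical names) makes the trade-off testable. `\p{Zs}` fixes all space variants, `[^\p{ASCII}]` is lossy because it also blanks out accented letters, and the targeted replace leaves other spaces such as U+2003 alone:

```java
class RegexSpaces {
    /** Maps every Unicode space separator (category Zs) to U+0020. */
    static String spaceSeparatorsToSpace(String s) {
        return s.replaceAll("\\p{Zs}", " ");
    }

    /** Replaces every non-ASCII char with a space; also removes accented letters. */
    static String nonAsciiToSpace(String s) {
        return s.replaceAll("[^\\p{ASCII}]", " ");
    }

    /** Replaces only the no-break space (U+00A0). */
    static String nbspToSpace(String s) {
        return s.replace('\u00A0', ' ');
    }

    public static void main(String[] args) {
        String s2 = "My\u2003Sample\u00A0Space Data";
        System.out.println("My Sample Space Data".equals(spaceSeparatorsToSpace(s2))); // true
        System.out.println("My Sample Space Data".equals(nbspToSpace(s2)));            // false
    }
}
```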

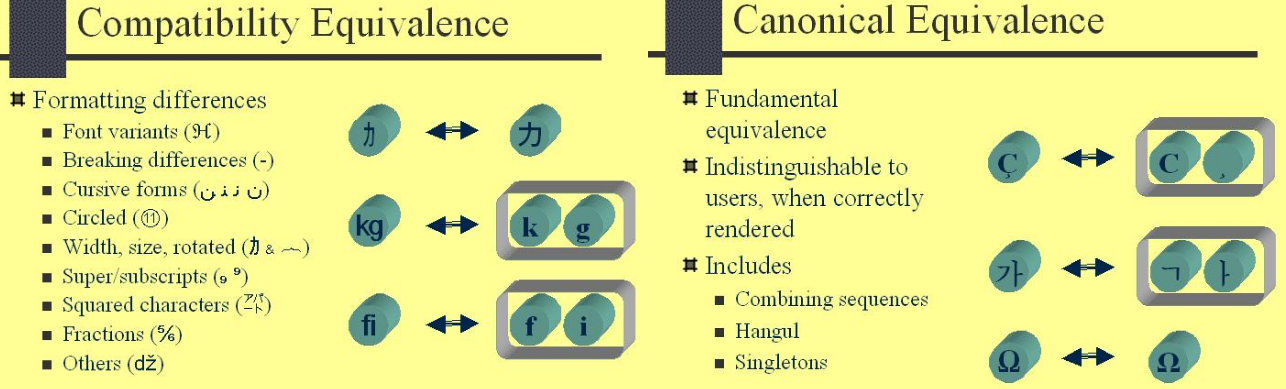

➩ Using java.text.Normalizer with Normalizer.Form:

This enum provides constants for the four Unicode normalization forms described in Unicode Standard Annex #15 (Unicode Normalization Forms), which are used by the two `Normalizer` methods `normalize` and `isNormalized`.


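```java
// NFKC maps compatibility spaces such as U+00A0 and U+2003 to a regular space (U+0020)
s2 = Normalizer.normalize(s2, Normalizer.Form.NFKC);
```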
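A runnable sketch of the NFKC approach; the class name is hypothetical. Only the compatibility forms (NFKC/NFKD) fold these spaces; NFC leaves U+2003 untouched. NFKC also rewrites other compatibility characters (for example the ligature U+FB01 becomes "fi"), so it changes more than just spaces:

```java
import java.text.Normalizer;

class NfkcSpaces {
    /** Applies Unicode compatibility composition (NFKC). */
    static String nfkc(String s) {
        return Normalizer.normalize(s, Normalizer.Form.NFKC);
    }

    public static void main(String[] args) {
        String s1 = "My Sample Space Data";
        // em space, no-break space, thin space
        String s2 = "My\u2003Sample\u00A0Space\u2009Data";
        System.out.println(s1.equals(s2));       // false
        System.out.println(s1.equals(nfkc(s2))); // true
    }
}
```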

Testing a sample string with the different approaches (Unidecode, Normalizer, StringUtils):

```java
String strUni = "Tĥïŝ ĩš â fůňķŷ Šťŕĭńġ Æ,Ø,Ð,ß";

// Unidecode transliterates everything: This is a funky String AE,O,D,ss
String initials = Unidecode.decode(strUni);

// NFD + removing combining marks strips the accents but keeps Æ, Ø, Ð, ß:
// This is a funky String Æ,Ø,Ð,ß
String temp = Normalizer.normalize(strUni, Normalizer.Form.NFD);
Pattern pattern = Pattern.compile("\\p{InCombiningDiacriticalMarks}+");
temp = pattern.matcher(temp).replaceAll("");

// Apache Commons Lang strips accents in a similar way
String input = org.apache.commons.lang3.StringUtils.stripAccents(strUni);
```
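The Normalizer approach needs no third-party library, so it can be wrapped in a reusable, self-contained helper. A sketch (hypothetical names `StripAccents` and `stripAccents`) that precompiles the pattern once:

```java
import java.text.Normalizer;
import java.util.regex.Pattern;

class StripAccents {
    // Combining marks live in the Unicode block U+0300..U+036F
    private static final Pattern MARKS = Pattern.compile("\\p{InCombiningDiacriticalMarks}+");

    /** Decomposes accented letters (NFD) and drops the separated accent marks. */
    static String stripAccents(String s) {
        String decomposed = Normalizer.normalize(s, Normalizer.Form.NFD);
        return MARKS.matcher(decomposed).replaceAll("");
    }

    public static void main(String[] args) {
        String strUni = "Tĥïŝ ĩš â fůňķŷ Šťŕĭńġ Æ,Ø,Ð,ß";
        System.out.println(stripAccents(strUni));
    }
}
```

Letters such as Æ, Ø, Ð and ß have no canonical decomposition, so they pass through unchanged; that is where a transliteration library like Unidecode does more.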

In my tests Unidecode gave the best result, so my final code is:

```java
public static void main(String[] args) {
    // s2 uses em spaces (U+2003) instead of regular spaces
    String s1 = "My Sample Space Data", s2 = "My\u2003Sample\u2003Space\u2003Data";

    // Unidecode comes from the third-party unidecode library
    String initials = Unidecode.decode(s2);

    // no-break space = %A0, comma = %2C, space = %20 (Latin-1 codes, see http://www.ascii-code.com/)
    if (s1.equals(s2)) {
        System.out.println("Equal Unicode Strings");
    } else if (s1.equals(initials)) {
        System.out.println("Equal Non Unicode Strings");
    } else {
        System.out.println("Not Equal");
    }
}
```
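If you would rather not add a dependency just for the comparison, a JDK-only alternative is to bring both strings into one canonical form before calling `equals`. This is a sketch under the assumption that only the kind of space (not accents) should be ignored; `SpaceInsensitiveEquals`, `canonical` and `equalsIgnoringSpaceKind` are hypothetical names:

```java
import java.text.Normalizer;

class SpaceInsensitiveEquals {
    /** NFKC folds compatibility spaces; the regex maps any remaining Zs char to ' '. */
    static String canonical(String s) {
        String n = Normalizer.normalize(s, Normalizer.Form.NFKC);
        return n.replaceAll("\\p{Zs}", " ");
    }

    /** True if a and b differ at most in which Unicode space characters they use. */
    static boolean equalsIgnoringSpaceKind(String a, String b) {
        return canonical(a).equals(canonical(b));
    }

    public static void main(String[] args) {
        String s1 = "My Sample Space Data";
        String s2 = "My\u2003Sample\u00A0Space\u2009Data";
        System.out.println(equalsIgnoringSpaceKind(s1, s2) ? "Equal" : "Not Equal");
    }
}
```

Unlike Unidecode, this keeps accented letters intact, so "café" and "cafe" are still reported as different.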