In the Azure Pipelines documentation, I read the following:

> Each agent can run only one job at a time. To run multiple jobs in parallel you must configure multiple agents.
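For reference, here is a minimal sketch of a pipeline with two jobs. It assumes a self-hosted agent pool named `Default`; the pool name and the job names `Build` and `Test` are placeholders. Without `dependsOn`, jobs in the same stage may run in parallel if enough agents are free. With `dependsOn`, the second job waits for the first. Each job is sent to the pool on its own, so which agent picks it up depends on which agents in the pool are available at that moment.

```yaml
# Sketch only: pool and job names are placeholders.
pool:
  name: Default            # assumed self-hosted agent pool

jobs:
- job: Build
  steps:
  - script: echo "Building on agent $(Agent.Name)"

- job: Test
  dependsOn: Build         # wait for Build; remove this line to allow parallel runs
  steps:
  - script: echo "Testing on agent $(Agent.Name)"
```

Printing `$(Agent.Name)` in each job is a simple way to see which agent actually ran it.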

> When you run a pipeline on a self-hosted agent, by default, none of the sub-directories are cleaned in between two consecutive runs. As a result, you can do incremental builds and deployments, provided that tasks are implemented to make use of that. You can override this behavior using the workspace setting on the job.
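The workspace setting mentioned above is a job-level property. A minimal sketch, again assuming a placeholder self-hosted pool `Default` and a hypothetical job `Build`: `clean: all` deletes the whole `$(Pipeline.Workspace)` directory before the job starts, while `outputs` and `resources` clean narrower parts of it (the binaries directory and the sources directory, respectively).

```yaml
jobs:
- job: Build
  pool:
    name: Default          # assumed self-hosted agent pool
  workspace:
    clean: all             # other options: outputs | resources
  steps:
  - script: echo "Workspace for this run is $(Pipeline.Workspace)"
```

This setting only matters on self-hosted agents; Microsoft-hosted agents start from a fresh machine for every job.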

> Pipeline artifacts provide a way to share files between stages in a pipeline or between different pipelines. They are typically the output of a build process that needs to be consumed by another job or be deployed.
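The usual artifact hand-off between two jobs looks roughly like the sketch below. It assumes a self-hosted Linux agent pool named `Default` (because of `mkdir -p` and `cat`); the artifact name `buildOutput`, the file `result.txt`, and the job names are hypothetical. The first job publishes a folder with the `publish` step, and the second job fetches it with the `download` step.

```yaml
pool:
  name: Default                    # assumed self-hosted Linux agent pool

jobs:
- job: Build
  steps:
  - script: |
      mkdir -p $(Build.ArtifactStagingDirectory)/out
      echo "hello" > $(Build.ArtifactStagingDirectory)/out/result.txt
    displayName: Generate files
  - publish: $(Build.ArtifactStagingDirectory)/out
    artifact: buildOutput          # hypothetical artifact name

- job: Consume
  dependsOn: Build                 # run only after Build has published
  steps:
  - download: current              # artifact from the current pipeline run
    artifact: buildOutput
  # the download step places the files under $(Pipeline.Workspace)/buildOutput
  - script: cat $(Pipeline.Workspace)/buildOutput/result.txt
```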

As a beginner, I have some questions after reading this:

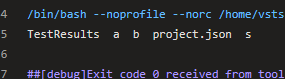

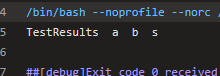

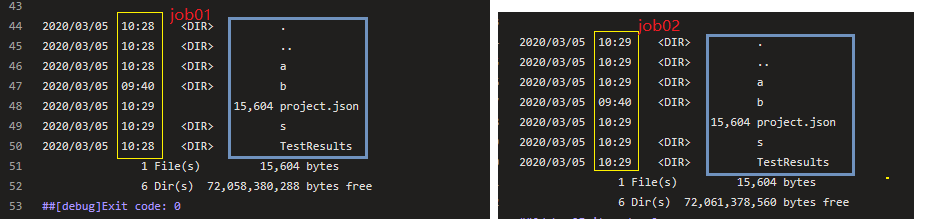

- If I have two jobs in an `azure-pipelines.yaml` (the second job runs after the first), will both jobs run on the same agent? Do different jobs in the same pipeline share the same workspace, which can be referenced via the `Pipeline.Workspace` variable? (It is clear that running jobs in parallel would need multiple agents.)
- The first job generates some files in one of its steps. Is it possible to consume those files in the second job without using artifacts? (Normally, with artifacts, the first job publishes an artifact and the second job downloads it.)

Can someone please help me clear up these questions?