TL;DR

I tested with other image processing libraries (scikit-image, Pillow and Matlab) and none of them returns the expected result.

In my opinion, this behavior is more likely a consequence of how these libraries implement bi-linear interpolation efficiently, or of a convention, than a bug.

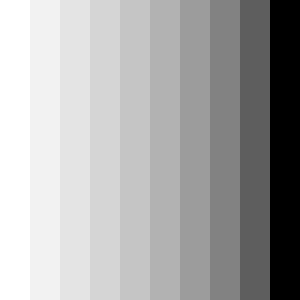

I have posted sample code that resizes an image with bi-linear interpolation and outputs the expected result (please double-check it, as I am not sure I handle the image indexes properly).

Partial answer to the question.

What is the output of some other image processing libraries?

scikit-image

The Python module scikit-image contains a lot of image processing algorithms. Here are the outputs of the skimage.transform.resize method (skimage.__version__: 0.12.3):

mode='constant' (default)

Code:

    import numpy as np
    from skimage.transform import resize

    image = np.array([
        [0., 1.],
        [0., 1.]
    ])
    print('image:\n{}'.format(image))

    # order=1 selects bi-linear interpolation
    image_resized = resize(image, (5, 5), order=1, mode='constant')
    print('image_resized:\n{}'.format(image_resized))

Result:

    image:
    [[ 0.  1.]
     [ 0.  1.]]
    image_resized:
    [[ 0.    0.07  0.35  0.63  0.49]
     [ 0.    0.1   0.5   0.9   0.7 ]
     [ 0.    0.1   0.5   0.9   0.7 ]
     [ 0.    0.1   0.5   0.9   0.7 ]
     [ 0.    0.07  0.35  0.63  0.49]]

mode='edge'

Result:

    image:
    [[ 0.  1.]
     [ 0.  1.]]
    image_resized:
    [[ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]]

mode='symmetric'

Result:

    image:
    [[ 0.  1.]
     [ 0.  1.]]
    image_resized:
    [[ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]
     [ 0.   0.1  0.5  0.9  1. ]]

mode='reflect'

Result:

    image:
    [[ 0.  1.]
     [ 0.  1.]]
    image_resized:
    [[ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]]

mode='wrap'

Result:

    image:
    [[ 0.  1.]
     [ 0.  1.]]
    image_resized:
    [[ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]
     [ 0.3  0.1  0.5  0.9  0.7]]

As you can see, the default resize mode (constant) produces a different output, but the edge mode returns the same result as OpenCV. None of the resize modes produces the expected result, though.
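The numbers above can be reproduced along one row with a small pure-Python sketch. It assumes that destination pixels are sampled at their centres, i.e. that destination index j maps to the source coordinate (j + 0.5) * scale - 0.5, and that values outside the image come from a padding rule. Both points are my assumptions about what the libraries do, not something taken from their source code, and the function name `resize_row_bilinear` is purely illustrative.

```python
import math

def resize_row_bilinear(row, new_width, mode="edge"):
    """Resize a 1-D row with linear interpolation, sampling at pixel centres.

    Hypothetical helper: only the 'constant', 'edge' and 'wrap' padding
    rules are sketched here.
    """
    n = len(row)
    scale = n / new_width  # source pixels per destination pixel

    def sample(i):
        # Value of the (virtually padded) source row at integer index i.
        if 0 <= i < n:
            return row[i]
        if mode == "constant":
            return 0.0                         # pad with zeros
        if mode == "edge":
            return row[min(max(i, 0), n - 1)]  # repeat the border pixel
        if mode == "wrap":
            return row[i % n]                  # treat the row as periodic
        raise ValueError("unsupported mode: " + mode)

    out = []
    for j in range(new_width):
        # Centre of destination pixel j expressed in source coordinates.
        x = (j + 0.5) * scale - 0.5
        x0 = math.floor(x)  # left neighbor (may be -1, i.e. in the padding)
        t = x - x0          # fractional distance to the left neighbor
        out.append((1.0 - t) * sample(x0) + t * sample(x0 + 1))
    return out

row = [0.0, 1.0]
for mode in ("constant", "edge", "wrap"):
    print(mode, [round(v, 2) for v in resize_row_bilinear(row, 5, mode)])
# constant [0.0, 0.1, 0.5, 0.9, 0.7]
# edge [0.0, 0.1, 0.5, 0.9, 1.0]
# wrap [0.3, 0.1, 0.5, 0.9, 0.7]
```

Under these assumptions the source coordinates are -0.3, 0.1, 0.5, 0.9 and 1.3, so the first and last destination pixels fall outside the segment between the two source pixels and their value depends only on the padding rule.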

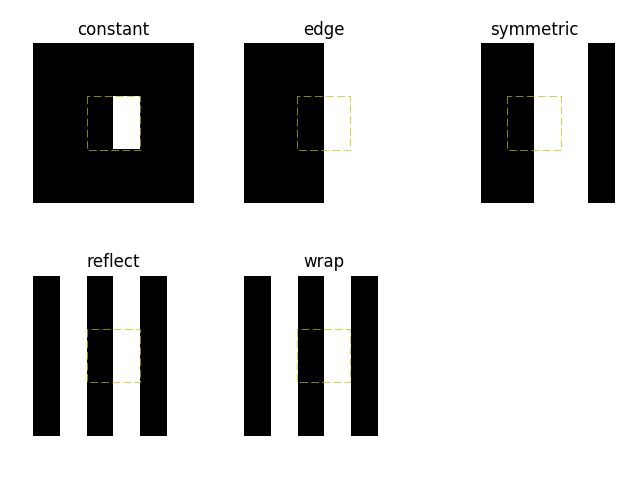

More information about Interpolation: Edge Modes.

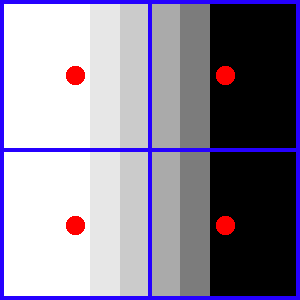

This picture sums up all the results in our case:

![edge modes]()

Pillow

Pillow is the friendly PIL fork by Alex Clark and Contributors. PIL is the Python Imaging Library by Fredrik Lundh and Contributors.

What about PIL.Image.Image.resize (PIL.__version__: 4.0.0)?

Code:

    import numpy as np
    from PIL import Image

    image = np.array([
        [0., 1.],
        [0., 1.]
    ])
    print('image:\n{}'.format(image))

    image_pil = Image.fromarray(image)
    image_resized_pil = image_pil.resize((5, 5), resample=Image.BILINEAR)
    # np.float was removed in NumPy 1.24; the builtin float is equivalent
    print('image_resized_pil:\n{}'.format(np.asarray(image_resized_pil, dtype=float)))

Result:

    image:
    [[ 0.  1.]
     [ 0.  1.]]
    image_resized_pil:
    [[ 0.          0.1         0.5         0.89999998  1.        ]
     [ 0.          0.1         0.5         0.89999998  1.        ]
     [ 0.          0.1         0.5         0.89999998  1.        ]
     [ 0.          0.1         0.5         0.89999998  1.        ]
     [ 0.          0.1         0.5         0.89999998  1.        ]]

Pillow image resizing matches the output of the OpenCV library.

Matlab

Matlab provides a toolbox named Image Processing Toolbox. Its imresize function resizes images.

Code:

    image = zeros(2,2,'double');
    image(1,2) = 1;
    image(2,2) = 1;
    image
    image_resize = imresize(image, [5 5], 'bilinear')

Result:

    image =
         0     1
         0     1

    image_resize =
         0    0.1000    0.5000    0.9000    1.0000
         0    0.1000    0.5000    0.9000    1.0000
         0    0.1000    0.5000    0.9000    1.0000
         0    0.1000    0.5000    0.9000    1.0000
         0    0.1000    0.5000    0.9000    1.0000

Again, Matlab does not produce the expected output, but it gives the same result as the two previous examples.

Custom bi-linear image resize method

Basic principle

See this Wikipedia article on Bilinear interpolation for more complete information.

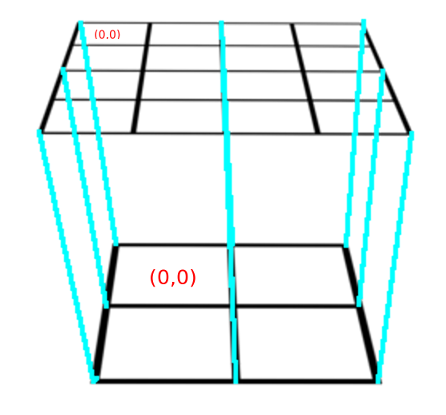

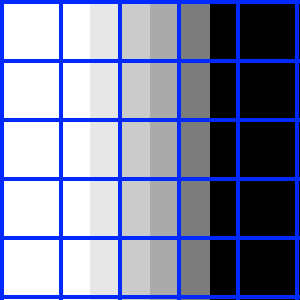

This figure should basically illustrate what happens when up-scaling from a 2x2 image to a 4x4 image:

![upscaling]()

With nearest neighbor interpolation, the destination pixels at (0,0), (0,1), (1,0) and (1,1) all get the value of the source pixel at (0,0).
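As a rough illustration of the nearest neighbor case, here is a minimal pure-Python sketch. It assumes the simplest index mapping, where the source index is the floor of the destination index times the size ratio; actual libraries may round differently, and the name `resize_nearest` is only illustrative.

```python
def resize_nearest(image, new_height, new_width):
    """Nearest neighbor resize of a 2-D list of lists (illustrative sketch)."""
    src_h, src_w = len(image), len(image[0])
    out = []
    for i in range(new_height):
        src_i = i * src_h // new_height  # floor(i * src_h / new_height)
        out.append([image[src_i][j * src_w // new_width]
                    for j in range(new_width)])
    return out

image = [[1, 2],
         [3, 4]]
for row in resize_nearest(image, 4, 4):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

Each source pixel is simply repeated over a 2x2 block of the destination image, so no new values are created.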

With a bi-linear interpolation, the destination pixel at (0,0) will get a value which is a linear combination of the 4 neighbors in the source image:

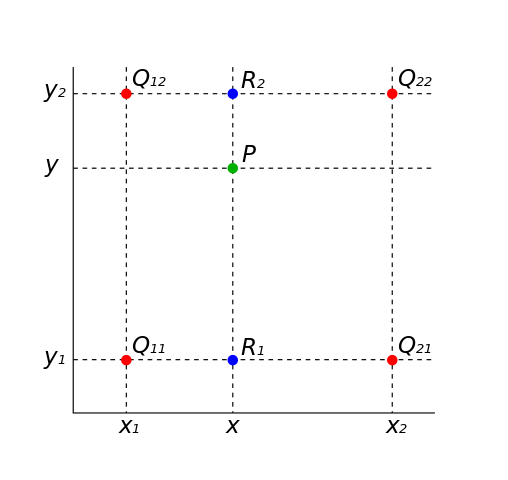

![Wikipedia: BilinearInterpolation.svg.png]()

The four red dots show the data points and the green dot is the point at which we want to interpolate.

R1 is calculated as: R1 = ((x2 - x)/(x2 - x1))*Q11 + ((x - x1)/(x2 - x1))*Q21.

R2 is calculated as: R2 = ((x2 - x)/(x2 - x1))*Q12 + ((x - x1)/(x2 - x1))*Q22.

Finally, P is calculated as a weighted average of R1 and R2: P = ((y2 - y)/(y2 - y1))*R1 + ((y - y1)/(y2 - y1))*R2.

Using coordinates normalized between [0, 1] simplifies the formula.
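To make the formulas concrete, here is a small sketch of both forms: the general one with R1, R2 and P, and the simplified one on the unit square (x1 = y1 = 0, x2 = y2 = 1). The function names are mine, and the naming of the corners follows the Wikipedia figure (Q11 at (x1, y1), Q21 at (x2, y1), Q12 at (x1, y2), Q22 at (x2, y2)).

```python
def bilinear(x, y, x1, y1, x2, y2, q11, q21, q12, q22):
    """General bi-linear interpolation at (x, y) inside [x1, x2] x [y1, y2]."""
    r1 = (x2 - x) / (x2 - x1) * q11 + (x - x1) / (x2 - x1) * q21  # along y = y1
    r2 = (x2 - x) / (x2 - x1) * q12 + (x - x1) / (x2 - x1) * q22  # along y = y2
    return (y2 - y) / (y2 - y1) * r1 + (y - y1) / (y2 - y1) * r2

def bilinear_unit(x, y, q11, q21, q12, q22):
    """Same interpolation with coordinates normalized to the unit square."""
    return (q11 * (1 - x) * (1 - y) + q21 * x * (1 - y)
            + q12 * (1 - x) * y + q22 * x * y)

# Corners taken from the 2x2 test image: left column 0, right column 1.
print(bilinear(0.25, 0.5, 0, 0, 1, 1, 0.0, 1.0, 0.0, 1.0))  # 0.25
print(bilinear_unit(0.25, 0.5, 0.0, 1.0, 0.0, 1.0))         # 0.25
```

With the test image used in this answer the result only depends on x, which is why every row of the resized images is identical.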

C++ implementation

This blog post (Resizing Images With Bicubic Interpolation) contains C++ code to perform image resizing with a bi-linear interpolation.

This is my own adaptation of that code to work with cv::Mat (I changed how the indexes are handled compared to the original code, and I am not sure the result is correct):

    #include <algorithm>  // std::min
    #include <cstdlib>    // EXIT_SUCCESS
    #include <iostream>
    #include <opencv2/core.hpp>

    // Linear interpolation between A and B for t in [0, 1].
    float lerp(const float A, const float B, const float t) {
        return A * (1.0f - t) + B * t;
    }

    // Interpolate the source value at the sub-pixel position (u, v).
    template <typename Type>
    Type resizeBilinear(const cv::Mat &src, const float u, const float v, const float xFrac, const float yFrac) {
        // The four neighbors, clamped to the image border
        int u0 = (int) u;
        int v0 = (int) v;
        int u1 = (std::min)(src.cols-1, (int) u+1);
        int v1 = v0;
        int u2 = u0;
        int v2 = (std::min)(src.rows-1, (int) v+1);
        int u3 = (std::min)(src.cols-1, (int) u+1);
        int v3 = (std::min)(src.rows-1, (int) v+1);

        // Interpolate along x on both rows, then along y
        float col0 = lerp(src.at<Type>(v0, u0), src.at<Type>(v1, u1), xFrac);
        float col1 = lerp(src.at<Type>(v2, u2), src.at<Type>(v3, u3), xFrac);
        float value = lerp(col0, col1, yFrac);

        return cv::saturate_cast<Type>(value);
    }

    template <typename Type>
    void resize(const cv::Mat &src, cv::Mat &dst) {
        // The first and last destination pixels map exactly onto
        // the first and last source pixels
        float scaleY = (src.rows - 1) / (float) (dst.rows - 1);
        float scaleX = (src.cols - 1) / (float) (dst.cols - 1);

        for (int i = 0; i < dst.rows; i++) {
            float v = i * scaleY;
            float yFrac = v - (int) v;

            for (int j = 0; j < dst.cols; j++) {
                float u = j * scaleX;
                float xFrac = u - (int) u;

                dst.at<Type>(i, j) = resizeBilinear<Type>(src, u, v, xFrac, yFrac);
            }
        }
    }

    void resize(const cv::Mat &src, cv::Mat &dst, const int width, const int height) {
        if (width < 2 || height < 2 || src.cols < 2 || src.rows < 2) {
            std::cerr << "Too small!" << std::endl;
            return;
        }

        dst = cv::Mat::zeros(height, width, src.type());

        switch (src.type()) {
            case CV_8U:
                resize<uchar>(src, dst);
                break;

            case CV_64F:
                resize<double>(src, dst);
                break;

            default:
                std::cerr << "Src type is not supported!" << std::endl;
                break;
        }
    }

    int main() {
        cv::Mat img = (cv::Mat_<double>(2,2) << 0, 1, 0, 1);
        std::cout << "img:\n" << img << std::endl;

        cv::Mat img_resize;
        resize(img, img_resize, 5, 5);
        std::cout << "img_resize=\n" << img_resize << std::endl;

        return EXIT_SUCCESS;
    }

It produces:

    img:
    [0, 1;
     0, 1]
    img_resize=
    [0, 0.25, 0.5, 0.75, 1;
     0, 0.25, 0.5, 0.75, 1;
     0, 0.25, 0.5, 0.75, 1;
     0, 0.25, 0.5, 0.75, 1;
     0, 0.25, 0.5, 0.75, 1]
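For comparison, the coordinate mapping used by this custom code can be sketched along a single row in pure Python. It is a simplified 1-D port of the C++ code above, not a library API: the scale is (source size - 1) / (destination size - 1), so the first and last destination pixels land exactly on the first and last source pixels. The function name `resize_row_align_corners` is only illustrative.

```python
def resize_row_align_corners(row, new_width):
    """Linear resize of a 1-D row where both end pixels are preserved.

    Illustrative sketch; requires new_width >= 2, like the C++ code.
    """
    n = len(row)
    scale = (n - 1) / (new_width - 1)
    out = []
    for j in range(new_width):
        u = j * scale            # destination index j in source coordinates
        u0 = int(u)              # left neighbor
        u1 = min(n - 1, u0 + 1)  # right neighbor, clamped to the border
        t = u - u0               # fractional part
        out.append(row[u0] * (1.0 - t) + row[u1] * t)
    return out

print(resize_row_align_corners([0.0, 1.0], 5))
# [0.0, 0.25, 0.5, 0.75, 1.0]
```

Here the source coordinates are 0, 0.25, 0.5, 0.75 and 1, so every destination pixel lies between the two source pixels and no padding is ever needed.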

Conclusion

In my opinion, it is unlikely that the OpenCV resize() function is wrong: none of the other image processing libraries I could test produces the expected output, and they can even reproduce the OpenCV output with the right parameter.

I tested against two Python modules (scikit-image and Pillow) as they are easy to use and geared towards image processing. I was also able to test with Matlab and its Image Processing Toolbox.

A rough custom implementation of the bi-linear interpolation for image resizing produces the expected result. I see two possible explanations for this behavior:

- the difference is inherent to the method these image processing libraries use rather than a bug (maybe they resize images efficiently at the cost of some loss compared to a strict bi-linear implementation?)

- it is somehow a convention to interpolate properly except at the border?

These libraries are open-source and one can explore their source code to understand where the discrepancy comes from.

The linked answer shows that the interpolation works only between the two original blue dots, but I cannot explain the reason for this behavior.

Why this answer?

This answer, even if it only partially answers the OP's question, is a good way for me to summarize the few things I found about this topic. I also believe it could help other people who may find this.