For black-and-white text

If you use the .normal compositing operation, you will not get the same result as with .hardLight. Your picture shows the result of the .hardLight operation.

The .normal operation is the classic OVER operation, with the formula: (Image1 * A1) + (Image2 * (1 – A1)).
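As a minimal sketch of the OVER formula above, the function below applies it to a single color channel. The name `over` and its parameters are illustrative and not part of Core Graphics. Values are assumed to be normalized to 0...1, and `image1` is assumed not to be premultiplied yet.

```swift
// Hypothetical helper (not a Core Graphics API): OVER for one color channel.
// image1: foreground channel, a1: foreground alpha, image2: background channel.
func over(_ image1: Double, _ a1: Double, _ image2: Double) -> Double {
    return (image1 * a1) + (image2 * (1 - a1))
}

// A fully opaque foreground replaces the background.
let opaqueResult = over(0.8, 1.0, 0.2)       // 0.8
// A fully transparent foreground leaves the background untouched.
let transparentResult = over(0.8, 0.0, 0.2)  // 0.2
// At 50 percent alpha the result lands halfway between the two.
let halfResult = over(0.8, 0.5, 0.2)         // about 0.5
```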

The text here is premultiplied (RGB * A), so its RGB values depend on A in this particular case. The RGB of the text image can contain any color, including black. In a premultiplied image, if A = 0 (black alpha) and RGB = 0 (black color), the image is fully transparent; if A = 1 (white alpha) and RGB = 0 (black color), the image is opaque black.
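To make the premultiplication step concrete, here is a small sketch. The `Pixel` type is hypothetical and exists only for illustration; components are assumed to be in 0...1.

```swift
// Hypothetical RGBA pixel type for illustration; components are in 0...1.
struct Pixel {
    var r: Double
    var g: Double
    var b: Double
    var a: Double

    // Premultiplication stores RGB * A in place of RGB.
    func premultiplied() -> Pixel {
        return Pixel(r: r * a, g: g * a, b: b * a, a: a)
    }
}

// White at 50 percent alpha: the stored RGB becomes 0.5, so it depends on alpha.
let halfWhite = Pixel(r: 1, g: 1, b: 1, a: 0.5).premultiplied()

// RGB = 0 and A = 0: fully transparent.
let clearBlack = Pixel(r: 0, g: 0, b: 0, a: 0).premultiplied()

// RGB = 0 and A = 1: opaque black.
let opaqueBlack = Pixel(r: 0, g: 0, b: 0, a: 1).premultiplied()
```

Note that `clearBlack` and `opaqueBlack` have identical RGB values; only the alpha channel tells them apart.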

If your text has no alpha when you use the .normal operation, you'll get the ADD operation: Image1 + Image2.

To get the result you want, set the compositing operation to .hardLight.

The .hardLight compositing operation works as .multiply if the alpha of the text image is less than 50 percent (A < 0.5, the image is almost transparent).

Formula for .multiply: Image1 * Image2

The .hardLight compositing operation works as .screen if the alpha of the text image is greater than or equal to 50 percent (A >= 0.5, the image is semi-opaque).

Formula 1 for .screen: (Image1 + Image2) – (Image1 * Image2)

Formula 2 for .screen: 1 – (1 – Image1) * (1 – Image2)
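The multiply and screen formulas above can be sketched per channel as follows. The function names are illustrative only (they are not Core Graphics calls), and inputs are assumed to be in 0...1. The example also checks that the two screen formulas agree.

```swift
// Hypothetical per-channel helpers; inputs are assumed to be in 0...1.
func multiply(_ image1: Double, _ image2: Double) -> Double {
    return image1 * image2
}

// Formula 1 for screen.
func screen1(_ image1: Double, _ image2: Double) -> Double {
    return (image1 + image2) - (image1 * image2)
}

// Formula 2 for screen.
func screen2(_ image1: Double, _ image2: Double) -> Double {
    return 1 - (1 - image1) * (1 - image2)
}

let textValue = 0.5
let backgroundValue = 0.25

let multiplied = multiply(textValue, backgroundValue)  // 0.125, darker than both inputs
let screenedA = screen1(textValue, backgroundValue)    // 0.625, lighter than both inputs
let screenedB = screen2(textValue, backgroundValue)    // 0.625, same as formula 1
```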

The .screen operation gives a much softer result than .plus, and it keeps alpha from exceeding 1 (the .plus operation adds the alphas of Image1 and Image2, so with two alphas you might get alpha = 2). The .screen compositing operation is good for making reflections.
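The difference in how the two operations combine alpha can be sketched with plain arithmetic. Both helpers are hypothetical, and the sum is deliberately left unclamped to show the value described above; an 8-bit bitmap cannot actually store an alpha above 1.

```swift
// Hypothetical helpers comparing how two alpha values combine.
func plusAlpha(_ a1: Double, _ a2: Double) -> Double {
    return a1 + a2                      // unclamped sum
}

func screenAlpha(_ a1: Double, _ a2: Double) -> Double {
    return 1 - (1 - a1) * (1 - a2)      // never exceeds 1 for inputs in 0...1
}

let summedAlpha = plusAlpha(1.0, 1.0)       // 2.0
let screenedAlpha = screenAlpha(1.0, 1.0)   // 1.0
let partialAlpha = screenAlpha(0.5, 0.5)    // 0.75
```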

    import UIKit
    import CoreText

    // Assumes `selectedOpacity` and `topImageView` are members of the enclosing type.
    func editImage() {
        print("Drawing image with \(selectedOpacity) alpha")

        let text = "hello world"
        let scale = UIScreen.main.scale
        let font = UIFont.systemFont(ofSize: 58 * scale)

        let backgroundCGImage = #imageLiteral(resourceName: "background").cgImage!
        let backgroundImage = CIImage(cgImage: backgroundCGImage)
        let imageRect = backgroundImage.extent

        // Set up a bitmap context, draw the background, then draw the text on top
        let colorSpace = CGColorSpaceCreateDeviceRGB()
        let alphaInfo = CGImageAlphaInfo.premultipliedLast.rawValue
        let bitmapContext = CGContext(data: nil,
                                      width: Int(imageRect.width),
                                      height: Int(imageRect.height),
                                      bitsPerComponent: 8,
                                      bytesPerRow: 0,
                                      space: colorSpace,
                                      bitmapInfo: alphaInfo)!

        bitmapContext.draw(backgroundCGImage, in: imageRect)
        bitmapContext.setAlpha(CGFloat(selectedOpacity))
        bitmapContext.setTextDrawingMode(.fill)

        // TRY THREE COMPOSITING OPERATIONS HERE
        bitmapContext.setBlendMode(.hardLight)
        //bitmapContext.setBlendMode(.multiply)
        //bitmapContext.setBlendMode(.screen)

        // White text
        bitmapContext.textPosition = CGPoint(x: 15 * scale, y: (20 + 60) * scale)
        let displayLineTextWhite = CTLineCreateWithAttributedString(NSAttributedString(string: text, attributes: [.foregroundColor: UIColor.white, .font: font]))
        CTLineDraw(displayLineTextWhite, bitmapContext)

        // Black text
        bitmapContext.textPosition = CGPoint(x: 15 * scale, y: 20 * scale)
        let displayLineTextBlack = CTLineCreateWithAttributedString(NSAttributedString(string: text, attributes: [.foregroundColor: UIColor.black, .font: font]))
        CTLineDraw(displayLineTextBlack, bitmapContext)

        // Render the context into an image and show it
        let outputImage = bitmapContext.makeImage()!
        topImageView.image = UIImage(cgImage: outputImage)
    }

So, to recreate this compositing operation, you need the following logic:

    // rgb1 - text image
    // rgb2 - background
    // a1   - alpha of text image

    if a1 >= 0.5 {
        // use this formula for compositing: 1 - (1 - rgb1) * (1 - rgb2)
    } else {
        // use this formula for compositing: rgb1 * rgb2
    }
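A runnable sketch of the logic above for one color channel might look like this. The function name `compositeAsDescribed` is hypothetical, and the code follows this answer's description, with the text alpha as the pivot. It is not Core Graphics' own implementation: the W3C Compositing and Blending definition of hard-light switches on the source color value instead, so treat this as an illustration of the branching only.

```swift
// Hypothetical helper mirroring the pseudocode: the pivot is the text alpha.
// rgb1: text channel, rgb2: background channel, a1: text alpha (all in 0...1).
func compositeAsDescribed(rgb1: Double, rgb2: Double, a1: Double) -> Double {
    if a1 >= 0.5 {
        return 1 - (1 - rgb1) * (1 - rgb2)   // screen branch
    } else {
        return rgb1 * rgb2                   // multiply branch
    }
}

// Same colors, different alpha: the branch taken changes the result.
let lightened = compositeAsDescribed(rgb1: 0.5, rgb2: 0.5, a1: 0.75)  // 0.75
let darkened = compositeAsDescribed(rgb1: 0.5, rgb2: 0.5, a1: 0.25)   // 0.25
```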

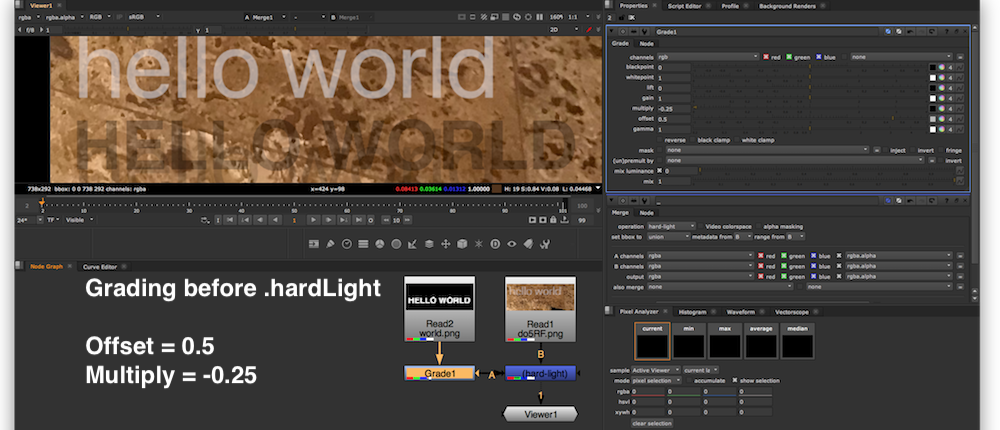

I recreated the image in the compositing app The Foundry NUKE 11. Offset = 0.5 here is the same as Add = 0.5.

I set the Offset property to 0.5 because transparency = 0.5 is the pivot point of the .hardLight compositing operation.

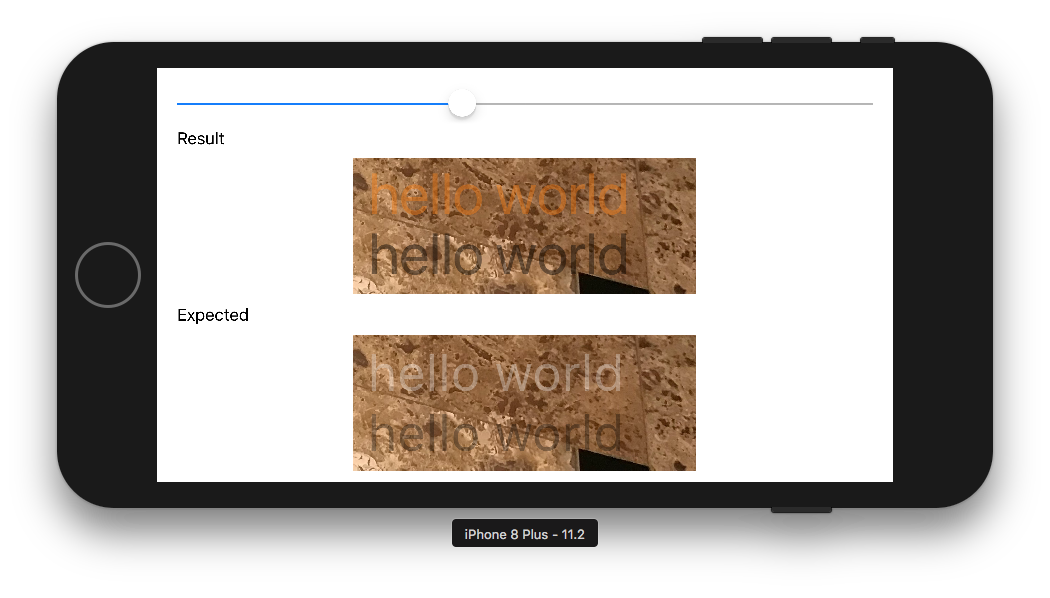

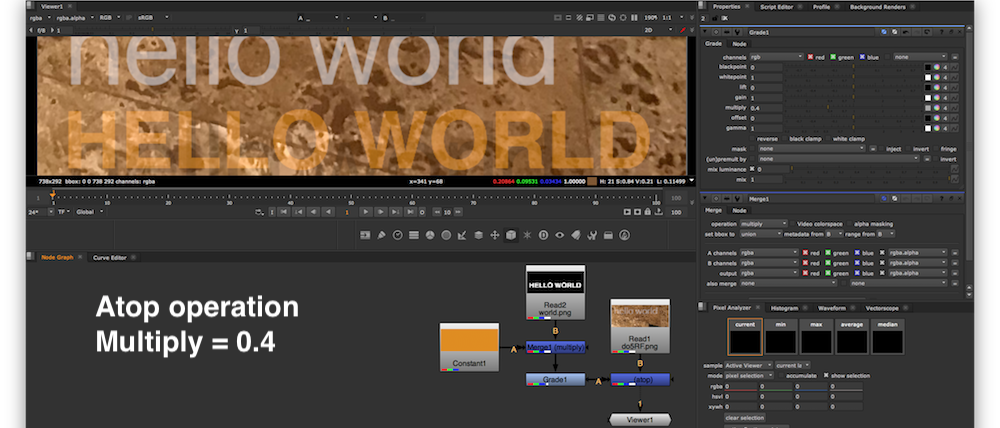

For color text

You need the .sourceAtop compositing operation if you have ORANGE (or any other color) text in addition to B&W text. When you pass .sourceAtop to the setBlendMode method, Core Graphics uses the alpha of the background image to determine where the text shows. Alternatively, you can use the CISourceAtopCompositing Core Image filter instead of CISourceOverCompositing.

    bitmapContext.setBlendMode(.sourceAtop)

or

    let compositingFilter = CIFilter(name: "CISourceAtopCompositing")

The .sourceAtop operation has the following formula: (Image1 * A2) + (Image2 * (1 – A1)). As you can see, you need two alpha channels: A1 is the alpha of the text and A2 is the alpha of the background image.
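As a small sketch of this formula for one color channel, consider the helper below. The name `sourceAtop` and its parameter labels are illustrative, not a Core Graphics function, and the values are assumed to be premultiplied and in 0...1.

```swift
// Hypothetical helper: source-atop for one channel.
// image1/a1: text channel and alpha, image2/a2: background channel and alpha.
func sourceAtop(image1: Double, a1: Double, image2: Double, a2: Double) -> Double {
    return (image1 * a2) + (image2 * (1 - a1))
}

// Opaque text over an opaque background: the text color wins.
let onOpaque = sourceAtop(image1: 0.8, a1: 1, image2: 0.2, a2: 1)  // 0.8
// Opaque text over a fully transparent background: nothing is drawn.
let onClear = sourceAtop(image1: 0.8, a1: 1, image2: 0.0, a2: 0)   // 0.0
// No text at this pixel: the background passes through unchanged.
let noText = sourceAtop(image1: 0.0, a1: 0, image2: 0.2, a2: 1)    // 0.2
```

The second case is the key difference from OVER: the text is only visible where the background has alpha.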

    bitmapContext.textPosition = CGPoint(x: 15 * UIScreen.main.scale, y: (20 + 60) * UIScreen.main.scale)
    let displayLineTextOrange = CTLineCreateWithAttributedString(NSAttributedString(string: text, attributes: [.foregroundColor: UIColor.orange, .font: UIFont.systemFont(ofSize: 58 * UIScreen.main.scale)]))
    CTLineDraw(displayLineTextOrange, bitmapContext)
