(Edited March 17th)

Here comes an improvement of the answer with this 'headline':

"Instead of using multiple sinuses with different amplitudes, you should use them with random phases:"

The problem with the way that was written is that it consumes enormous amounts of RAM. Here I have fixed it. It still uses a large amount of CPU:

from scipy.io import wavfile

import numpy as np

# It takes my CPU about 50 sec to generate 1 sec of "noise".

def band_limited_noise(min_freq, max_freq, freq_step, samples=44100, samplerate=44100):

t = np.linspace(0, samples/samplerate, samples)

freqs = np.arange(min_freq, max_freq+1, freq_step)

no_of_freqs = len(freqs)

pi_2 = 2*np.pi

phases = np.random.rand(no_of_freqs)*pi_2 # I am not sure why it is necessary to add randomness?

signals = np.zeros(samples)

for i in range(no_of_freqs):

signals = signals + np.sin(pi_2*freqs[i]*t + phases[i])

peak_value = np.max(np.abs(signals))

signals /= peak_value

return signals

if __name__ == '__main__':

# CONFIGURE HERE:

seconds = 3

samplerate = 44100

freq_step = 0.25

x = band_limited_noise(10, 20000, freq_step, seconds * samplerate, samplerate)

wavfile.write("add all frequencies 3 sec freq_step 0,25.wav", 44100, x)

EDITED March 17th:

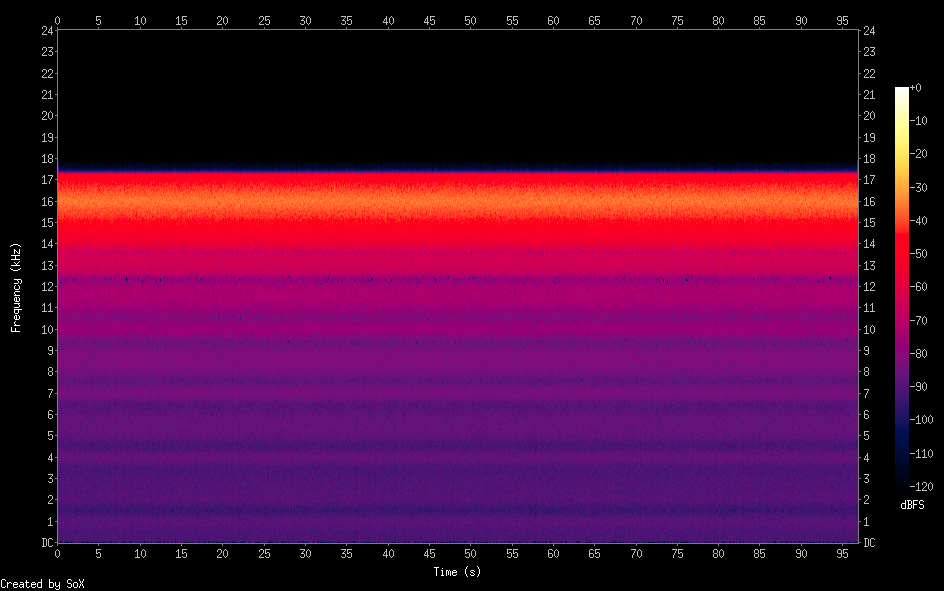

I have now done a thorough analysis of the above generated "noise", compared to more 'ordinary' white noise, created with the following, much shorter and much faster code. The above has less variation of the 'amount' of signal at each frequency:

from scipy.io import wavfile

from scipy import stats

import numpy

sample_rate = 44100

length_in_seconds = 3

no_of_repetitions = 10

noise = numpy.array( stats.truncnorm(-1, 1, loc=0, scale=1).rvs(size = sample_rate * length_in_seconds * no_of_repetitions) )

if no_of_repetitions > 1:

slices = []

for i in range(no_of_repetitions):

slices.append(

numpy.array(noise[i * sample_rate * length_in_seconds:(i + 1) * sample_rate * length_in_seconds - 1]))

added_up = numpy.zeros(len(slices[0]))

for i in range(no_of_repetitions):

added_up = added_up + slices[i]

else:

added_up = noise

peak_value = numpy.max(numpy.abs(added_up))

numpy.abs(peak_value2))

added_up /= peak_value

wavfile.write('Quick noise float64 -1 +1 scale 1 rep 10.wav', sample_rate, added_up)

In order to make the comparison of the variation of the 'amount' of signal at each frequency, I had to do a 10 Hz high pass and a 20000 Hz low pass filtering of the "Quick noise" in Audacity, before comparing it's frequency spectrum (FFT) with that of the 'noise' from the more complex Python script that adds the sinuses of a large amount of frequencies.

To generate the frequency spectrum of the 2 WAV-files (both 3 seconds) I used scipy.FFT.

I analysed the variation using more or less this:

distfit.distfit.fit_transform(numpy.abs(scipy.FFT.rfft(data_from_wav_file)))

Here comes the details of that:

import soundfile as sf

from scipy.fft import rfft, rfftfreq

import numpy as np

from matplotlib import pyplot as plot

import distfit

if __name__ == '__main__':

# CONFIGURE HERE:

soundfile = "add all frequencies 3 sec freq_step 0,125.wav"

print("Input file: ", soundfile)

with sf.SoundFile(soundfile, 'r') as f:

new_samplerate = f.samplerate

data = f.read()

cutofflen = len(data)

xf = rfftfreq(cutofflen, 1 / new_samplerate)

yf = rfft(data)

yf = np.abs(yf) # Length of a vector...

print("Fit variations in the FFT of WAV-file to statistical distribution:")

FFT_dist = distfit.distfit(distr=['norm', 't', 'dweibull', 'uniform']) # Create the distfit object and choose interesting distributions.

FFT_dist.fit_transform(yf) # Call the fit transform function

FFT_dist.plot() # dfit.plot_summary() # Plot the summary

You can see the large variations of the frequency response using:

plot.plot(xf, yf)

plot.show()

According to the mentioned FFT and distfit analysis, none of these 2 types of noise actually come near to what I want: An exact equal amount of ALL frequencies in a certain frequency band, for instance from 10 Hz to 20 KHz. The variation (=the standard deviation divided by the mean value, in the Normal distribution, computed by distfit) is = 0,538. That's not impressing. I would have liked 0,2. :-) Making the freq_step even smaller does not make it better. Actually worse.

np.random.randn), then bandpass filter it in order to give it the desired frequency characteristics before adding it to your signal. – Biennial