I am trying to write a helper function that applies a color mask to a given image. The function has to set all opaque pixels of an image to the same color.

Here is what I have so far:

    extension UIImage {
        func applyColorMask(color: UIColor, context: CIContext) -> UIImage {
            guard let cgImageInput = self.cgImage else {
                print("applyColorMask: \(self) has no cgImage attribute.")
                return self
            }

            // Throw away existing colors, and fill the non transparent pixels with the input color
            // s.r = dot(s, redVector), s.g = dot(s, greenVector), s.b = dot(s, blueVector), s.a = dot(s, alphaVector)
            // s = s + bias
            let colorFilter = CIFilter(name: "CIColorMatrix")!
            let ciColorInput = CIColor(cgColor: color.cgColor)
            colorFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 0), forKey: "inputRVector")
            colorFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 0), forKey: "inputGVector")
            colorFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 0), forKey: "inputBVector")
            colorFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 1), forKey: "inputAVector")
            colorFilter.setValue(CIVector(x: ciColorInput.red, y: ciColorInput.green, z: ciColorInput.blue, w: 0), forKey: "inputBiasVector")
            colorFilter.setValue(CIImage(cgImage: cgImageInput), forKey: kCIInputImageKey)

            if let cgImageOutput = context.createCGImage(colorFilter.outputImage!, from: colorFilter.outputImage!.extent) {
                return UIImage(cgImage: cgImageOutput)
            } else {
                print("applyColorMask: failed to apply filter to \(self)")
                return self
            }
        }
    }

The code works fine for black and white, but it does not give what I expected with other colors. See the original image and the screenshots below: the same color is used for the border and for the image, yet the two look different. My function is doing something wrong. Did I miss something in the filter matrix?

The original image (there's a white dot at the center):

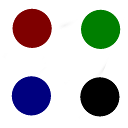

From top to bottom: the image filtered with UIColor(1.0, 1.0, 1.0, 1.0), inserted into a UIImageView whose border has the same color; then the same with UIColor(0.6, 0.5, 0.4, 1.0); and finally with UIColor(0.2, 0.5, 1.0, 1.0).

EDIT

Running Filterpedia gives me the same result. My understanding of the CIColorMatrix filter may be wrong then. The documentation says:

This filter performs a matrix multiplication, as follows, to transform the color vector:

- s.r = dot(s, redVector)

- s.g = dot(s, greenVector)

- s.b = dot(s, blueVector)

- s.a = dot(s, alphaVector)

- s = s + bias
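To make my reading of that formula concrete, here is a minimal sketch in plain Swift that applies it literally to a single pixel. The `RGBA` type and the `colorMatrix` function are hypothetical names of mine, not Core Image API, and the sketch assumes components in 0...1 with no color management or premultiplied alpha involved.

```swift
// Hypothetical RGBA value type (not part of Core Image); components are in 0...1.
struct RGBA: Equatable {
    var r: Double, g: Double, b: Double, a: Double
}

// Four-component dot product, as used by the documented formula.
func dot(_ p: RGBA, _ v: RGBA) -> Double {
    return p.r * v.r + p.g * v.g + p.b * v.b + p.a * v.a
}

// Literal reading of the documentation: four dot products, then add the bias.
func colorMatrix(_ s: RGBA,
                 rVector: RGBA, gVector: RGBA, bVector: RGBA, aVector: RGBA,
                 bias: RGBA) -> RGBA {
    return RGBA(r: dot(s, rVector) + bias.r,
                g: dot(s, gVector) + bias.g,
                b: dot(s, bVector) + bias.b,
                a: dot(s, aVector) + bias.a)
}

// Same setup as in my filter: zero out RGB, keep alpha, add the color as bias.
let zero = RGBA(r: 0, g: 0, b: 0, a: 0)
let keepAlpha = RGBA(r: 0, g: 0, b: 0, a: 1)
let opaqueWhite = RGBA(r: 1, g: 1, b: 1, a: 1)
let result = colorMatrix(opaqueWhite,
                         rVector: zero, gVector: zero, bVector: zero,
                         aVector: keepAlpha,
                         bias: RGBA(r: 0.5, g: 0, b: 0, a: 0))

// Truncated to 8 bits, the red component is 127.
let red8 = Int(result.r * 255)
```

Under these assumptions an opaque pixel comes out as (0.5, 0, 0, 1), which is the value I expect from the filter.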

Then, let's say I throw away all RGB data with (0,0,0,0) vectors and pass (0.5, 0, 0, 0), mid-red, as the bias vector; I would expect all fully opaque pixels of my image to become (127, 0, 0). The screenshot below shows that the result is slightly lighter (red=186):

Here is pseudocode for what I want to do:

    // image "im" is a vector of pixels
    // pixel "p" is struct of rgba values
    // color "col" is the input color or a struct of rgba values
    for (p in im) {
        p.r = col.r
        p.g = col.g
        p.b = col.b
        // Nothing to do with the alpha channel
    }
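The same idea as a small runnable Swift sketch, working on a plain array rather than a real image. `Pixel` and `recolor` are hypothetical names used only for illustration, and the sketch assumes 8-bit, non-premultiplied RGBA values.

```swift
// Hypothetical 8-bit RGBA pixel; assumes non-premultiplied alpha.
struct Pixel: Equatable {
    var r: UInt8, g: UInt8, b: UInt8, a: UInt8
}

// Replaces the RGB of every pixel with the given color and keeps each pixel's own alpha.
func recolor(_ image: [Pixel], with color: Pixel) -> [Pixel] {
    return image.map { p in
        Pixel(r: color.r, g: color.g, b: color.b, a: p.a)
    }
}

// One opaque pixel and one fully transparent pixel.
let image = [Pixel(r: 10, g: 20, b: 30, a: 255), Pixel(r: 0, g: 0, b: 0, a: 0)]
let recolored = recolor(image, with: Pixel(r: 51, g: 128, b: 255, a: 255))
```

Both pixels take the RGB of the input color, and their alpha values stay at 255 and 0 respectively.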

[...] 1,0,0,0 for red, 0,1,0,0 for green, and 0,0,1,0 for blue, you get a normal looking picture. Now, to reduce the red channel by half, set inputRVector to 0.5,0,0,0. To remove all red set it to 0,0,0,0. That's basically how I use it. I'm sure there are other combinations to get other results, but that should get you started. – Davie

[...] UISliders, displaying you the min/max/current values. If it's like the original version, there are six different test images along with a few dozen custom filters using either Metal or CIKernel (that use GLSL). One caveat: it's meant to run on iPad only (you can use the simulator, but like any CoreImage project the performance is very poor). – Davie

[...] CIPhotoProcessingMono. Also, if you know how to write/consume a CIColorKernel, I can give you the GLSL code for that. It's pretty simple: 4 lines of code wrapped around a kernel vec4 function. – Davie

[...] CIColorKernel. If I'm correct in what you want, let me know and I'll write up the Swift code to do it. – Davie