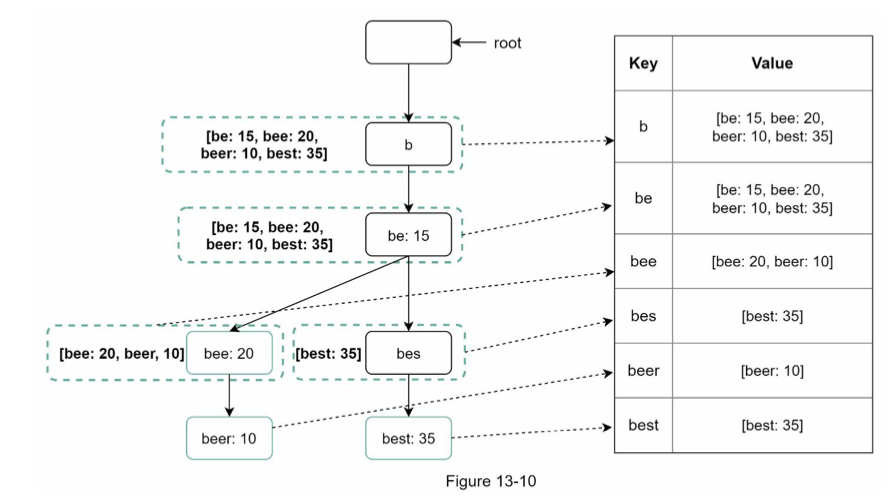

I have been reading a bit about tries, and how they are a good structure for typeahead designs. Aside from the trie, you usually also have a key/value pair for nodes and pre-computed top-n suggestions to improve response times.

Usually, from what I've gathered, it is ideal to keep them in memory for fast searches, such as what was suggested in this question: Scrabble word finder: building a trie, storing a trie, using a trie?. However, what if your Trie is too big and you have to shard it somehow? (e.g. perhaps a big e-commerce website).

The key/value pair for pre-computed suggestions can be obviously implemented in a key/value store (either kept in memory, like memcached/redis or in a database, and horizontally scalled as needed), but what is the best way to store a trie if it can't fit in memory? Should it be done at all, or should distributed systems each hold part of the trie in memory, while also replicating it so that it is not lost?

Alternatively, a search service (e.g. Solr or Elasticsearch) could be used to produce search suggestions/auto-complete, but I'm not sure whether the performance is up to par for this particular use-case. The advantage of the Trie is that you can pre-compute top-N suggestions based on its structure, leaving the search service to handle actual search on the website.

I know there are off-the-shelf solutions for this, but I'm mostly interesting in learning how to re-invent the wheel on this one, or at least catch a glimpse of the best practices if one wants to broach this topic.

What are your thoughts?

Edit: I also saw this post: https://medium.com/@prefixyteam/how-we-built-prefixy-a-scalable-prefix-search-service-for-powering-autocomplete-c20f98e2eff1, which basically covers the use of Redis as primary data store for a skip list with mongodb for LRU prefixes. Seems an OK approach, but I would still want to learn if there are other viable/better approaches.