I am doing a lab setup of EKS with kubectl. After the cluster build completes, I run the following:

> kubectl get node

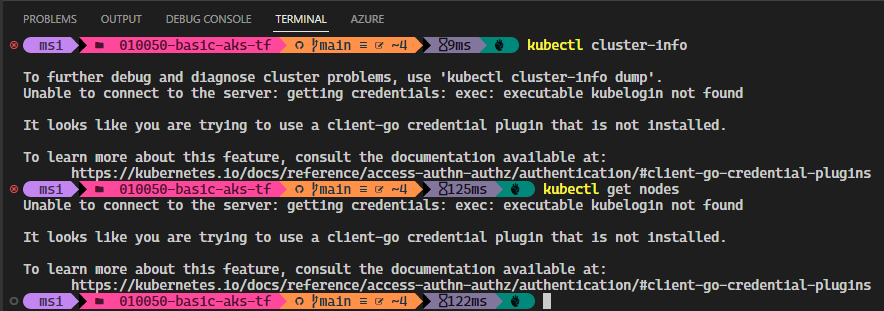

And I get the following error:

Unable to connect to the server: getting credentials: exec: exit status 2

I am fairly sure it is a configuration issue, because the following command also fails and prints AWS CLI usage text before the same error:

> kubectl version

usage: aws [options] <command> <subcommand> [<subcommand> ...] [parameters]

To see help text, you can run:

aws help

aws <command> help

aws <command> <subcommand> help

aws: error: argument operation: Invalid choice, valid choices are:

create-cluster | delete-cluster

describe-cluster | describe-update

list-clusters | list-updates

update-cluster-config | update-cluster-version

update-kubeconfig | wait

help

Client Version: version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.1", GitCommit:"d224476cd0730baca2b6e357d144171ed74192d6", GitTreeState:"clean", BuildDate:"2020-01-14T21:04:32Z", GoVersion:"go1.13.5", Compiler:"gc", Platform:"darwin/amd64"}

Unable to connect to the server: getting credentials: exec: exit status 2
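A possible first check, sketched under an assumption: the kubeconfig is not shown here, but if its exec section calls `aws eks get-token`, then the installed AWS CLI must offer that operation. The list of valid choices printed above does not include it. The snippet below only scans that pasted list; `has_get_token` is a hypothetical helper name, not part of any tool.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if "get-token" appears in the text on stdin.
has_get_token() {
    grep -q 'get-token'
}

# The "valid choices" list copied from the aws error output in this post.
choices='create-cluster | delete-cluster
describe-cluster | describe-update
list-clusters | list-updates
update-cluster-config | update-cluster-version
update-kubeconfig | wait
help'

# Report whether the operation is offered by the CLI that produced the list.
if printf '%s\n' "$choices" | has_get_token; then
    result='get-token is available'
else
    result='get-token is missing'
fi
echo "$result"   # prints "get-token is missing"
```

If that assumption holds, running `aws --version` and then `aws eks get-token --cluster-name <cluster-name>` (with the real cluster name substituted) directly in the terminal would exercise the same call kubectl makes, which may show whether the AWS CLI itself is the failing piece.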

Please advise on next steps for troubleshooting.