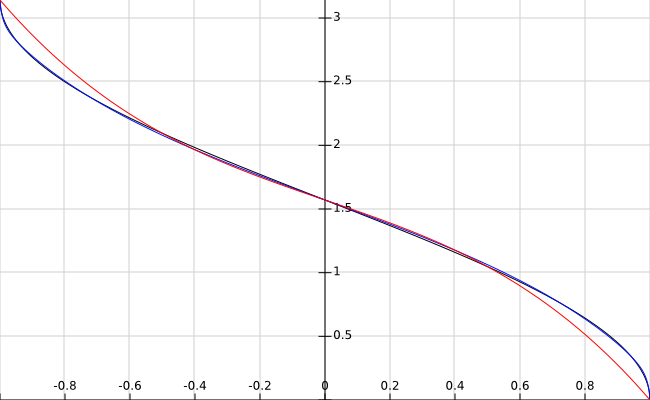

The code is intended for Visual Studio C++ and is based on my proposed fast approximation of the arctangent using the Padé-Chebyshev method (see Fast & accurate atan/arctan approximation algorithm). The function provides almost complete single-precision format accuracy, only slightly inferior in accuracy to the standard acosf() function, but significantly faster (more than fourfold in my system). The function calculates arccos x = 2 arctg ((1-x)/(1+x))^0.5. The maximum absolute error is about 4.7e-7 radians. The root mean square relative error is about 3.8e-8. It should be noted that the approximation error does not have a systematic component; the error is almost entirely due to rounding errors of intermediate calculation results.

_declspec(naked) float _vectorcall arccos(float x)

{

static const float ct[11] = // Constants table

{

2.26527548f, // a0*(a1+b0)=a0*c1

3.50788045f, // 2*a0*a1

0.0810196251f, // a0*(a3+b2)=a0*c3

0.0289801192f, // 2*a0*a3

1.53400576f, // a0

3.06801152f, // 2*a0

0.901376426f, // a0*(a2+b1)=a0*c2

0.906169057f, // 2*a0*a2

0.141592654f, // pi-3

3.0f, // 3

1.0f // 1

};

_asm

{

mov edx,offset ct // edx contains the address of constants table

vmovss xmm1,[edx+40] // xmm1 = 1

vsubss xmm2,xmm1,xmm0 // xmm2 = 1-x

vaddss xmm0,xmm0,xmm1 // xmm0 = 1+x

vdivss xmm2,xmm2,xmm0 // xmm2 = (1-x)/(1+x) = y

vmovups xmm3,[edx+16] // xmm3 = 2*a0*a2 # a0*c2 : 2*a0 # a0

vsqrtss xmm0,xmm2,xmm2 // xmm0 = y^0.5 = t

vshufps xmm2,xmm2,xmm2,0 // xmm2 = y # y : y # y

vmulps xmm4,xmm2,xmm2 // xmm4 = y^2 # y^2 : y^2 # y^2

vucomiss xmm2,xmm1 // Compare y with 1

ja arccos_m // Goto if x<0 (y>1) and x<>NaN

vfmadd231ps xmm3,xmm2,[edx] // xmm3 ~ 2*(a3*y+a2) # c3*y+c2 : 2*(a1*y+1) # c1*y+1

vmovhlps xmm1,xmm1,xmm3 // xmm1 ~ 2*(a3*y+a2) # c3*y+c2

vfmadd231ps xmm3,xmm4,xmm1 // xmm3 ~ 2*(a3*y^3+a2*y^2+a1*y+1) # c3*y^3+c2*y^2+c1*y+1

vmovshdup xmm2,xmm3 // xmm2 = 2*P; xmm3 = Q

vdivss xmm2,xmm2,xmm3 // xmm2 = 2*P/Q

vmulss xmm0,xmm0,xmm2 // xmm0 = 2*t*P/Q = 2*arctg(t) = arccos(x)

ret // Return

arccos_m: // For t>1 use the formula pi-2*arctg(1/t)

vmovss xmm1,[edx+32] // xmm1 = pi-3

vucomiss xmm0,xmm2 // Compare t with y to reveal infinity (case x=-1)

jnb arccos_end // If x=-1 the required data is already in xmm1

vfmadd213ps xmm3,xmm2,[edx] // xmm3 ~ 2*(a2*y+a3) # c2*y+c3 : 2*(y+a1) # y+c1

vmovhlps xmm2,xmm2,xmm3 // xmm2 ~ 2*(a2*y+a3) # c2*y+c3

vfmadd213ps xmm3,xmm4,xmm2 // xmm3 ~ 2*(y^3+a1*y^2+a2*y+a3) # y^3+c1*y^2+c2*y+c3

vmovshdup xmm4,xmm3 // xmm4 = 2*P; xmm3 = Q

vmulss xmm0,xmm0,xmm3 // xmm0 = t*Q

vfmsub132ss xmm1,xmm4,xmm0 // xmm1 = (pi-3)*t*Q-2*P

vdivss xmm1,xmm1,xmm0 // xmm1 = (pi-3)-2*P/(t*Q)

arccos_end: // Add 3 to result

vaddss xmm0,xmm1,[edx+36] // xmm0 = pi-2*P/(x*Q) = 2*arctg(t) = arccos(x)

ret // Return

}

}

sinffrommath.hon my system takes only about 2.5x as much time as your approximation. Considering your function is inlined and the lib call is not, this is really not much difference. My guess is if you added range reduction so it was usuable in the same way as the standard function, you would have exactly the same speed. – Holmgren