I am exploring the ELK stack and have come across an issue.

I have generated logs and forwarded them to Logstash. The logs are in JSON format, so they are pushed directly into ES with only a JSON filter in the Logstash config. I then started Kibana and pointed it at the ES instance.

Logstash Config:

    filter {
      json {
        source => "message"
      }
    }

Now an index is created for each day's logs, and Kibana happily shows all of the logs from all indexes.

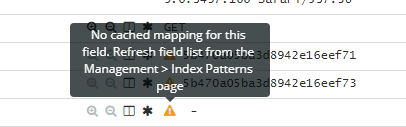

My issue is that many fields in the logs are not enabled/indexed for filtering in Kibana. When I try to add them to the filter in Kibana, it says "unindexed fields cannot be searched".

Note: these are not syslog/Apache logs. They are custom logs in JSON format.

Log format:

{"message":"ResponseDetails","@version":"1","@timestamp":"2015-05-23T03:18:51.782Z","type":"myGateway","file":"/tmp/myGatewayy.logstash","host":"localhost","offset":"1072","data":"text/javascript","statusCode":200,"correlationId":"a017db4ebf411edd3a79c6f86a3c0c2f","docType":"myGateway","level":"info","timestamp":"2015-05-23T03:15:58.796Z"}
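To rule out a malformed log line, here is a minimal Python sketch (not part of my actual pipeline) that parses the sample above and reports the JSON type of each top-level field. The helper name `field_types` is just something I made up for illustration.

```python
import json

# Sample log line copied verbatim from the question.
sample = (
    '{"message":"ResponseDetails","@version":"1",'
    '"@timestamp":"2015-05-23T03:18:51.782Z","type":"myGateway",'
    '"file":"/tmp/myGatewayy.logstash","host":"localhost","offset":"1072",'
    '"data":"text/javascript","statusCode":200,'
    '"correlationId":"a017db4ebf411edd3a79c6f86a3c0c2f",'
    '"docType":"myGateway","level":"info",'
    '"timestamp":"2015-05-23T03:15:58.796Z"}'
)


def field_types(line):
    """Map each top-level field name to the Python type name of its value."""
    return {key: type(value).__name__ for key, value in json.loads(line).items()}


types = field_types(sample)
for name in ("statusCode", "correlationId"):
    print(name, types[name])
```

The line parses cleanly: `statusCode` is a number and `correlationId` is a string, so the document itself looks fine.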

Fields like 'statusCode' and 'correlationId' are not getting indexed. Any reason why?

Do I need to give ES a mapping file to tell it to index either all fields or a given set of fields?
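In case it helps with diagnosing this, I assume the mapping ES has created can be inspected through its `_mapping` endpoint. Below is a rough Python sketch of that check. The host `http://localhost:9200` and the index name `logstash-2015.05.23` are assumptions (the default ES port and the default Logstash daily index naming), and `mapping_url` / `fetch_mapping` are hypothetical helper names.

```python
import json
import urllib.request


def mapping_url(index, host="http://localhost:9200"):
    """Build the URL of the get-mapping endpoint for one index.

    The default host is an assumption; adjust it to the real ES address.
    """
    return f"{host.rstrip('/')}/{index}/_mapping"


def fetch_mapping(index, host="http://localhost:9200"):
    """Fetch and decode the mapping for an index (needs a reachable ES node)."""
    with urllib.request.urlopen(mapping_url(index, host)) as response:
        return json.load(response)


# Assumed index name, based on the date in the sample log line.
print(mapping_url("logstash-2015.05.23"))
```

Calling `fetch_mapping("logstash-2015.05.23")` against a running node should return the field mappings, which would show whether 'statusCode' and 'correlationId' are present there.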