The wording you refer to looks like that which I often use. The specification says this though:

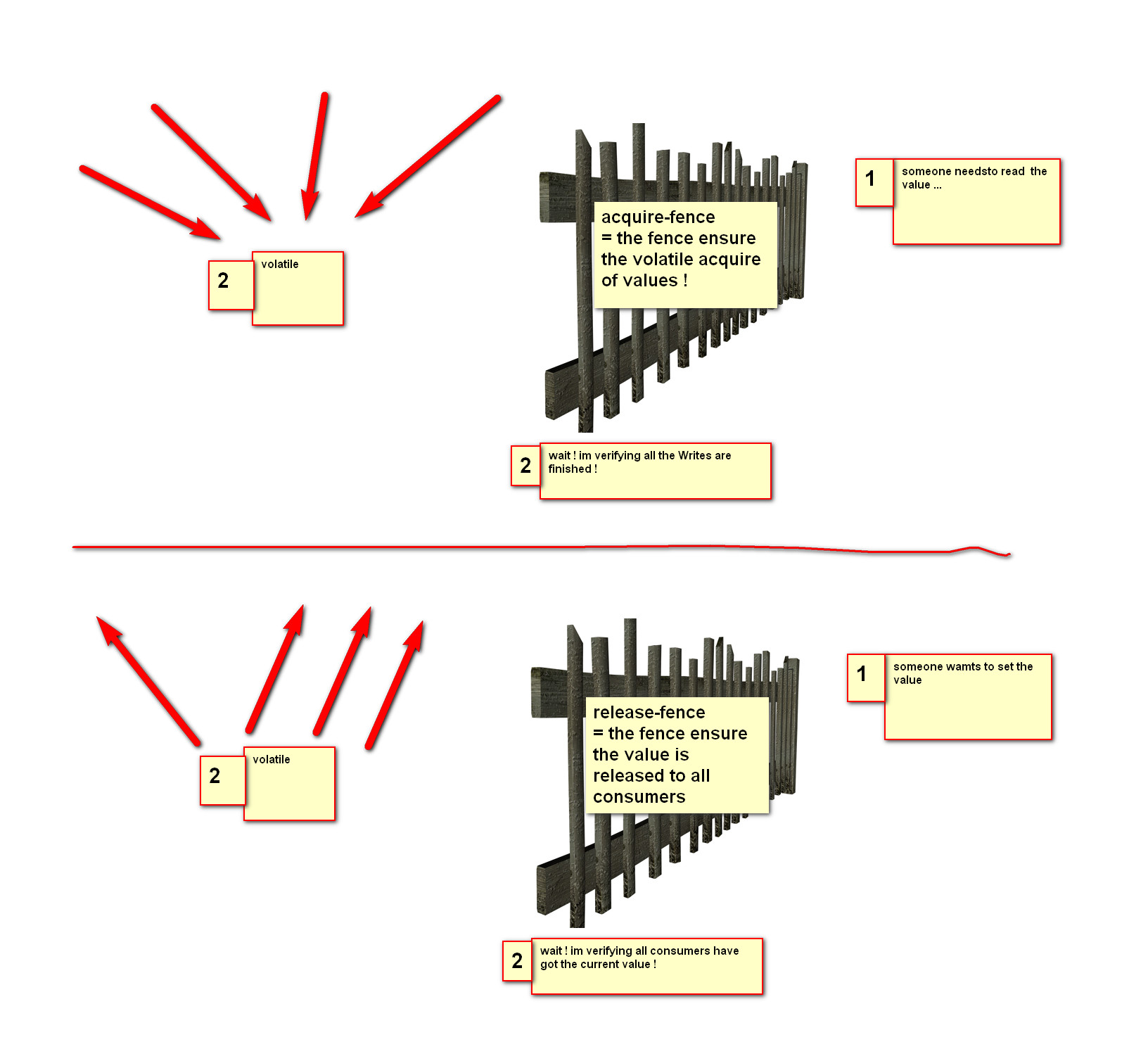

- A read of a volatile field is called a volatile read. A volatile read has "acquire semantics"; that is, it is guaranteed to occur prior to any references to memory that occur after it in the instruction sequence.

- A write of a volatile field is called a volatile write. A volatile write has "release semantics"; that is, it is guaranteed to happen after any memory references prior to the write instruction in the instruction sequence.

But, I usually use the wording you cited in your question because I want to put the focus on the fact that instructions can be moved. The wording you cited and the specification are equivalent.

I am going to present several examples. In these examples I am going to use a special notation that uses an ↑ arrow to indicate a release-fence and a ↓ arrow to indicate an acquire-fence. No other instruction is allowed to float down past an ↑ arrow or up past an ↓ arrow. Think of the arrow head as repelling everything away from it.

Consider the following code.

static int x = 0;

static int y = 0;

static void Main()

{

x++;

y++;

}

Rewriting it to show the individual instructions would look like this.

static void Main()

{

read x into register1

increment register1

write register1 into x

read y into register1

increment register1

write register1 into y

}

Because there are no memory barriers in this example, the C# compiler, the JIT compiler, or the hardware is free to optimize it in many different ways, as long as the logical sequence perceived by the executing thread is consistent with the physical sequence. Here is one such optimization. Notice how the reads and writes of x and y got swapped.

static void Main()

{

read y into register1

read x into register2

increment register1

increment register2

write register1 into y

write register2 into x

}

This time I will change those variables to volatile and use our arrow notation to mark the memory barriers. Notice how the order of the reads and writes of x and y is preserved. This is because instructions cannot move past our barriers (denoted by the ↓ and ↑ arrow heads). Now, this is important. Notice that the increment and write of x were still allowed to float down, and the read of y was still allowed to float up. This is still valid because we are using half fences.

static volatile int x = 0;

static volatile int y = 0;

static void Main()

{

read x into register1

↓ // volatile read

read y into register2

↓ // volatile read

increment register1

increment register2

↑ // volatile write

write register1 into x

↑ // volatile write

write register2 into y

}
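The same sequence can be spelled out in C# with explicit calls instead of the volatile keyword. This is only a sketch: the class name `VolatileIncrements` and the method `Run` are made up for illustration, and it assumes .NET 4.5 or later, where `System.Threading.Volatile` is available. The comments map each call back to the arrow notation.

```csharp
using System.Threading;

static class VolatileIncrements   // hypothetical name, for illustration only
{
    // Plain fields; the half fences come from the Volatile calls below.
    static int x = 0;
    static int y = 0;

    public static (int X, int Y) Run()
    {
        int register1 = Volatile.Read(ref x);   // read x, then ↓ (acquire)
        int register2 = Volatile.Read(ref y);   // read y, then ↓ (acquire)

        register1++;                            // the increments sit between the fences
        register2++;

        Volatile.Write(ref x, register1);       // ↑ (release), then write x
        Volatile.Write(ref y, register2);       // ↑ (release), then write y

        return (x, y);
    }
}
```

The first call to `VolatileIncrements.Run()` on fresh state leaves both fields at 1. The point is not the result but the position of the fences relative to each read and write.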

This is a very trivial example. Look at my answer here for a non-trivial example of how volatile can make a difference in the double-checked pattern. I use the same arrow notation I used here to make it easy to visualize what is happening.
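For readers who have not seen the double-checked locking pattern, here is a minimal sketch of its usual shape. It is not the code from the linked answer; the type name `Settings`, its `Value` property, and the `gate` lock object are assumptions made for illustration.

```csharp
public sealed class Settings   // hypothetical type, for illustration only
{
    // volatile: reads get an acquire fence, writes get a release fence.
    private static volatile Settings instance;
    private static readonly object gate = new object();

    public int Value { get; }

    private Settings()
    {
        Value = 42;   // state that must be complete before publication
    }

    public static Settings Instance
    {
        get
        {
            if (instance == null)                  // first check: volatile read, then ↓
            {
                lock (gate)
                {
                    if (instance == null)          // second check, under the lock
                    {
                        instance = new Settings(); // ↑, then volatile write
                    }
                }
            }
            return instance;
        }
    }
}
```

The release fence on the write to `instance` is what keeps the constructor's writes from floating down past the point where the reference becomes visible to other threads.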

Now, we also have the Thread.MemoryBarrier method to work with. It generates a full fence. We can use our arrow notation to visualize how that works as well.

Consider this example.

static int x = 0;

static int y = 0;

static void Main()

{

x++;

Thread.MemoryBarrier();

y++;

}

Shown as individual instructions, as before, it looks like this. Notice that instruction movement is prevented altogether now. There is really no other way this can get executed without compromising the logical sequence of the instructions.

static void Main()

{

read x into register1

increment register1

write register1 into x

↑ // Thread.MemoryBarrier

↓ // Thread.MemoryBarrier

read y into register1

increment register1

write register1 into y

}
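One place where the full fence matters is a write followed by a read of a different variable. Under the arrow rules above, half fences alone would still let that pair swap (the write may float down and the read may float up), whereas a full fence between them pins both. The sketch below is an illustration under assumptions: the class name `StoreLoadDemo` and the method `CountBothZero` are invented for this example, and it uses `Parallel.Invoke` only as a convenient way to run the two halves concurrently.

```csharp
using System.Threading;
using System.Threading.Tasks;

static class StoreLoadDemo   // hypothetical name, for illustration only
{
    static int x, y;     // shared flags
    static int r1, r2;   // what each side observed

    // Returns how many runs ended with both sides reading 0.
    public static int CountBothZero(int iterations)
    {
        int bothZero = 0;
        for (int i = 0; i < iterations; i++)
        {
            x = 0;
            y = 0;
            Parallel.Invoke(
                () => { x = 1; Thread.MemoryBarrier(); r1 = y; },  // write x, full fence, read y
                () => { y = 1; Thread.MemoryBarrier(); r2 = x; }); // write y, full fence, read x
            if (r1 == 0 && r2 == 0)
            {
                bothZero++;
            }
        }
        return bothZero;
    }
}
```

With the fences in place, each write is ordered before the read that follows it, so at least one side must observe the other's write and the count stays at 0. Removing the two `Thread.MemoryBarrier()` calls removes that guarantee, although whether a reordering actually shows up in a given run depends on the hardware and the JIT.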

Okay, one more example. This time let us use VB.NET. VB.NET does not have the volatile keyword. So how can we mimic a volatile read in VB.NET? We will use Thread.MemoryBarrier.¹

Public Function VolatileRead(ByRef address as Integer) as Integer

Dim local = address

Thread.MemoryBarrier()

Return local

End Function

And this is what it looks like with our arrow notation.

Public Function VolatileRead(ByRef address as Integer) as Integer

read address into register1

↑ // Thread.MemoryBarrier

↓ // Thread.MemoryBarrier

return register1

End Function

It is important to note that since we want to mimic a volatile read the call to Thread.MemoryBarrier must be placed after the actual read. Do not fall into the trap of thinking that a volatile read means a "fresh read" and a volatile write means a "committed write". That is not how it works and it certainly is not what the specification describes.
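The same placement rule can be written in C# for both directions. This is a sketch, with `FenceHelpers` as a made-up class name. The read mirrors the VB.NET function above. The write is my extrapolation from the release semantics quoted from the specification: the fence goes before the write so that earlier memory references cannot float down past it. A full fence is stronger than either half fence requires, but it is sufficient.

```csharp
using System.Threading;

static class FenceHelpers   // hypothetical name, for illustration only
{
    public static int VolatileRead(ref int address)
    {
        int local = address;      // the read itself
        Thread.MemoryBarrier();   // fence AFTER the read: later accesses cannot float up past it
        return local;
    }

    public static void VolatileWrite(ref int address, int value)
    {
        Thread.MemoryBarrier();   // fence BEFORE the write: earlier accesses cannot float down past it
        address = value;          // the write itself
    }
}
```

Usage is the same as for any by-reference helper: `FenceHelpers.VolatileWrite(ref field, 7);` followed by `int v = FenceHelpers.VolatileRead(ref field);`.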

Update:

In reference to the image.

wait! I am verifying that all the Writes are finished!

and

wait! I am verifying that all the consumers have got the current value!

This is the trap I was talking about. The statements are not completely accurate. Yes, a memory barrier implemented at the hardware level may synchronize the cache coherency lines, and as a result the statements above may be a somewhat accurate account of what happens. But volatile does nothing more than restrict the movement of instructions. The specification says nothing about loading a value from memory or storing it to memory at the spot where the memory barrier is placed.
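What the ordering restriction does give you is safe publication: if a reader sees the flag, it also sees everything written before the flag. The sketch below illustrates that under assumptions; the class name `Publication`, the fields `data` and `ready`, and the value 42 are all invented for this example.

```csharp
using System.Threading;

static class Publication   // hypothetical name, for illustration only
{
    static int data;              // ordinary, non-volatile field
    static volatile bool ready;   // volatile flag

    public static int Run()
    {
        data = 0;
        ready = false;
        int observed = -1;

        var consumer = new Thread(() =>
        {
            while (!ready)        // volatile read, then ↓
            {
                Thread.Yield();   // give up the time slice while waiting
            }
            observed = data;      // cannot float up above the read of ready
        });
        consumer.Start();

        data = 42;                // cannot float down below the write of ready
        ready = true;             // ↑, then volatile write

        consumer.Join();
        return observed;
    }
}
```

`Publication.Run()` returns 42 because the write to `data` is ordered before the write to `ready`, and the read of `data` is ordered after the read of `ready`. Nothing here relies on a "fresh read" or a "committed write"; only the relative order of the instructions is constrained.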

¹ There is, of course, the Thread.VolatileRead built-in already. And you will notice that it is implemented exactly as I have done here.