I could not understand belady anomaly even after reading the Wikipedia article and accepted answer. After writing the trace I kind of got it. Here I’d like to share my understanding.

Keys to understanding belady anomaly.

- Unlike LRU, FIFO just pushes out the oldest elements regardless of

frequency. So staying in FIFO longer means falling victim to

eviction.

From here, I refer to 3 and 4 pages FIFO queue as FIFO3 and FIFO4.

To understand Wikipedia example, I divided it into 2 parts. When FIFO3 catches up with FIFO4 and when FIFO3 overtakes FIFO4.

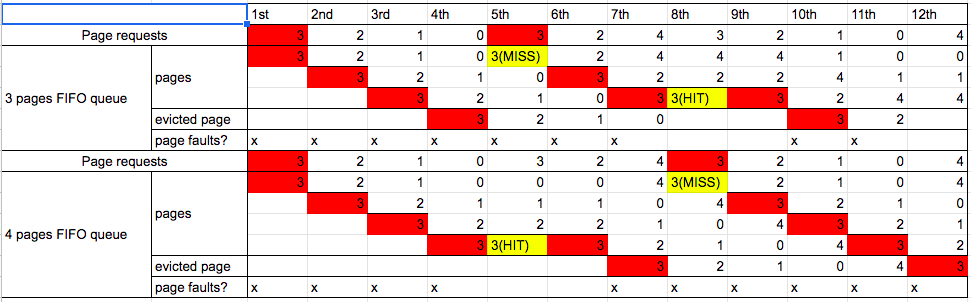

How FIFO3 catch up with FIFO4 on 9th

![enter image description here]()

Look at 3 in both FIFOs. In FIFO3, 3 is evicted on 4th and came back on 5th. So it was still there on 8th and cache hit happened.

In FIFO4, 3 is HIT on 5th, but this cache hit made 3 stays longer and evicted on 7th, right before next 3 on 8th.

2 is the same as 3. Second 2(6th) is MISS on FIFO3 and HIT on FIFO4, but third 2(9th) is HIT on FIFO3, and MISS on FIFO4.

It might help to think like this. On FIFO3, 3 was updated on 5th so stayed longer until 8th. On FIFO4 3 was old and evicted on 7th, right before next 3 comes.

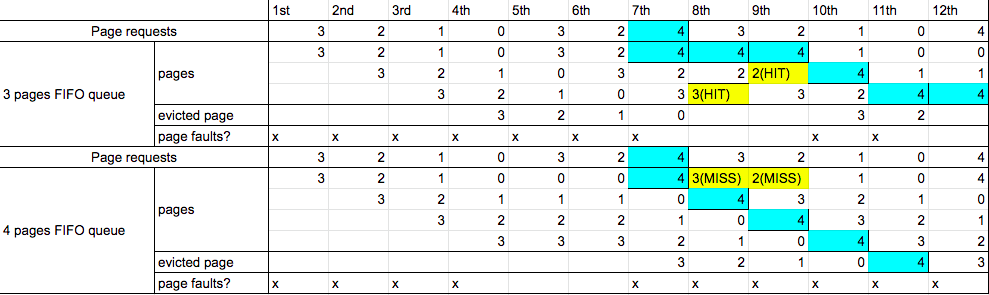

How FIFO3 overtakes FIFO4

![enter image description here]()

Because there are 2 cache misses on 8, 9th for FIFO4, 4 is pushed down and evicted on 11th in FIFO4.

FIFO3 still retains 4 on 12th because there are cache hit on 8, 9th, so 4 was not pushed down.

I think this is why Wikipedia's aritcle says "Penny Wise, Pound Foolish"

Conclusion

FIFO is a simple and naive algorithm that does not take frequency into account.

You might get a better understanding by applying LRU(Least Recently Used) to Wikipedia’s example. In LRU, 4 is better than 3.