I am trying to implement a sequence-to-sequence task with LSTMs in Keras, using the TensorFlow backend. The inputs are English sentences of variable length. To construct a dataset with 2-D shape [batch_number, max_sentence_length], I append EOF to the end of each line and pad each sentence with enough placeholder characters, e.g. #. Each character in the sentence is then transformed into a one-hot vector, so that the dataset has 3-D shape [batch_number, max_sentence_length, character_number]. After the LSTM encoder and decoder layers, the softmax cross-entropy between output and target is computed.
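The preprocessing described above can be sketched in NumPy roughly as follows. This is only a minimal illustration, not my actual pipeline: the vocabulary, the `encode_batch` helper, and right-padding are assumptions made for the example, and the EOF marker is left out for brevity.

```python
import numpy as np

# Hypothetical vocabulary: two valid characters plus the placeholder '#'.
VOCAB = ['a', 'b', '#']
CHAR_TO_INDEX = {ch: i for i, ch in enumerate(VOCAB)}

def encode_batch(sentences, pad_char='#'):
    """Pad sentences to a common length and one-hot encode each character."""
    max_len = max(len(s) for s in sentences)
    # Right-pad every sentence with the placeholder (an assumption of this sketch).
    padded = [s + pad_char * (max_len - len(s)) for s in sentences]
    # 2-D index array of shape [batch, max_len].
    indices = np.array([[CHAR_TO_INDEX[ch] for ch in s] for s in padded])
    # 3-D one-hot array of shape [batch, max_len, len(VOCAB)].
    one_hot = np.eye(len(VOCAB), dtype=np.float32)[indices]
    return indices, one_hot

indices, one_hot = encode_batch(['abb', 'babb'])
print(indices.shape, one_hot.shape)
```

Indexing an identity matrix with the index array is just a compact way to build the one-hot tensor; any equivalent encoding would do.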

To eliminate the effect of padding on training, masking can be applied to both the input and the loss function. In Keras, the input can be masked with layers.core.Masking. In TensorFlow, the loss function can be masked as described in: custom masked loss function in TensorFlow.

However, I can't find a way to do this in Keras, since a user-defined loss function in Keras only accepts the parameters y_true and y_pred. So how can I pass the true sequence_lengths to the loss function and apply the mask?
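One workaround I can imagine, sketched here in NumPy rather than with Keras backend ops, is to recover the mask from `y_true` itself, since padded positions carry the placeholder class. The function name `masked_crossentropy` and the `pad_index` and `eps` parameters are assumptions made for this illustration; a Keras loss would need the same logic written with backend tensor operations.

```python
import numpy as np

def masked_crossentropy(y_true, y_pred, pad_index=2, eps=1e-7):
    """Mean cross-entropy over timesteps whose target is not the pad class.

    y_true, y_pred: arrays of shape [batch, time, classes];
    y_true is one-hot, y_pred holds probabilities.
    """
    # 1.0 where the target is a real character, 0.0 where it is padding.
    mask = 1.0 - y_true[..., pad_index]
    # Per-timestep cross-entropy, shape [batch, time].
    losses = -np.sum(y_true * np.log(np.clip(y_pred, eps, 1.0)), axis=-1)
    # Average over unmasked timesteps only (assumes at least one exists).
    return np.sum(losses * mask) / np.sum(mask)

# Toy batch with one sequence '#ab': the first timestep is padding.
y_true = np.array([[[0, 0, 1], [1, 0, 0], [0, 1, 0]]], dtype=np.float32)
y_pred = np.array([[[0.8, 0.1, 0.1], [0.5, 0.25, 0.25], [0.25, 0.5, 0.25]]],
                  dtype=np.float32)
print(masked_crossentropy(y_true, y_pred))
```

This keeps the two-argument signature, but it only works when padding can be identified from the targets alone.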

Besides, I found a function _weighted_masked_objective(fn) in \keras\engine\training.py. Its docstring reads:

Adds support for masking and sample-weighting to an objective function.

But it seems that the function can only accept fn(y_true, y_pred). Is there a way to use this function to solve my problem?
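For reference, the general idea behind such a wrapper can be sketched in NumPy as below. This is a simplified illustration of the pattern, not the Keras source: `weighted_masked_objective`, `crossentropy`, and their signatures are hypothetical, and the real function operates on backend tensors and may normalize differently.

```python
import numpy as np

def weighted_masked_objective(fn):
    """Wrap fn(y_true, y_pred) so it also accepts a mask and weights."""
    def weighted(y_true, y_pred, weights=None, mask=None):
        # Per-timestep scores, shape [batch, time].
        scores = fn(y_true, y_pred)
        if mask is not None:
            mask = mask.astype(scores.dtype)
            # Zero out masked timesteps and rescale so the final mean
            # is effectively taken over unmasked timesteps only.
            scores = scores * mask / np.mean(mask)
        if weights is not None:
            # weights must broadcast against the [batch, time] scores.
            scores = scores * weights
        return np.mean(scores)
    return weighted

def crossentropy(y_true, y_pred):
    # Plain two-argument objective, as a user-defined loss would be.
    return -np.sum(y_true * np.log(y_pred), axis=-1)

loss_fn = weighted_masked_objective(crossentropy)

y_true = np.array([[[0, 0, 1], [1, 0, 0], [0, 1, 0]]], dtype=np.float32)
y_pred = np.array([[[0.8, 0.1, 0.1], [0.5, 0.25, 0.25], [0.25, 0.5, 0.25]]],
                  dtype=np.float32)
mask = np.array([[False, True, True]])
print(loss_fn(y_true, y_pred, mask=mask))
```

In this sketch the mask is supplied by the caller of the wrapped function, not by the user-defined `fn`, which still only sees `y_true` and `y_pred`.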

To be specific, I modified Yu-Yang's example:

    from keras.models import Model
    from keras.layers import Input, Masking, LSTM, Dense, RepeatVector, TimeDistributed, Activation
    import numpy as np
    from numpy.random import seed as random_seed
    random_seed(123)

    max_sentence_length = 5
    character_number = 3  # valid characters 'a', 'b' and placeholder '#'

    input_tensor = Input(shape=(max_sentence_length, character_number))
    masked_input = Masking(mask_value=0)(input_tensor)
    encoder_output = LSTM(10, return_sequences=False)(masked_input)
    repeat_output = RepeatVector(max_sentence_length)(encoder_output)
    decoder_output = LSTM(10, return_sequences=True)(repeat_output)
    output = Dense(3, activation='softmax')(decoder_output)

    model = Model(input_tensor, output)
    model.compile(loss='categorical_crossentropy', optimizer='adam')
    model.summary()

    # the batch is ['##abb', '#babb'], padded with '#'
    X = np.array([[[0, 0, 0], [0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0]],
                  [[0, 0, 0], [0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0]]])
    y_true = np.array([[[0, 0, 1], [0, 0, 1], [1, 0, 0], [0, 1, 0], [0, 1, 0]],
                       [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0]]])

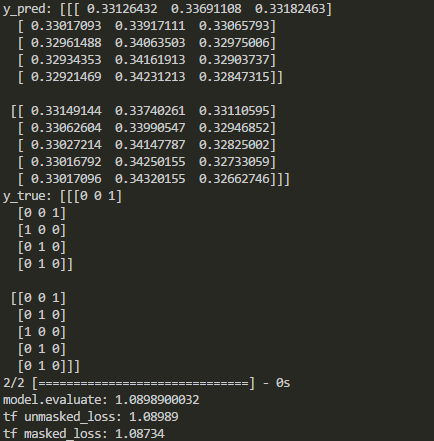

    y_pred = model.predict(X)
    print('y_pred:', y_pred)
    print('y_true:', y_true)
    print('model.evaluate:', model.evaluate(X, y_true))

    # See if the loss computed by model.evaluate() is equal to the masked loss
    import tensorflow as tf

    # y_pred holds softmax outputs (probabilities), despite the name "logits"
    logits = tf.constant(y_pred, dtype=tf.float32)
    target = tf.constant(y_true, dtype=tf.float32)

    # per-timestep cross-entropy, shape [batch, time]
    losses = -tf.reduce_sum(target * tf.log(logits), axis=2)
    cross_entropy = tf.reduce_mean(losses)

    sequence_lengths = tf.constant([3, 4])
    # the sequences are left-padded, so flip the mask along the time axis only;
    # reversing axis 0 as well would swap the masks of the two samples
    mask = tf.reverse(tf.sequence_mask(sequence_lengths, maxlen=max_sentence_length), [1])
    masked_losses = tf.boolean_mask(losses, mask)
    masked_loss = tf.reduce_mean(masked_losses)

    with tf.Session() as sess:
        c_e = sess.run(cross_entropy)
        m_c_e = sess.run(masked_loss)
        print("tf unmasked_loss:", c_e)
        print("tf masked_loss:", m_c_e)

The outputs from Keras and TensorFlow are compared as follows:

As shown above, masking is disabled after certain kinds of layers. So how can I mask the loss function in Keras when such layers are present?