I have Celery tasks that are received but will not execute. I am using Python 2.7 and Celery 4.0.2. My message broker is Amazon SQS.

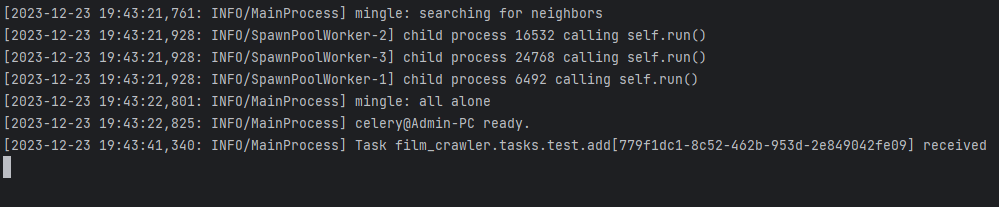

This is the output of celery worker:

    $ celery worker -A myapp.celeryapp --loglevel=INFO

    [tasks]
      . myapp.tasks.trigger_build

    [2017-01-12 23:34:25,206: INFO/MainProcess] Connected to sqs://13245:**@localhost//
    [2017-01-12 23:34:25,391: INFO/MainProcess] celery@ip-111-11-11-11 ready.
    [2017-01-12 23:34:27,700: INFO/MainProcess] Received task: myapp.tasks.trigger_build[b248771c-6dd5-469d-bc53-eaf63c4f6b60]

I have tried adding -Ofair when running celery worker but that did not help. Some other info that might be helpful:

- Celery always receives 8 tasks, although there are about 100 messages waiting to be picked up.

- About once in every 4 or 5 times a task actually will run and complete, but then it gets stuck again.

- This is the result of ps aux. Notice that it is running celery in 3 different processes (not sure why), and one of them has 99.6% CPU utilization even though it is not completing any tasks.

Processes:

    $ ps aux | grep celery
    nobody 7034 99.6 1.8 382688 74048 ? R 05:22 18:19 python2.7 celery worker -A myapp.celeryapp --loglevel=INFO
    nobody 7039 0.0 1.3 246672 55664 ? S 05:22 0:00 python2.7 celery worker -A myapp.celeryapp --loglevel=INFO
    nobody 7040 0.0 1.3 246672 55632 ? S 05:22 0:00 python2.7 celery worker -A myapp.celeryapp --loglevel=INFO

Settings:

    CELERY_BROKER_URL = 'sqs://%s:%s@' % (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY.replace('/', '%2F'))
    CELERY_BROKER_TRANSPORT = 'sqs'
    CELERY_BROKER_TRANSPORT_OPTIONS = {
        'region': 'us-east-1',
        'visibility_timeout': 60 * 30,
        'polling_interval': 0.3,
        'queue_name_prefix': 'myapp-',
    }
    CELERY_BROKER_HEARTBEAT = 0
    CELERY_BROKER_POOL_LIMIT = 1
    CELERY_BROKER_CONNECTION_TIMEOUT = 10

    CELERY_DEFAULT_QUEUE = 'myapp'
    CELERY_QUEUES = (
        Queue('myapp', Exchange('default'), routing_key='default'),
    )

    CELERY_ALWAYS_EAGER = False
    CELERY_ACKS_LATE = True
    CELERY_TASK_PUBLISH_RETRY = True
    CELERY_DISABLE_RATE_LIMITS = False

    CELERY_IGNORE_RESULT = True
    CELERY_SEND_TASK_ERROR_EMAILS = False
    CELERY_TASK_RESULT_EXPIRES = 600
    CELERY_RESULT_BACKEND = 'django-db'
    CELERY_TIMEZONE = TIME_ZONE
    CELERY_TASK_SERIALIZER = 'json'
    CELERY_ACCEPT_CONTENT = ['application/json']

    CELERYD_PID_FILE = "/var/celery_%N.pid"
    CELERYD_HIJACK_ROOT_LOGGER = False
    CELERYD_PREFETCH_MULTIPLIER = 1
    CELERYD_MAX_TASKS_PER_CHILD = 1000
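For reference, the CELERY_BROKER_URL setting above only escapes `/` in the secret key. A minimal sketch of the same URL construction with full percent-encoding is shown here, in case the escaping matters. The helper name `build_sqs_broker_url` and the credentials in the usage line are hypothetical placeholders, not part of my actual settings, and I have not confirmed that this is related to the stuck tasks.

```python
try:
    # Python 3
    from urllib.parse import quote
except ImportError:
    # Python 2.7
    from urllib import quote


def build_sqs_broker_url(access_key_id, secret_access_key):
    """Build an sqs:// broker URL with both credentials percent-encoded.

    Hypothetical helper for illustration. safe='' makes quote() encode
    '/' as well, so characters such as '/' and '+' in the secret key
    cannot be misread as URL delimiters.
    """
    return 'sqs://%s:%s@' % (
        quote(access_key_id, safe=''),
        quote(secret_access_key, safe=''),
    )


# Placeholder credentials, for illustration only.
example_url = build_sqs_broker_url('AKIAEXAMPLE', 'abc/def+ghi')
```

With the settings above, this would be used as `CELERY_BROKER_URL = build_sqs_broker_url(AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY)`.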

Report:

    $ celery report -A myapp.celeryapp

    software -> celery:4.0.2 (latentcall) kombu:4.0.2 py:2.7.12
                billiard:3.5.0.2 sqs:N/A
    platform -> system:Linux arch:64bit, ELF imp:CPython
    loader   -> celery.loaders.app.AppLoader
    settings -> transport:sqs results:django-db