I'm running several text classification experiments and need to calculate the AUC-ROC for each task. For the binary classification tasks, I already got it working with this code:

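    from sklearn import linear_model, model_selection
    from sklearn.feature_extraction import DictVectorizer
    from sklearn.feature_selection import SelectKBest, mutual_info_classif
    from sklearn.pipeline import Pipeline
    from sklearn.preprocessing import LabelEncoder, StandardScaler

    # Encode the string labels as integers
    enc = LabelEncoder()
    y = enc.fit_transform(labels)

    scaler = StandardScaler(with_mean=False)  # with_mean=False keeps the matrix sparse
    feat_sel = SelectKBest(mutual_info_classif, k=200)
    clf = linear_model.LogisticRegression()

    # instances is a list of dictionaries
    pipe = Pipeline([('vectorizer', DictVectorizer()),
                     ('scaler', scaler),
                     ('mutual_info', feat_sel),
                     ('logistregress', clf)])

    # Out-of-fold probability of the positive class; hard 0/1 predictions
    # would give a ROC curve with only a single threshold
    y_pred = model_selection.cross_val_predict(
        pipe, instances, y, cv=10, method='predict_proba')[:, 1]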
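Since `instances` is a list of dictionaries, here is a minimal sketch of what `DictVectorizer` does with that kind of input. The two feature dictionaries and their keys (`len`, `first_word`) are made up for illustration and are not my real data.

```python
from sklearn.feature_extraction import DictVectorizer

# Hypothetical feature dictionaries, one per document (illustrative only).
toy_instances = [
    {'len': 3, 'first_word': 'hello'},
    {'len': 5, 'first_word': 'bye'},
]

vec = DictVectorizer()
X_toy = vec.fit_transform(toy_instances)  # SciPy sparse matrix

# Numeric values are kept as-is; string values are one-hot encoded
# as 'key=value' columns. Column names are sorted alphabetically.
print(vec.feature_names_)
print(X_toy.toarray())
```

Each dictionary becomes one row, which is why the scaler after it in the pipeline has to work on sparse input.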

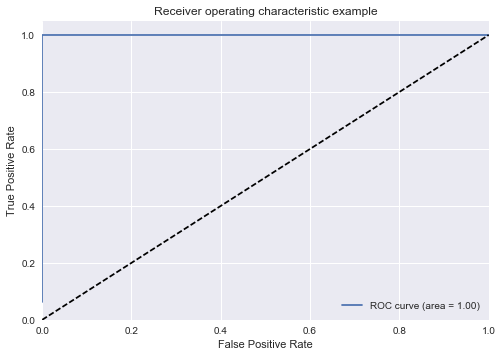

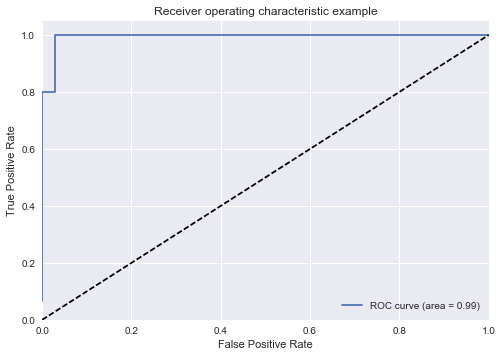

    import matplotlib.pyplot as plt
    from sklearn.metrics import auc, roc_curve

    # Visualisation of ROC-AUC
    fpr, tpr, thresholds = roc_curve(y, y_pred)
    roc_auc = auc(fpr, tpr)  # separate name so the auc() function is not overwritten
    print('auc =', roc_auc)

    plt.figure()
    plt.title('Receiver Operating Characteristic')
    plt.plot(fpr, tpr, 'b', label='AUC = %0.2f' % roc_auc)
    plt.legend(loc='lower right')
    plt.plot([0, 1], [0, 1], 'r--')
    plt.xlim([-0.1, 1.2])
    plt.ylim([-0.1, 1.2])
    plt.ylabel('True Positive Rate')
    plt.xlabel('False Positive Rate')
    plt.show()

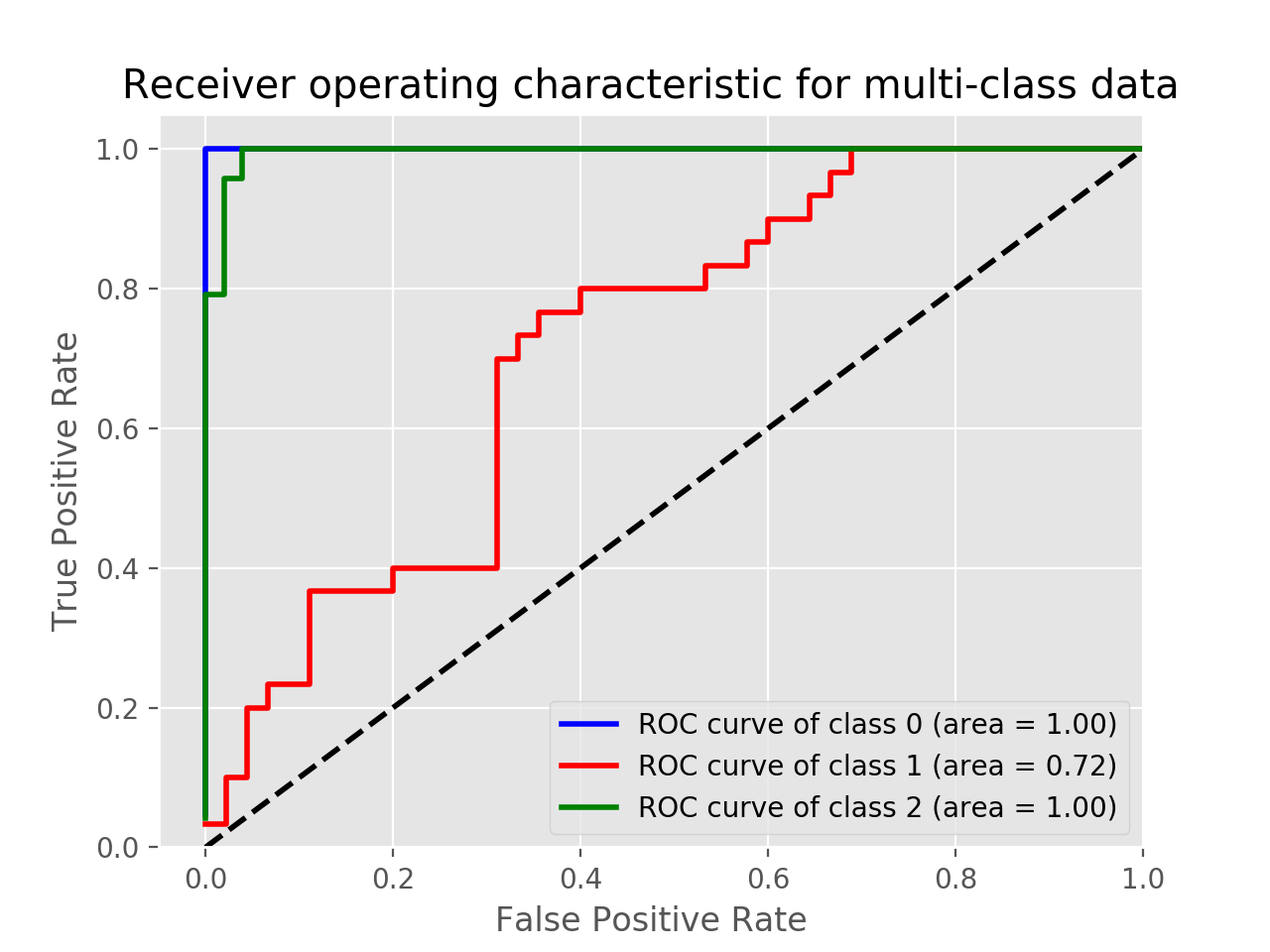

Now I need to do the same for the multiclass classification task. I read somewhere that I need to binarize the labels, but I don't understand how to calculate ROC for multiclass classification. Any tips?
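For reference, the label binarization mentioned above is usually applied one-vs-rest: each class in turn is treated as the positive class and its predicted probability is used as the score. Below is a minimal sketch of that idea, not a definitive solution. It assumes synthetic 3-class data from `make_classification` and a plain `LogisticRegression` in place of my real features and pipeline.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import auc, roc_auc_score, roc_curve
from sklearn.model_selection import cross_val_predict
from sklearn.preprocessing import label_binarize

# Synthetic 3-class data standing in for the real features and labels (assumption).
X, y = make_classification(n_samples=300, n_features=20, n_informative=5,
                           n_classes=3, random_state=0)
classes = np.unique(y)

# Out-of-fold class probabilities, shape (n_samples, n_classes).
clf = LogisticRegression(max_iter=1000)
y_score = cross_val_predict(clf, X, y, cv=5, method='predict_proba')

# One indicator column per class: column i is 1 where y == classes[i].
y_bin = label_binarize(y, classes=classes)

# One-vs-rest ROC curve and AUC for each class.
roc_auc = {}
for i, label in enumerate(classes):
    fpr, tpr, _ = roc_curve(y_bin[:, i], y_score[:, i])
    roc_auc[label] = auc(fpr, tpr)
    print('class', label, 'AUC =', round(roc_auc[label], 3))

# Micro-average: pool all (sample, class) decisions into one binary problem.
fpr_micro, tpr_micro, _ = roc_curve(y_bin.ravel(), y_score.ravel())
micro_auc = auc(fpr_micro, tpr_micro)
print('micro-average AUC =', round(micro_auc, 3))

# Macro-average: unweighted mean of the per-class AUCs.
macro_auc = roc_auc_score(y, y_score, multi_class='ovr', average='macro')
print('macro-average AUC =', round(macro_auc, 3))
```

Each per-class `fpr`/`tpr` pair can be drawn with `plt.plot` exactly as in the binary case, giving one curve per class. Whether the micro- or macro-average is the better summary probably depends on how imbalanced the classes are.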
