The number of filters is the number of neurons, since each neuron performs a different convolution on the input to the layer (more precisely, the neurons' input weights form convolution kernels).

A feature map is the result of applying a filter (thus, you have as many feature maps as filters), and its size is a result of window/kernel size of your filter and stride.

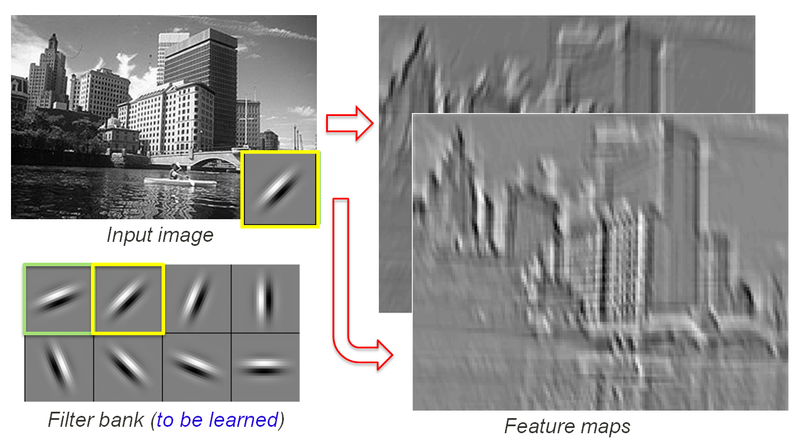

The following image was the best I could find to explain the concept at high level:

![enter image description here]() Note that 2 different convolutional filters are applied to the input image, resulting in 2 different feature maps (the output of the filters). Each pixel of each feature map is an output of the convolutional layer.

Note that 2 different convolutional filters are applied to the input image, resulting in 2 different feature maps (the output of the filters). Each pixel of each feature map is an output of the convolutional layer.

For instance, if you have 28x28 input images and a convolutional layer with 20 7x7 filters and stride 1, you will get 20 22x22 feature maps at the output of this layer. Note that this is presented to the next layer as a volume with width = height = 22 and depth = num_channels = 20. You could use the same representation to train your CNN on RGB images such as the ones from the CIFAR10 dataset, which would be 32x32x3 volumes (convolution is applied only to the 2 spatial dimensions).

EDIT: There seems to be some confusion going on in the comments that I'd like to clarify. First, there are no neurons. Neurons are just a metaphor in neural networks. That said, "how many neurons are there in a convolutional layer" cannot be answered objectively, but relative to your view of the computations performed by the layer. In my view, a filter is a single neuron that sweeps through the image, providing different activations for each position. An entire feature map is produced by a single neuron/filter at multiple positions in my view. The commentors seem to have another view that is as valid as mine. They see each filter as a set of weights for a convolution operation, and one neuron for each attended position in the image, all sharing the same set of weights defined by the filter. Note that both views are functionally (and even fundamentally) the same, as they use the same parameters, computations, and produce the same results. Therefore, this is a non-issue.