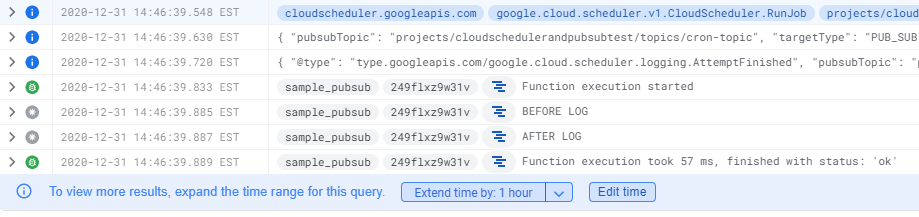

I'm experimenting with GCP's cloud functions and python for the first time and wanted to get python's logging integrated sufficiently so that they fit well with GCP's logging infrastructure (specifically so that severity levels are recognized, and ideally execution_ids and trace ids also are included.

I've been following https://cloud.google.com/logging/docs/setup/python to get this set up.

My code:

import base64

import logging

import google.cloud.logging

client = google.cloud.logging.Client()

client.get_default_handler()

client.setup_logging()

logging.getLogger().setLevel(logging.DEBUG)

def sample_pubsub(event, context):

pubsub_message = base64.b64decode(event['data']).decode('utf-8')

print('BEFORE LOG')

logging.info(f'Event received: payload data == [{pubsub_message}]')

logging.debug('This is debug')

logging.warn('This should be a warning')

logging.error('This should be an error')

print('AFTER LOG')

When I run this locally using the function-framework this works perfectly (as far as I can tell) outputting like so to the console:

{"message": " * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)", "timestamp": {"seconds": 1609443581, "nanos": 119384527}, "thread": 140519851886400, "severity": "INFO"}

{"message": " * Restarting with inotify reloader", "timestamp": {"seconds": 1609443581, "nanos": 149804115}, "thread": 140519851886400, "severity": "INFO"}

{"message": " * Debugger is active!", "timestamp": {"seconds": 1609443584, "nanos": 529310703}, "thread": 140233360983872, "severity": "WARNING"}

{"message": " * Debugger PIN: 327-539-151", "timestamp": {"seconds": 1609443584, "nanos": 533129930}, "thread": 140233360983872, "severity": "INFO"}

BEFORE LOG

{"message": "Event received: payload data == []", "timestamp": {"seconds": 1609443585, "nanos": 77324390}, "thread": 140232720623360, "severity": "INFO"}

{"message": "This is debug", "timestamp": {"seconds": 1609443585, "nanos": 77804565}, "thread": 140232720623360, "severity": "DEBUG"}

{"message": "This should be a warning", "timestamp": {"seconds": 1609443585, "nanos": 78260660}, "thread": 140232720623360, "severity": "WARNING"}

{"message": "This should be an error", "timestamp": {"seconds": 1609443585, "nanos": 78758001}, "thread": 140232720623360, "severity": "ERROR"}

AFTER LOG

{"message": "127.0.0.1 - - [31/Dec/2020 14:39:45] \"\u001b[37mPOST / HTTP/1.1\u001b[0m\" 200 -", "timestamp": {"seconds": 1609443585, "nanos": 82943439}, "thread": 140232720623360, "severity": "INFO"}

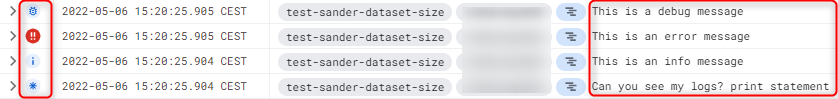

So... then I deploy it to the cloud and trigger it there thru its associated topic, and I see:

So, stdout seems to work fine but the logger output is missing. Final comment: I did create the account key and have put the json file into the function deployment root folder, and created the environment variable GOOGLE_APPLICATION_CREDENTIALS=key.json. On the chance that the problem is that the file isn't being picked up, I also tested this with the value referring to a non-existent file. The deployment fails if I do this so I'm confident the key file is being picked up.

Which brings me to my question: what am I doing wrong?

EDIT - Adding env details

I am deploying the function using the GSDK as follows:

gcloud functions deploy sample_pubsub --source=${SOURCE_DIR} --runtime=python38 --trigger-topic=${PUBSUB_TOPIC} --set-env-vars GOOGLE_APPLICATION_CREDENTIALS=key.json,PYTHONUNBUFFERED=1There is a requirements.txt file in the same folder as the function py file specifying ONLY "google-cloud-logging" without any version constraints. ** For local debugging I have a venv created with python 3.8.5 and I've pip-installed only google-cloud-logging and functions-framework - again without any version constraints.

Having said that, if I do a pip freeze within my activated virtual environment:

appdirs==1.4.3

CacheControl==0.12.6

cachetools==4.2.0

certifi==2019.11.28

chardet==3.0.4

click==7.1.2

cloudevents==1.2.0

colorama==0.4.3

contextlib2==0.6.0

deprecation==2.1.0

distlib==0.3.0

distro==1.4.0

Flask==1.1.2

functions-framework==2.1.0

google-api-core==1.24.1

google-auth==1.24.0

google-cloud-core==1.5.0

google-cloud-logging==2.0.2

googleapis-common-protos==1.52.0

grpcio==1.34.0

gunicorn==20.0.4

html5lib==1.0.1

idna==2.8

ipaddr==2.2.0

itsdangerous==1.1.0

Jinja2==2.11.2

lockfile==0.12.2

MarkupSafe==1.1.1

msgpack==0.6.2

packaging==20.3

pep517==0.8.2

progress==1.5

proto-plus==1.13.0

protobuf==3.14.0

pyasn1==0.4.8

pyasn1-modules==0.2.8

pyparsing==2.4.6

pytoml==0.1.21

pytz==2020.5

requests==2.22.0

retrying==1.3.3

rsa==4.6

six==1.14.0

urllib3==1.25.8

watchdog==1.0.2

webencodings==0.5.1

Werkzeug==1.0.1