Problem

I am trying to run a remote Spark job from IntelliJ on an HDInsight Spark cluster (HDI 4.0). In my Spark application, I am trying to read an input stream from a folder of Parquet files in Azure Blob Storage using Structured Streaming's built-in `readStream` function.
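For context, a minimal Scala sketch of this kind of streaming read follows. It assumes the default `wasbs://` Blob Storage connector on HDInsight. The object name `ParquetStreamSketch`, the helper `wasbsPath`, the container, account and folder values, and the three-column schema are all hypothetical placeholders. File-based streaming sources need an explicit schema unless `spark.sql.streaming.schemaInference` is enabled.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.types.{LongType, StringType, StructField, StructType, TimestampType}

object ParquetStreamSketch {
  // Builds a WASB(S) URI for a folder in an Azure Blob Storage container.
  def wasbsPath(container: String, account: String, folder: String): String =
    s"wasbs://${container}@${account}.blob.core.windows.net/${folder}"

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("ParquetStreamSketch").getOrCreate()

    // Hypothetical schema: streaming file sources require one up front
    // unless spark.sql.streaming.schemaInference is set to true.
    val schema = StructType(Seq(
      StructField("id", LongType, nullable = true),
      StructField("value", StringType, nullable = true),
      StructField("eventTime", TimestampType, nullable = true)
    ))

    // Hypothetical container/account/folder names; substitute your own.
    val inputPath = wasbsPath("mycontainer", "myaccount", "input/parquet")

    // Treat new Parquet files appearing in the folder as a stream.
    val stream = spark.readStream
      .schema(schema)
      .parquet(inputPath)

    // Print each micro-batch to the driver console.
    val query = stream.writeStream
      .format("console")
      .outputMode("append")
      .start()

    query.awaitTermination()
  }
}
```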

The code works as expected when I run it in a Zeppelin notebook attached to the HDInsight cluster. However, when I deploy my Spark application to the cluster, I get the following error:

java.lang.IllegalAccessError: class org.apache.hadoop.hdfs.web.HftpFileSystem cannot access its superinterface org.apache.hadoop.hdfs.web.TokenAspect$TokenManagementDelegator

As a result, I cannot read any data from Blob Storage.

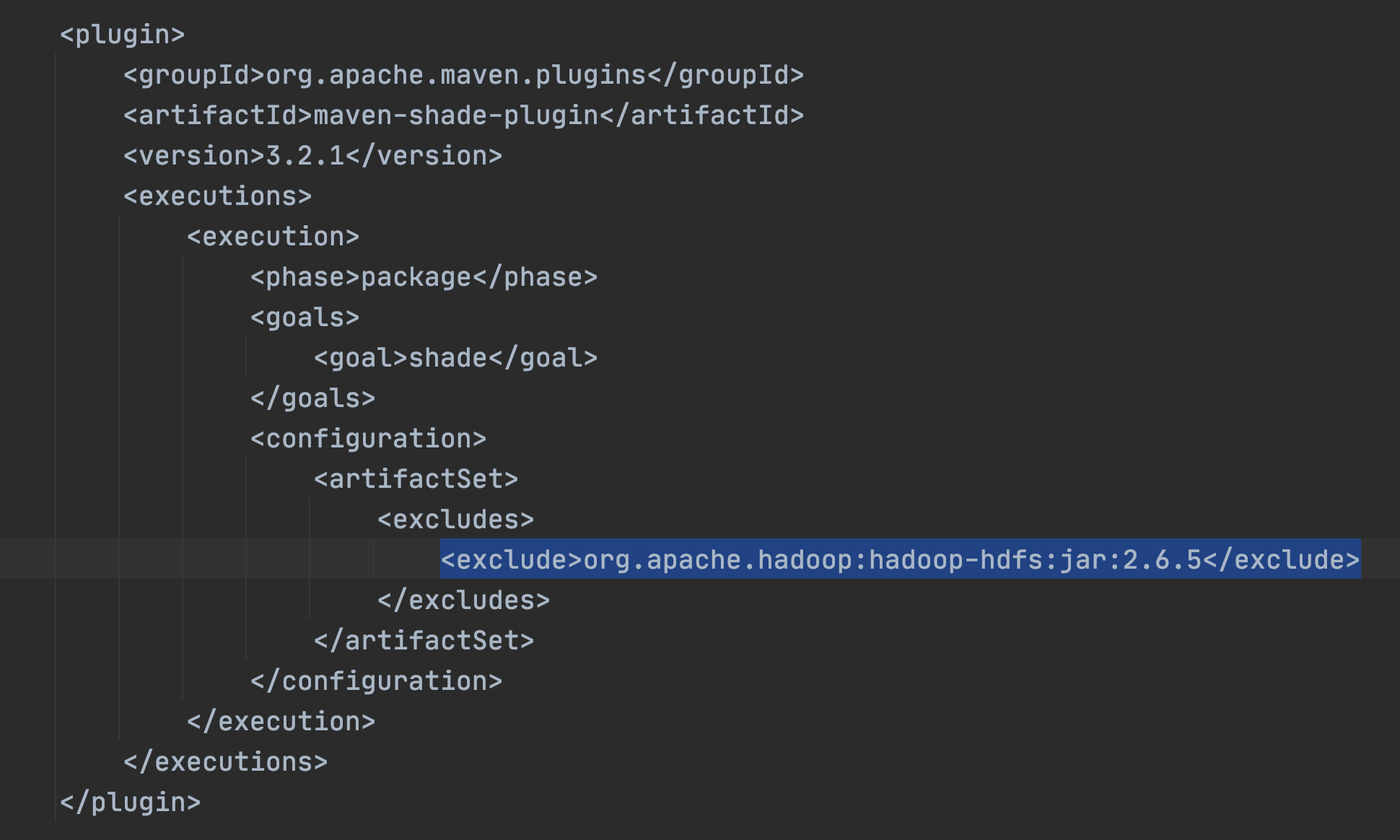

What little information I found online suggests that this is caused by a version conflict between Spark and Hadoop. My application runs with Spark 2.4 prebuilt for Hadoop 2.7.
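One way to confirm which versions the application actually loads is to print them from the driver. This is a diagnostic sketch, not a fix: `VersionCheck` and `majorVersion` are hypothetical names, and it assumes `spark-core` and `hadoop-common` are on the classpath. `VersionInfo.getVersion` reports the version of the `hadoop-common` jar that was loaded, and the code-source location shows which jar `FileSystem` came from, so a 2.7.x version or a path into the local spark/jars folder would point to the mismatch described above.

```scala
import org.apache.hadoop.fs.FileSystem
import org.apache.hadoop.util.VersionInfo
import scala.util.Try

object VersionCheck {
  // Major component of a version string, e.g. "2.7.3" -> Some(2);
  // None if the string does not start with a number (e.g. "Unknown").
  def majorVersion(version: String): Option[Int] =
    Try(version.takeWhile(_ != '.').toInt).toOption

  def main(args: Array[String]): Unit = {
    // Spark version of the spark-core jar on the driver classpath.
    val sparkVersion = org.apache.spark.SPARK_VERSION
    // Hadoop version reported by the hadoop-common jar that was actually loaded.
    val hadoopVersion = VersionInfo.getVersion
    // Jar that FileSystem was loaded from ("unknown" if no code source is available).
    val fsJar = Option(classOf[FileSystem].getProtectionDomain.getCodeSource)
      .map(_.getLocation.toString)
      .getOrElse("unknown")

    val major = majorVersion(hadoopVersion).map(_.toString).getOrElse("?")
    println(s"Spark version:  $sparkVersion")
    println(s"Hadoop version: $hadoopVersion (major $major)")
    println(s"FileSystem loaded from: $fsJar")
  }
}
```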

Fix

To work around this, I connected via SSH to each head node and worker node of the cluster and manually downgraded the Hadoop dependencies from 3.1.x to 2.7.3 to match the version in my local spark/jars folder. After doing this, I was able to deploy my application successfully. Moving the cluster off HDI 4.0 is not an option, because it is the only cluster version that supports Spark 2.4.

Summary

To summarize: could the issue be that I am using a Spark distribution prebuilt for Hadoop 2.7? Is there a better way to resolve this conflict than manually downgrading Hadoop on the cluster's nodes or changing the Spark version I use?