UPD (2022)

For those who know Russian, I made a presentation and wrote a couple of articles on this topic:

- RAM consumption in Golang: problems and solutions (Потребление оперативной памяти в языке Go: проблемы и пути решения)

- Preventing memory leaks in Go, part 1. Business logic errors (Предотвращаем утечки памяти в Go, ч. 1. Ошибки бизнес-логики)

- Preventing memory leaks in Go, part 2. Runtime features (Предотвращаем утечки памяти в Go, ч. 2. Особенности рантайма)

Original answer (2017)

I was always confused by the growing resident memory of my Go applications, and eventually I had to learn the profiling tools available in the Go ecosystem. The runtime exposes many metrics in the runtime.MemStats structure, but it can be hard to tell which of them help find the causes of memory growth, so some additional tools are needed.
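For reference, the runtime.MemStats fields discussed in the analysis below can be read directly with the standard library. The sketch below is a minimal illustration only; `memSnapshot` and `takeSnapshot` are hypothetical names, not part of any package, and this is not a replacement for the dashboards set up next.

```go
package main

import (
	"fmt"
	"runtime"
)

// memSnapshot holds the runtime.MemStats fields discussed in this answer,
// plus the current goroutine count. (Hypothetical helper type.)
type memSnapshot struct {
	Sys          uint64 // total bytes obtained from the OS
	HeapSys      uint64 // bytes of heap memory obtained from the OS
	HeapIdle     uint64 // bytes in idle (unused) heap spans
	HeapReleased uint64 // bytes of physical memory returned to the OS
	HeapObjects  uint64 // number of allocated heap objects
	NumGoroutine int    // goroutines that currently exist
}

// takeSnapshot reads the current runtime statistics.
// runtime.ReadMemStats briefly stops the world, so avoid calling it in hot paths.
func takeSnapshot() memSnapshot {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return memSnapshot{
		Sys:          m.Sys,
		HeapSys:      m.HeapSys,
		HeapIdle:     m.HeapIdle,
		HeapReleased: m.HeapReleased,
		HeapObjects:  m.HeapObjects,
		NumGoroutine: runtime.NumGoroutine(),
	}
}

// String formats the snapshot as a single log-friendly line.
func (s memSnapshot) String() string {
	return fmt.Sprintf("sys=%d heap_sys=%d heap_idle=%d heap_released=%d heap_objects=%d goroutines=%d",
		s.Sys, s.HeapSys, s.HeapIdle, s.HeapReleased, s.HeapObjects, s.NumGoroutine)
}
```

Logging `takeSnapshot()` periodically gives a rough, text-only view of the same curves.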

Profiling environment

Use https://github.com/tevjef/go-runtime-metrics in your application. For instance, you can put this in your main:

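```go
package main

import (
	"log"
	"time"

	metrics "github.com/tevjef/go-runtime-metrics"
)

func main() {
	// ...

	// Collect runtime metrics once per second.
	metrics.DefaultConfig.CollectionInterval = time.Second

	// Start the background collector that ships the metrics to InfluxDB.
	if err := metrics.RunCollector(metrics.DefaultConfig); err != nil {
		log.Fatalf("cannot start runtime metrics collector: %v", err)
	}

	// ...
}
```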

Run InfluxDB and Grafana within Docker containers:

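```shell
# Note: the untagged influxdb image now resolves to InfluxDB 2.x; this 2017 setup
# was written against InfluxDB 1.x, so you may need to pin a 1.x tag.
docker run --name influxdb -d -p 8086:8086 influxdb
docker run -d -p 9090:3000/tcp --link influxdb --name=grafana grafana/grafana:4.1.0
```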

Set up the connection between Grafana and InfluxDB (Grafana main page -> Top left corner -> Datasources -> Add new datasource):


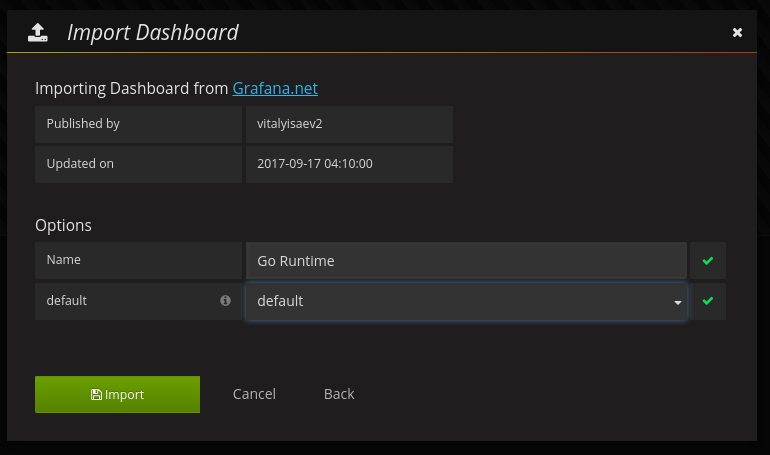

Import dashboard #3242 from https://grafana.com (Grafana main page -> Top left corner -> Dashboard -> Import):


Finally, launch your application: it will send runtime metrics to the containerized InfluxDB. Put your application under a reasonable load (in my case it was quite small: 5 RPS for several hours).

Memory consumption analysis

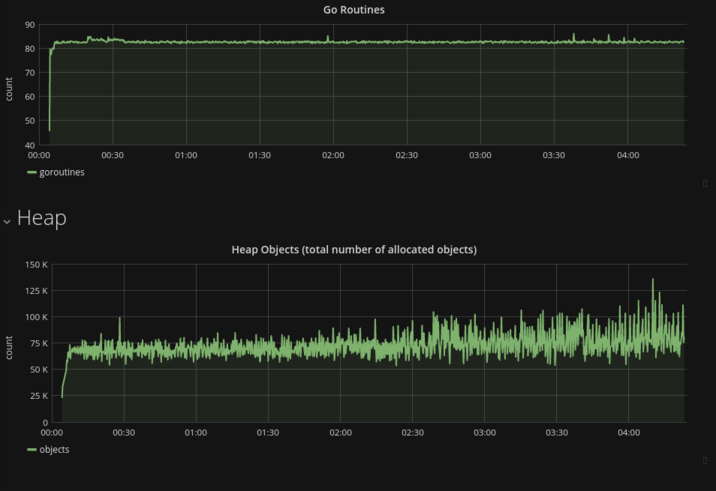

- The Sys curve (used here as a proxy for RSS) closely follows the HeapSys curve. It turns out that dynamic memory allocation was the main driver of overall memory growth, while the small amount of memory consumed by stack variables stays roughly constant and can be ignored;

- The constant number of goroutines rules out goroutine leaks and the stack memory leaks that come with them;

- The total number of allocated objects stays the same throughout the lifetime of the process (minor fluctuations can be ignored);

- The most surprising fact: HeapIdle grows at the same rate as Sys, while HeapReleased is always zero. Evidently the runtime does not return memory to the OS at all, at least under the conditions of this test:

> HeapIdle minus HeapReleased estimates the amount of memory that could be returned to the OS, but is being retained by the runtime so it can grow the heap without requesting more memory from the OS.


If you are investigating memory consumption, I would recommend following the steps described above to rule out trivial errors (such as goroutine leaks).

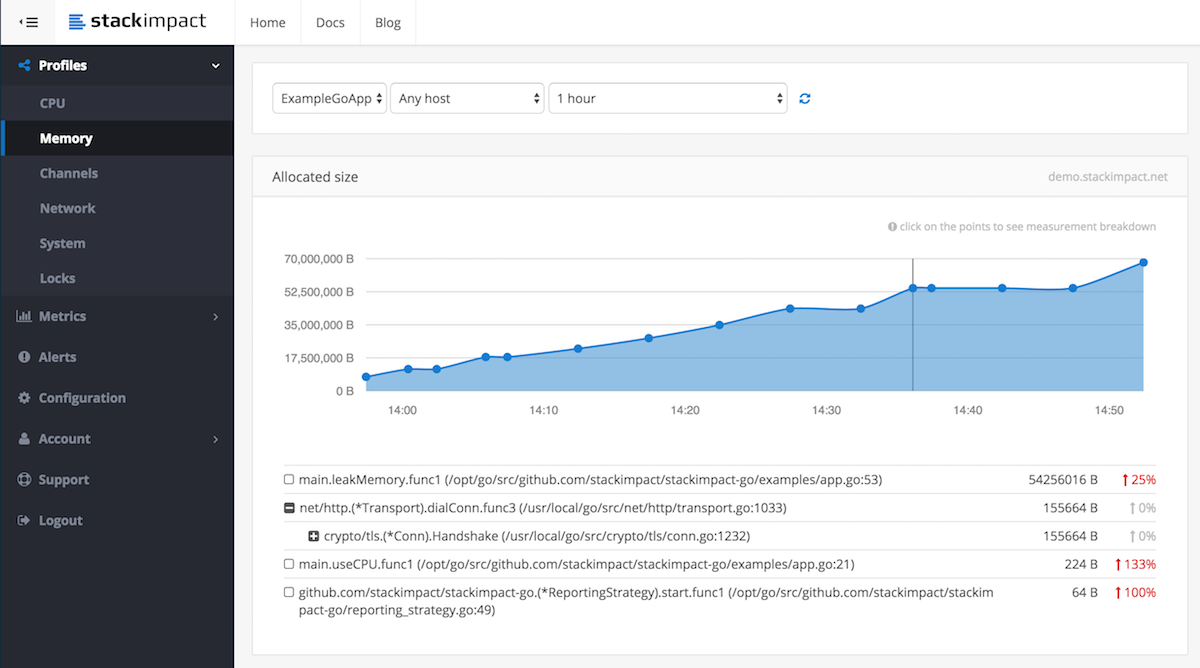

Freeing memory explicitly

Interestingly, memory consumption can be reduced significantly with explicit calls to debug.FreeOSMemory():

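```go
// in the top-level package
import (
	"runtime/debug"
	"time"
)

func init() {
	go func() {
		// Once per second, force a garbage collection and return
		// as much memory to the OS as possible.
		for range time.Tick(time.Second) {
			debug.FreeOSMemory()
		}
	}()
}
```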

![comparison]()

In my case, this approach saved about 35% of memory compared with the default behavior.