With PyTorch's TensorBoard `SummaryWriter`, I can log my train and valid loss in a single TensorBoard graph like this:

writer = torch.utils.tensorboard.SummaryWriter()

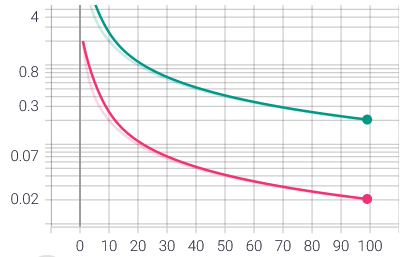

for i in range(1, 100):

writer.add_scalars('loss', {'train': 1 / i}, i)

for i in range(1, 100):

writer.add_scalars('loss', {'valid': 2 / i}, i)

How can I achieve the same with PyTorch Lightning's default TensorBoard logger? Logging with `self.log()` as below creates a separate graph for each tag:

```python
from typing import Tuple

from torch import Tensor

# Methods of the LightningModule subclass (class body omitted)
def training_step(self, batch: Tuple[Tensor, Tensor], _batch_idx: int) -> Tensor:
    inputs_batch, labels_batch = batch
    outputs_batch = self(inputs_batch)
    loss = self.criterion(outputs_batch, labels_batch)
    self.log('loss/train', loss.item())  # creates separate graph
    return loss

def validation_step(self, batch: Tuple[Tensor, Tensor], _batch_idx: int) -> None:
    inputs_batch, labels_batch = batch
    outputs_batch = self(inputs_batch)
    loss = self.criterion(outputs_batch, labels_batch)
    self.log('loss/valid', loss.item(), on_step=True)  # creates separate graph
```

`self.log()`. Was hoping I had missed something, as logging train/valid loss together seems like a pretty basic use case to me. – Catechin
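One possible workaround, sketched under the assumption that the module uses Lightning's default `TensorBoardLogger`: that logger's `experiment` attribute is the underlying `torch.utils.tensorboard.SummaryWriter`, so `add_scalars` can be called on it directly, just like in the plain-PyTorch snippet. `CombinedScalarsMixin` and `log_combined` are hypothetical names for illustration, not part of Lightning's API.

```python
from typing import Any, Dict, Optional


class CombinedScalarsMixin:
    """Hypothetical mixin for a LightningModule (not a Lightning API).

    Assumes self.logger is Lightning's TensorBoardLogger, whose
    `experiment` attribute is a torch SummaryWriter.
    """

    def log_combined(self, main_tag: str, values: Dict[str, float],
                     step: Optional[int] = None) -> bool:
        """Write every entry of `values` into one TensorBoard chart.

        Returns False and logs nothing if no compatible writer is attached.
        """
        logger: Any = getattr(self, "logger", None)
        writer = getattr(logger, "experiment", None) if logger is not None else None
        if writer is None or not hasattr(writer, "add_scalars"):
            return False
        if step is None:
            # global_step counts optimizer steps; it does not advance during validation
            step = self.global_step
        # Same call as the plain-PyTorch version: add_scalars(main_tag, dict, step)
        writer.add_scalars(main_tag, dict(values), step)
        return True
```

With a module declared as `class Model(CombinedScalarsMixin, pl.LightningModule)` (assuming `import pytorch_lightning as pl`), `training_step` would call `self.log_combined('loss', {'train': loss.item()})` and `validation_step` would call `self.log_combined('loss', {'valid': loss.item()})`. Because `global_step` does not advance during validation, every validation batch of an epoch lands on the same x-value, so logging an epoch-level mean (for example from `on_validation_epoch_end`) may give a cleaner curve. Values written this way bypass `self.log()`, so callbacks that read logged metrics, such as `ModelCheckpoint` with `monitor`, will not see them.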