I have a Raspberry Pi board with a dedicated camera that records video only in H.264. I am looking for the best way to stream that video and play it in real time (as in, less than 1 second of delay) in a C# Windows Forms app. An additional requirement is that the stream can be easily processed before it is displayed, for example to search for objects in the image.

Stuff I tried:

- VLC server on the Raspberry Pi and a VLC control in the C# Forms app <- a simple solution using RTSP, but it has a serious flaw: a ~3 second delay in the displayed image. I couldn't fix it by changing the buffer size or other options.

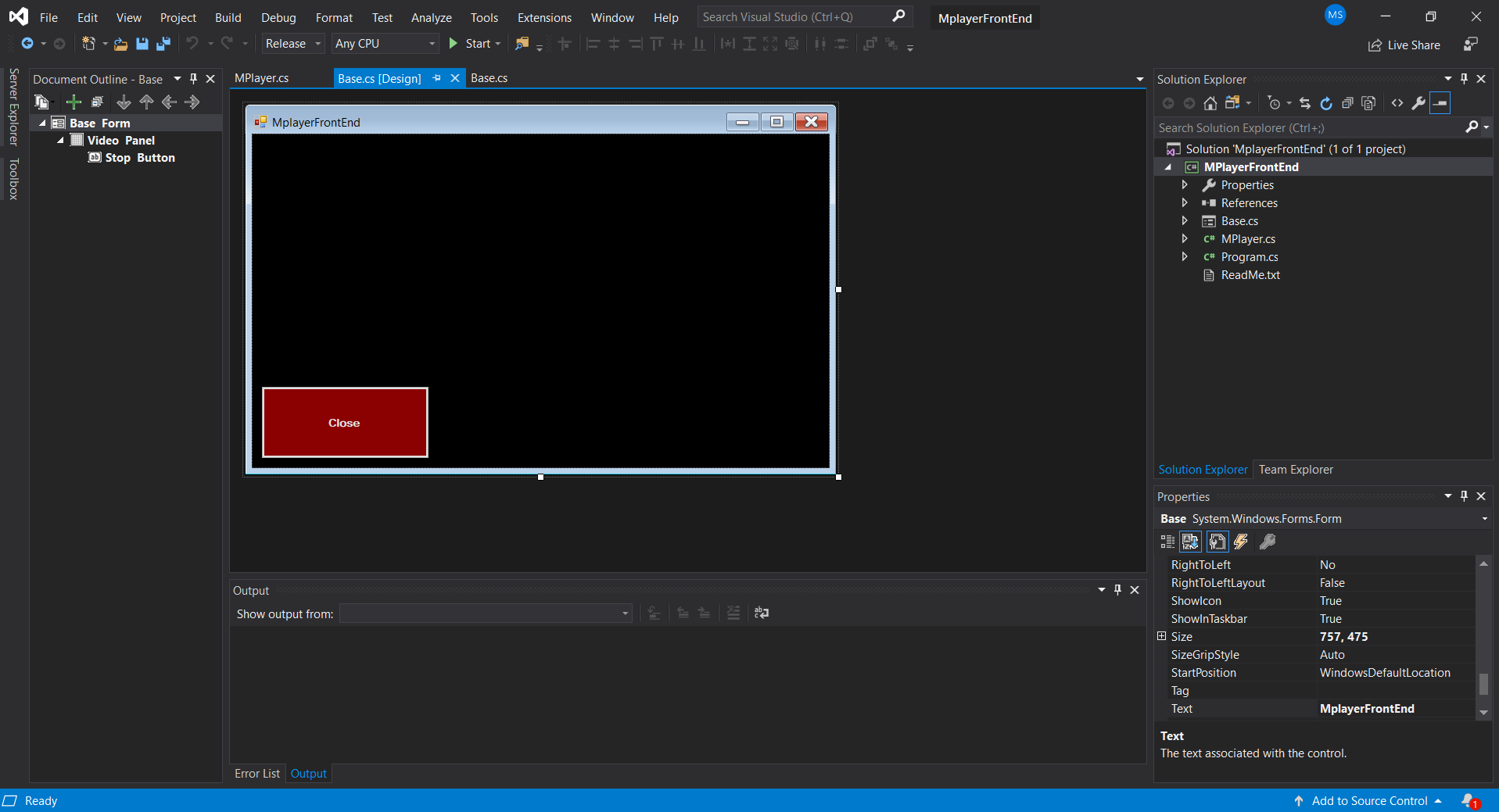

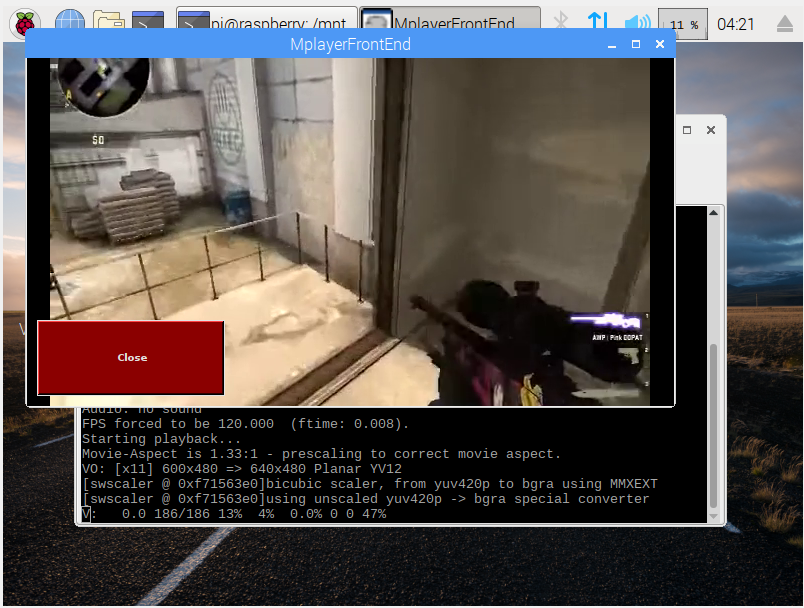

- creating a socket on the Raspberry Pi with nc, receiving the raw H.264 data in C# and passing it to an mplayer frontend <- If I simply run raspivid | nc on the Pi and nc | mplayer on the laptop, I get exactly the result I want: the video is pretty much real time. The problem arises when I try to build the mplayer frontend in C# and replace nc.exe with my own code. Maybe I'm passing the H.264 data the wrong way (I simply write it to stdin), or maybe it is something else.

- using https://github.com/cisco/openh264 <- I compiled everything, but I can't even decode the sample vid.h264 that I recorded on the Raspberry Pi with h264dec.exe, let alone use the library from C#.

`h264dec.exe vid.h264 out.yuv`

This produces a 0-byte out.yuv file, while:

`h264dec.exe vid.h264`

gives me the error message: "No input file specified in configuration file."

- ffmpeg <- I implemented ffplay.exe playback in the C# app, but the lack of an easy way to take screen captures etc. discouraged me from investigating and developing it further.

I'm not even sure whether I'm properly approaching the subject, so I'd be really thankful for every piece of advice I can get.

EDIT: Here is the 'working' solution that I am trying to reproduce in C#. On the Raspberry Pi:

    raspivid --width 400 --height 300 -t 9999999 --framerate 25 --output - | nc -l 5884

    nc ip_addr 5884 | mplayer -nosound -fps 100 -demuxer +h264es -cache 1024 -

The key here is -fps 100, because then mplayer skips the lag and plays the video as soon as it receives it, at normal speed. The issue is that I don't know how to pass the video data from the socket into mplayer from C#. I guess it is not done via stdin, because I already tried that.
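For reference, the stdin approach could look roughly like the sketch below. It is only an illustration under a few assumptions, not a confirmed fix: the class name `H264Relay`, the helper `Pump`, and the `mplayerPath` parameter are made up for this example, and the mplayer arguments are copied from the command line above. The one detail worth checking is that the bytes go to `StandardInput.BaseStream` rather than to the `StandardInput` writer itself, because that writer is a text `StreamWriter` and is not meant for raw binary data.

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Net.Sockets;

static class H264Relay
{
    // Copies raw bytes from input to output until input ends.
    // Flushes after every chunk so the player is not left waiting on a buffer.
    // Returns the total number of bytes copied.
    public static long Pump(Stream input, Stream output, int bufferSize = 4096)
    {
        var buffer = new byte[bufferSize];
        long total = 0;
        int read;
        while ((read = input.Read(buffer, 0, buffer.Length)) > 0)
        {
            output.Write(buffer, 0, read);
            output.Flush();
            total += read;
        }
        return total;
    }

    // Plays the role of "nc ip_addr 5884 | mplayer ... -":
    // connects to the Pi and feeds the stream to mplayer's stdin.
    // mplayerPath is a hypothetical parameter, e.g. the full path to mplayer.exe.
    public static void Run(string host, int port, string mplayerPath)
    {
        var startInfo = new ProcessStartInfo
        {
            FileName = mplayerPath,
            // Same arguments as the working command line; the trailing "-" means stdin.
            Arguments = "-nosound -fps 100 -demuxer +h264es -cache 1024 -",
            UseShellExecute = false,      // required for stream redirection
            RedirectStandardInput = true,
            CreateNoWindow = true
        };

        using (var client = new TcpClient(host, port))
        using (var player = Process.Start(startInfo))
        using (NetworkStream network = client.GetStream())
        {
            // BaseStream is the raw byte stream behind the text writer.
            Stream playerStdin = player.StandardInput.BaseStream;
            Pump(network, playerStdin);
            playerStdin.Close();          // signal end of input to mplayer
            player.WaitForExit();
        }
    }
}
```

`Run` blocks until the stream ends, so in a Windows Forms app it would have to be called from a background thread, for example `Task.Run(() => H264Relay.Run("ip_addr", 5884, "mplayer.exe"))`. If mplayer exits early, the write to its stdin throws an `IOException`, which this sketch does not handle.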