I have installed Kafka on a local Minikube cluster using the Confluent Helm charts (https://github.com/confluentinc/cp-helm-charts), following these instructions: https://docs.confluent.io/current/installation/installing_cp/cp-helm-charts/docs/index.html. I ran:

helm install -f kafka_config.yaml confluentinc/cp-helm-charts --name kafka-home-delivery --namespace cust360

The kafka_config.yaml is almost identical to the default values file. The only change is that I scaled it down to 1 server/broker instead of 3 (just to conserve resources on my local Minikube; hopefully that's not relevant to my problem).
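The same scale-down can probably also be done on the command line instead of in `kafka_config.yaml`. This is a minimal sketch, not taken from the chart docs. It assumes the chart exposes the value keys `cp-kafka.brokers` and `cp-zookeeper.servers`, and it keeps the Helm 2 syntax (`--name`) from the command above. The function name `install_single_broker` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install cp-helm-charts with one Kafka broker and one
# ZooKeeper server. Assumes Helm 2 (release name given via --name) and that
# the chart's value keys are cp-kafka.brokers and cp-zookeeper.servers.
install_single_broker() {
  local release="${1:-kafka-home-delivery}" namespace="${2:-cust360}"
  helm install confluentinc/cp-helm-charts \
    --name "$release" \
    --namespace "$namespace" \
    --set cp-kafka.brokers=1 \
    --set cp-zookeeper.servers=1
}
```

Call it as `install_single_broker kafka-home-delivery cust360`. With Helm 3 the `--name` flag no longer exists and the release name is passed positionally instead (`helm install kafka-home-delivery confluentinc/cp-helm-charts ...`).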

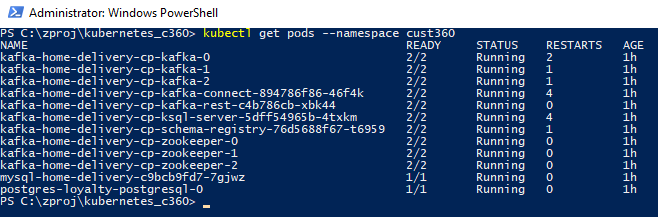

Also running on Minikube is a MySQL instance. Here's the output of kubectl get pods --namespace myNamespace:

I want to connect MySQL to Kafka using one of the available connectors (for instance, Debezium MySQL CDC). Its installation instructions say:

Install your connector

Use the Confluent Hub client to install this connector with:

confluent-hub install debezium/debezium-connector-mysql:0.9.2

Sounds good, except that (1) I don't know which pod to run this command on, and (2) none of the pods seems to have the confluent-hub command available.
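One way to check point (2) on the pod that would normally host connectors is sketched below. It assumes the Kafka Connect pod's name contains `kafka-connect`, that its Connect container is named `cp-kafka-connect-server` (named explicitly because the pod may also run a JMX-exporter sidecar), and that the namespace is the `cust360` passed to `helm install` above. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: find the Kafka Connect pod and test for the confluent-hub CLI.
# Assumptions: pod name contains "kafka-connect"; the Connect container is
# called "cp-kafka-connect-server"; both can be overridden via env vars.
NAMESPACE="${NAMESPACE:-cust360}"
CONTAINER="${CONTAINER:-cp-kafka-connect-server}"

# Print the first pod in $NAMESPACE whose name contains "kafka-connect".
find_connect_pod() {
  kubectl get pods --namespace "$NAMESPACE" --no-headers \
    | awk '$1 ~ /kafka-connect/ { print $1; exit }'
}

# Succeed if confluent-hub is on the PATH of the Connect container in pod $1.
has_confluent_hub() {
  kubectl exec --namespace "$NAMESPACE" "$1" --container "$CONTAINER" -- \
    sh -c 'command -v confluent-hub' >/dev/null 2>&1
}
```

For example, `has_confluent_hub "$(find_connect_pod)" && echo found || echo missing` reports whether the client exists in that container.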

Questions:

- Does confluent-hub not come installed via those Helm charts?

- Do I have to install confluent-hub myself?

- If so, which pod do I have to install it on?

`kafka-connect` pod? You may also want to take a look at Strimzi. It provides a Kubernetes-native way to roll out Kafka clusters on k8s. – Mozambique
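Because a Deployment recreates its pods from the image, a connector installed into a running pod with `confluent-hub` would likely be lost when the pod restarts. One option is to bake the connector into a custom Connect image instead. This is a sketch under stated assumptions: the base image `confluentinc/cp-kafka-connect-base` ships the `confluent-hub` client, the chart exposes `cp-kafka-connect.image` and `cp-kafka-connect.imageTag`, and the image name `my-connect-debezium` and all function names are hypothetical. The Confluent Platform version is left for you to supply.

```shell
#!/usr/bin/env bash
# Sketch: build a Kafka Connect image with the Debezium MySQL connector
# preinstalled. Assumptions: cp-kafka-connect-base includes confluent-hub;
# "my-connect-debezium" is a hypothetical image name.
IMAGE="${IMAGE:-my-connect-debezium}"
TAG="${TAG:-0.1}"

# Print a Dockerfile for the Confluent Platform version given as $1.
connect_dockerfile() {
  cat <<EOF
FROM confluentinc/cp-kafka-connect-base:$1
# --no-prompt keeps the build from waiting for interactive input.
RUN confluent-hub install --no-prompt debezium/debezium-connector-mysql:0.9.2
EOF
}

# Build against Minikube's Docker daemon so the cluster can use the image
# without a registry. The Dockerfile is read from stdin (no build context).
build_connect_image() {
  eval "$(minikube docker-env)"
  connect_dockerfile "$1" | docker build -t "$IMAGE:$TAG" -
}

# Point the existing release at the custom image (assumed value keys).
use_connect_image() {
  helm upgrade kafka-home-delivery confluentinc/cp-helm-charts \
    --namespace cust360 \
    -f kafka_config.yaml \
    --set cp-kafka-connect.image="$IMAGE" \
    --set cp-kafka-connect.imageTag="$TAG"
}
```

The intended order is `build_connect_image <your-cp-version>` followed by `use_connect_image`. Using a locally built image this way also assumes the chart's pull policy for Connect lets Kubernetes use an image that exists only in Minikube's Docker daemon (for example `IfNotPresent`).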