When you use %sh, you are executing shell commands on the driver node against its local filesystem. However, /FileStore/ is not on the local filesystem, which is why you are experiencing the problem. You can see this by trying:

```
%sh ls /FileStore

# ls: cannot access '/FileStore': No such file or directory
```

vs.

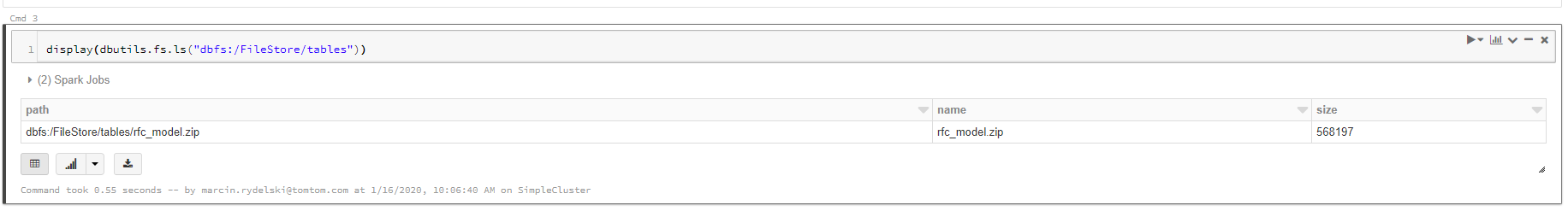

```scala
dbutils.fs.ls("/FileStore")

// resX: Seq[com.databricks.backend.daemon.dbutils.FileInfo] = WrappedArray(...)
```

You have to either use an unzip utility that can work with the Databricks file system (DBFS), or copy the zip from the file store to the driver's local disk, unzip it there, and then copy the results back to /FileStore.

You can address the driver's local filesystem from dbutils using file:/... paths, e.g.,

```scala
dbutils.fs.cp("/FileStore/file.zip", "file:/tmp/file.zip")
```
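Once the archive is on the driver's local disk, ordinary JVM file I/O works on it. Below is a minimal sketch of the "unzip on the driver" step, assuming the zip has already been copied to a local path such as /tmp/file.zip with the dbutils.fs.cp call above. `unzipLocal` is a hypothetical helper name, and the destination /FileStore/unzipped is a hypothetical path; the DBFS copies are shown only as comments because dbutils exists only inside a Databricks notebook.

```scala
import java.io.IOException
import java.nio.file.{Files, Path, Paths, StandardCopyOption}
import java.util.zip.ZipInputStream
import scala.collection.mutable.ListBuffer

// Hypothetical helper: extracts every entry of a local zip archive into destDir
// and returns the paths of the extracted files (directories are created, not returned).
def unzipLocal(zipFile: Path, destDir: Path): List[Path] = {
  val root = destDir.toAbsolutePath.normalize()
  val extracted = ListBuffer.empty[Path]
  val zis = new ZipInputStream(Files.newInputStream(zipFile))
  try {
    var entry = zis.getNextEntry
    while (entry != null) {
      val target = root.resolve(entry.getName).normalize()
      // Refuse entries such as "../x" that would escape destDir ("zip slip")
      if (!target.startsWith(root))
        throw new IOException(s"Entry outside target dir: ${entry.getName}")
      if (entry.isDirectory) Files.createDirectories(target)
      else {
        Files.createDirectories(target.getParent)
        // Copies only the current entry: the stream reports end-of-stream at the entry's end
        Files.copy(zis, target, StandardCopyOption.REPLACE_EXISTING)
        extracted += target
      }
      entry = zis.getNextEntry
    }
  } finally zis.close()
  extracted.toList
}

// On Databricks only (hypothetical destination path):
// dbutils.fs.cp("/FileStore/file.zip", "file:/tmp/file.zip")
// unzipLocal(Paths.get("/tmp/file.zip"), Paths.get("/tmp/unzipped"))
// dbutils.fs.cp("file:/tmp/unzipped", "/FileStore/unzipped", true) // true = recurse
```

Extracting with java.nio on the driver avoids %sh entirely, and the zip-slip check keeps a malformed archive from writing outside the target directory.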

Hope this helps.

Side note 1: Databricks file system management is not very intuitive, especially when it comes to the file store. For example, in theory the Databricks file system (DBFS) is mounted locally as /dbfs/. However, /dbfs/FileStore does not address the file store, while dbfs:/FileStore does. You are not alone. :)

Side note 2: If you need to do this for many files, you can distribute the work to the cluster workers by creating a Dataset[String] with the file paths and then calling ds.map { name => ... }.collect(). The collect action forces execution. In the body of the map function you will have to run shell commands through a process API (e.g., scala.sys.process) instead of %sh.
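For the map body, one way to run an external command such as unzip from JVM code is scala.sys.process. A minimal sketch follows; `runCommand` is a hypothetical helper that runs the command directly (not through a shell) and captures its exit code and stdout. The Dataset usage in the comment assumes a Spark session with `spark.implicits._` imported, hypothetical zip paths, and that each path is readable from the worker's local filesystem, which this sketch does not check.

```scala
import scala.collection.mutable.ListBuffer
import scala.sys.process.{Process, ProcessLogger}

// Hypothetical helper: runs an external command and returns (exit code, stdout lines joined by "\n").
// A non-zero exit code is returned, not thrown.
def runCommand(command: Seq[String]): (Int, String) = {
  val out = ListBuffer.empty[String]
  val err = ListBuffer.empty[String]
  val exitCode = Process(command).!(ProcessLogger(line => out += line, line => err += line))
  (exitCode, out.mkString("\n"))
}

// On a cluster (hypothetical paths), each worker would run unzip for its share of files:
// import spark.implicits._
// val zipPaths = Seq("/tmp/a.zip", "/tmp/b.zip").toDS()
// val exitCodes = zipPaths.map(p => runCommand(Seq("unzip", "-o", p, "-d", "/tmp/out"))._1).collect()
```

Returning the exit code from map makes the collected result a cheap way to spot files that failed to extract.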

Side note 3: A while back I used the following Scala utility to unzip on Databricks. I can't verify that it still works, but it could give you some ideas.

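```scala
import java.io.{FileInputStream, FileOutputStream}
import java.util.zip.ZipInputStream

// zipPath and outPath are local paths; outPath presumably starts with /dbfs/ and
// ends with "/", because entry names are appended to it directly.
def unzipFile(zipPath: String, outPath: String): Unit = {
  val zis = new ZipInputStream(new FileInputStream(zipPath))
  // Group 1 is the optional directory part of an entry name, group 2 the file name
  val filePattern = """(.*/)?(.*)""".r

  println("Unzipping...")
  try {
    Iterator.continually(zis.getNextEntry).takeWhile(_ != null).foreach { file =>
      // @todo need a consistent path handling abstraction
      // to address DBFS mounting idiosyncrasies
      // group(1) is null for entries at the archive root, so fall back to ""
      val entryDir = filePattern.findFirstMatchIn(file.getName)
        .flatMap(m => Option(m.group(1)))
        .getOrElse("")
      val dirToCreate = outPath.replaceAll("/dbfs", "") + entryDir
      dbutils.fs.mkdirs(dirToCreate)

      val filename = outPath + file.getName
      // Directory entries end with "/" and have no content to write
      if (!filename.endsWith("/")) {
        println(s"FILE: ${file.getName} to $filename")
        val fout = new FileOutputStream(filename)
        try {
          val buffer = new Array[Byte](1024)
          // read returns -1 at the end of the current entry
          Iterator.continually(zis.read(buffer)).takeWhile(_ != -1).foreach(fout.write(buffer, 0, _))
        } finally fout.close()
      }
    }
  } finally zis.close()
}
```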