Let me begin by saying that I am new to Janus, GStreamer, and WebRTC.

I need to stream video from a remote camera attached to robot hardware to a browser, using GStreamer and WebRTC.

As a proof of concept, I first want to get the same setup working with videotestsrc. This is what I have been trying to do:

- Build a GStreamer Pipeline

- Send its RTP output to Janus using udpsink

- Run Janus gateway and show the test video stream on a browser (Chrome and Firefox).

Here is what I have done so far:

1. I created the following GStreamer pipeline:

gst-launch-1.0 videotestsrc ! video/x-raw,width=1024,height=768,framerate=30/1 ! timeoverlay ! x264enc ! rtph264pay config-interval=1 pt=96 ! udpsink host=192.168.1.6 port=8004
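Before involving Janus, it can help to confirm that the pipeline really emits RTP packets with payload type 96. The following is a minimal Node.js sketch, not part of my setup: it decodes the fixed 12-byte RTP header defined in RFC 3550. The names `parseRtpHeader` and `logRtp` are made up for illustration, and the sample packet is hand-built.

```javascript
// Sketch: decode the fixed 12-byte RTP header (RFC 3550) of a UDP datagram.
const dgram = require('dgram');

function parseRtpHeader(buf) {
  if (buf.length < 12) {
    throw new Error('packet too short for an RTP header');
  }
  return {
    version: buf[0] >> 6,                 // should be 2 for RTP
    marker: (buf[1] & 0x80) !== 0,        // for H.264, set on the last packet of a frame
    payloadType: buf[1] & 0x7f,           // expected to be 96 for the pipeline above
    sequenceNumber: buf.readUInt16BE(2),
    timestamp: buf.readUInt32BE(4),
    ssrc: buf.readUInt32BE(8),
  };
}

// Hypothetical helper: print the header of every datagram received on `port`.
// It is defined but not called here, because the port must be free.
function logRtp(port) {
  const socket = dgram.createSocket('udp4');
  socket.on('message', (msg) => console.log(parseRtpHeader(msg)));
  socket.bind(port);
  return socket;
}

// Hand-built example packet: version 2, marker set, payload type 96, sequence 1.
const sample = Buffer.from([0x80, 0xe0, 0x00, 0x01, 0, 0, 0, 10, 0, 0, 0, 42]);
console.log(parseRtpHeader(sample));
```

To use `logRtp` against the real pipeline, udpsink would have to point at a port that Janus is not already bound to, since 8004 is taken while Janus is running.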

2. I am using modified versions of the Janus streamingtest demo, streamingtest2.js and streamingtest2.html:

var server = null;

if (window.location.protocol === 'http:') {

server = "http://" + window.location.hostname + ":8088/janus";

} else {

server = "https://" + window.location.hostname + ":8089/janus";

}

var janus = null;

var streaming = null;

var started = false;

var spinner = null;

var selectedStream = null;

$(document).ready(function () {

// Initialize the library (console debug enabled)

Janus.init({

debug: true, callback: function () {

startJanus();

}

});

});

function startJanus() {

console.log("starting Janus");

$('#start').click(function () {

if (started) {

return;

}

started = true;

// Make sure the browser supports WebRTC

if (!Janus.isWebrtcSupported()) {

console.error("No webrtc support");

return;

}

// Create session

janus = new Janus({

server: server,

success: function () {

console.log("Success");

attachToStreamingPlugin(janus);

},

error: function (error) {

console.log(error);

console.log("janus error");

},

destroyed: function () {

console.log("destroyed");

}

});

});

}

function attachToStreamingPlugin(janus) {

// Attach to streaming plugin

console.log("Attach to streaming plugin");

janus.attach({

plugin: "janus.plugin.streaming",

success: function (pluginHandle) {

streaming = pluginHandle;

console.log("Plugin attached! (" + streaming.getPlugin() + ", id=" + streaming.getId() + ")");

// Setup streaming session

updateStreamsList();

},

error: function (error) {

console.log(" -- Error attaching plugin... " + error);

console.error("Error attaching plugin... " + error);

},

onmessage: function (msg, jsep) {

console.log(" ::: Got a message :::");

console.log(JSON.stringify(msg));

processMessage(msg);

handleSDP(jsep);

},

onremotestream: function (stream) {

console.log(" ::: Got a remote stream :::");

console.log(JSON.stringify(stream));

handleStream(stream);

},

oncleanup: function () {

console.log(" ::: Got a cleanup notification :::");

}

});//end of janus.attach

}

function processMessage(msg) {

var result = msg["result"];

if (result && result["status"]) {

var status = result["status"];

switch (status) {

case 'starting':

console.log("starting - please wait...");

break;

case 'preparing':

console.log("preparing");

break;

case 'started':

console.log("started");

break;

case 'stopped':

console.log("stopped");

stopStream();

break;

}

} else {

console.log("no status available");

}

}
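The upstream streamingtest.js demo also checks `msg["error"]` before looking at the status, and my refactor dropped that check, so a plugin error would only show up as "no status available". Below is a minimal sketch of a classifier that separates the three cases. The function name `classifyMessage` and the example messages are hypothetical, and the shape of the error field is an assumption based on the demo code.

```javascript
// Sketch: classify a streaming plugin message before acting on it.
// Assumption: plugin errors arrive with an "error" field, as checked in the upstream demo.
function classifyMessage(msg) {
  if (msg && msg.error) {
    return { kind: 'error', detail: String(msg.error) };
  }
  const status = msg && msg.result && msg.result.status;
  if (status) {
    return { kind: 'status', detail: status };
  }
  return { kind: 'other', detail: null };
}

// Made-up example messages, for illustration only.
console.log(classifyMessage({ error: 'example error text' }));
console.log(classifyMessage({ result: { status: 'starting' } }));
console.log(classifyMessage({ streaming: 'event' }));
```

With something like this, `processMessage` could log errors explicitly instead of folding them into the "no status" branch.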

// we never appear to get this jsep thing

function handleSDP(jsep) {

console.log(" :: jsep :: ");

console.log(jsep);

if (jsep !== undefined && jsep !== null) {

console.log("Handling SDP as well...");

console.log(jsep);

// Answer

streaming.createAnswer({

jsep: jsep,

media: { audioSend: false, videoSend: false }, // We want recvonly audio/video

success: function (jsep) {

console.log("Got SDP!");

console.log(jsep);

var body = { "request": "start" };

streaming.send({ "message": body, "jsep": jsep });

},

error: function (error) {

console.log("WebRTC error:");

console.log(error);

console.error("WebRTC error... " + JSON.stringify(error));

}

});

} else {

console.log("no sdp");

}

}

function handleStream(stream) {

console.log(" ::: Got a remote stream :::");

console.log(JSON.stringify(stream));

// Show the stream and hide the spinner when we get a playing event

console.log("attaching remote media stream");

Janus.attachMediaStream($('#remotevideo').get(0), stream);

$("#remotevideo").bind("playing", function () {

console.log("got playing event");

});

}
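Calling `JSON.stringify` on a MediaStream typically prints `{}`, so the log lines above say little about what was actually received. A small sketch that summarises the tracks instead, using the standard `getTracks()` method and track properties; `describeStream` and the stand-in `fakeStream` object are hypothetical and exist only so the snippet runs outside a browser.

```javascript
// Sketch: summarise the tracks of a MediaStream for logging.
function describeStream(stream) {
  return stream.getTracks().map((track) => ({
    kind: track.kind,             // "audio" or "video"
    id: track.id,
    enabled: track.enabled,
    muted: track.muted,           // true while the track is not receiving media
    readyState: track.readyState, // "live" or "ended"
  }));
}

// Stand-in for a real MediaStream (hypothetical data), so this also runs in Node.js.
const fakeStream = {
  getTracks: () => [
    { kind: 'video', id: 'v1', enabled: true, muted: true, readyState: 'live' },
  ],
};
console.log(JSON.stringify(describeStream(fakeStream)));
```

In the browser, `describeStream(stream)` could replace the `JSON.stringify(stream)` call. A video track that stays muted would suggest that no media is reaching the browser, though that alone does not say where it is being lost.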

function updateStreamsList() {

var body = { "request": "list" };

console.log("Sending message (" + JSON.stringify(body) + ")");

streaming.send({

"message": body, success: function (result) {

if (result === null || result === undefined) {

console.error("no streams available");

return;

}

if (result["list"] !== undefined && result["list"] !== null) {

var list = result["list"];

console.log("Got a list of available streams:");

console.log(list);

console.log("taking the first available stream");

var theFirstStream = list[0];

startStream(theFirstStream);

} else {

console.error("no streams available - list is null");

return;

}

}

});

}

function startStream(selectedStream) {

var selectedStreamId = selectedStream["id"];

console.log("Selected video id #" + selectedStreamId);

if (selectedStreamId === undefined || selectedStreamId === null) {

console.log("No selected stream");

return;

}

var body = { "request": "watch", id: parseInt(selectedStreamId, 10) };

streaming.send({ "message": body });

}

function stopStream() {

console.log("stopping stream");

var body = { "request": "stop" };

streaming.send({ "message": body });

streaming.hangup();

}

<!--

// janus-gateway streamingtest refactor so I can understand it better

// GPL v3 as original

// https://github.com/meetecho/janus-gateway

// https://github.com/meetecho/janus-gateway/blob/master/html/streamingtest.js

-->

<!DOCTYPE html>

<html>

<head>

<script src="https://webrtc.github.io/adapter/adapter-latest.js"></script>

<script type="text/javascript" src="https://cdnjs.cloudflare.com/ajax/libs/jquery/1.9.1/jquery.min.js"></script>

<script type="text/javascript"

src="https://cdnjs.cloudflare.com/ajax/libs/twitter-bootstrap/3.4.1/js/bootstrap.min.js"></script>

<script type="text/javascript"

src="https://cdnjs.cloudflare.com/ajax/libs/bootbox.js/5.4.0/bootbox.min.js"></script>

<script type="text/javascript" src="https://cdnjs.cloudflare.com/ajax/libs/spin.js/2.3.2/spin.min.js"></script>

<script type="text/javascript" src="https://cdnjs.cloudflare.com/ajax/libs/toastr.js/2.1.4/toastr.min.js"></script>

<script type="text/javascript" src="janus.js"></script>

</head>

<body>

<div>

<button class="btn btn-default" autocomplete="off" id="start">Start</button><br />

<div id="stream">

<video controls autoplay id="remotevideo" width="320" height="240" style="border: 1px solid;">

</video>

</div>

</div>

<script type="text/javascript" src="streamingtest2.js"></script>

</body>

</html>

3. I start the Janus server using:

sudo /usr/local/janus/bin/janus

My Janus streaming config file (janus.plugin.streaming.jcfg) looks like this (the full file is on Pastebin as janus.plugin.streaming.jcfg):

rtp-sample: {

type = "rtp"

id = 1

description = "Test Stream - 1"

metadata = "You can use this metadata section to put any info you want!"

audio = true

video = true

audioport = 8005

audiopt = 10

audiortpmap = "opus/48000/2"

videoport = 8004

videopt = 96

videortpmap = "H264/90000"

}

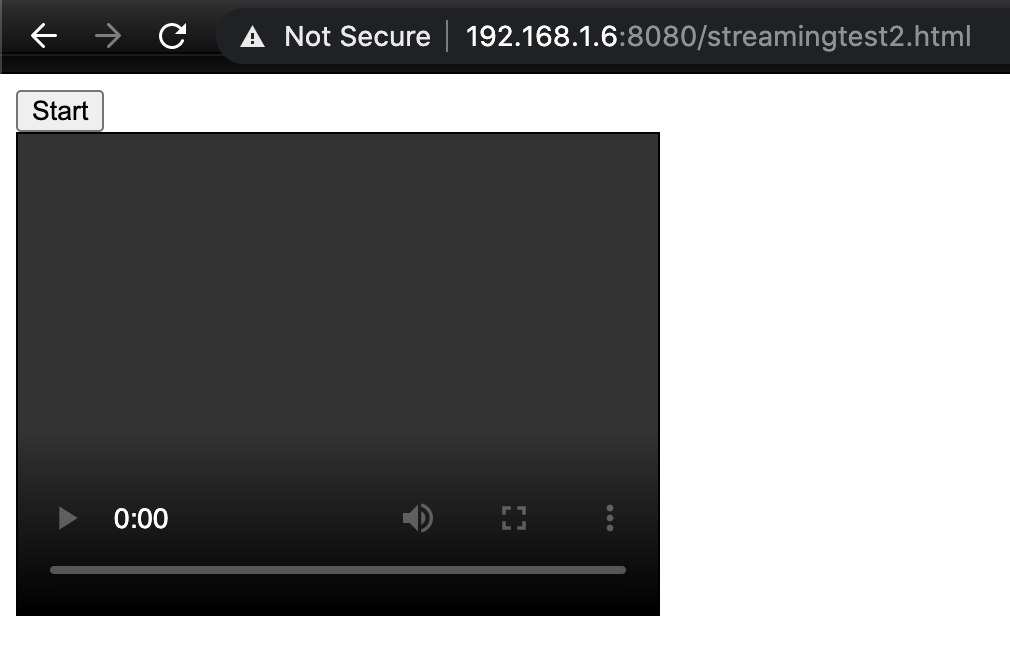
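The mountpoint and the pipeline have to agree on port and payload type, and the mountpoint above also enables audio although the pipeline has no audio branch. The following sketch compares the two sets of values. The function `checkMountpoint` and the `sender` object are hypothetical, with the values copied by hand from the config and the gst-launch line. Whether the unused audio section has anything to do with the black video is not established here.

```javascript
// Sketch: compare mountpoint settings with what the sender actually transmits.
function checkMountpoint(mountpoint, sender) {
  const warnings = [];
  if (mountpoint.video && sender.videoPort !== mountpoint.videoport) {
    warnings.push('video port mismatch');
  }
  if (mountpoint.video && sender.videoPt !== mountpoint.videopt) {
    warnings.push('video payload type mismatch');
  }
  if (mountpoint.audio && sender.audioPort === undefined) {
    warnings.push('audio enabled but nothing is sent to the audio port');
  }
  return warnings;
}

// Values copied by hand from the config above.
const mountpoint = {
  audio: true, video: true,
  audioport: 8005, audiopt: 10,
  videoport: 8004, videopt: 96,
};
// Values copied by hand from the gst-launch line (it has no audio branch).
const sender = { videoPort: 8004, videoPt: 96 };

console.log(checkMountpoint(mountpoint, sender));
```

For the values in this post, the only warning is the one about audio; the video port and payload type match.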

4. I start a local HTTP server on port 8080 and open streamingtest2.html (my current local IP address is 192.168.1.6):

192.168.1.6:8080/streamingtest2.html

5. This opens the test page, which contains a video tag and a Start button.

When I click the Start button, the page connects to the Janus API on port 8088 and waits for the video stream.

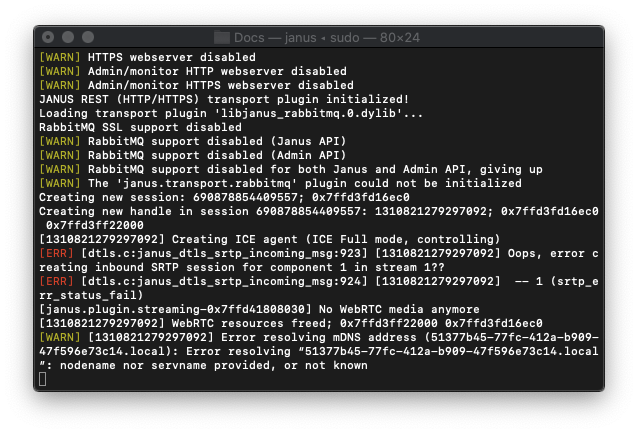

6. At the same time, the Janus server terminal window shows:

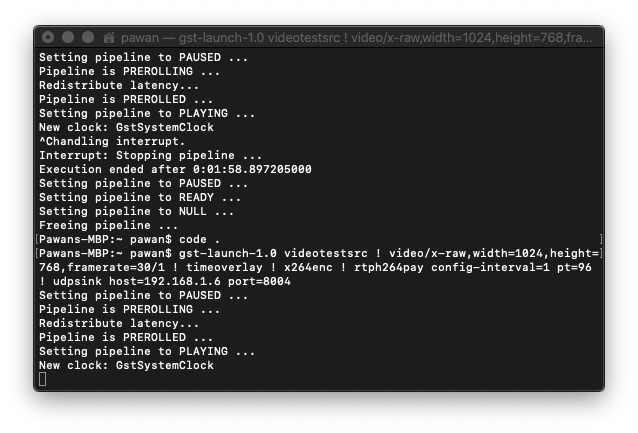

7. And the GStreamer pipeline terminal shows this:

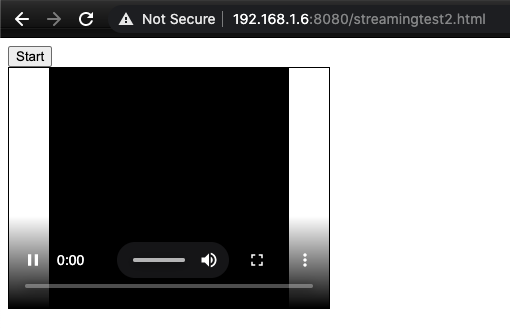

8. In the browser, the video tag shows about 5 seconds of black video and then stops.

Please advise what I am doing wrong (most probably it is in the pipeline, or a certificate issue in the Janus configuration).

Alternatively, can this be achieved more simply using GStreamer's WebRTCBin?

Please see this Pastebin https://pastebin.com/KeHAWjXx for my Google Chrome console log covering all of the steps above. Any input that helps me stream the video using GStreamer and Janus would be appreciated. I also don't understand where WebRTCBin fits into all this.
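One way to tell an ICE connectivity problem from a pipeline problem might be to look at which candidate types appear in the console log. Below is a minimal sketch that extracts the type and address from an SDP candidate line and flags mDNS (`.local`) host addresses. The function `candidateInfo` is hypothetical and the two sample lines are made up, not taken from my log.

```javascript
// Sketch: extract the basic fields of an ICE candidate line.
// Layout: candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> ...
function candidateInfo(line) {
  const parts = line.replace(/^a=/, '').trim().split(/\s+/);
  if (!parts[0].startsWith('candidate:') || parts.indexOf('typ') !== 6 || parts.length < 8) {
    throw new Error('not a recognisable ICE candidate line');
  }
  return {
    transport: parts[2].toLowerCase(),
    address: parts[4],
    port: Number(parts[5]),
    type: parts[7],                    // host, srflx, prflx or relay
    mdns: parts[4].endsWith('.local'), // browsers may hide host addresses behind mDNS names
  };
}

// Made-up sample lines, for illustration only.
const hostLine = 'candidate:1 1 udp 2113937151 3c9a0a1e-0000-4000-8000-000000000000.local 54321 typ host';
const srflxLine = 'a=candidate:2 1 udp 1677729535 203.0.113.7 54321 typ srflx raddr 0.0.0.0 rport 0';

console.log(candidateInfo(hostLine));
console.log(candidateInfo(srflxLine));
```

If the browser only ever offers mDNS host candidates, that would at least be worth ruling out before changing the pipeline.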
