I have two images (slices) captured by two camera sensors that together make up one complete image. However, because the sensors perform differently, the colours and tones of the two slices differ, and I need to match them to produce one unified image.

I used the HistogramMatcher function included in Fiji (ImageJ), explained here, to match the colours of the second image to those of the first. It gives an acceptable result, but the image still needs further processing.
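For reference, here is a minimal NumPy sketch of what I understand per-channel histogram matching to do. It is not Fiji's actual implementation; the function names `match_channel` and `match_histograms` are my own, and I am assuming both slices are arrays of shape (height, width, channels).

```python
import numpy as np


def match_channel(source, reference):
    """Remap one channel of `source` so its CDF follows that of `reference`."""
    # Unique intensities of the source, plus the index of each pixel's value.
    src_values, src_idx, src_counts = np.unique(
        source.ravel(), return_inverse=True, return_counts=True
    )
    ref_values, ref_counts = np.unique(reference.ravel(), return_counts=True)

    # Empirical cumulative distribution functions, scaled to (0, 1].
    src_cdf = np.cumsum(src_counts) / source.size
    ref_cdf = np.cumsum(ref_counts) / reference.size

    # For each source intensity, look up the reference intensity that sits
    # at the same quantile.
    mapped = np.interp(src_cdf, ref_cdf, ref_values)
    return mapped[src_idx].reshape(source.shape)


def match_histograms(source, reference):
    """Apply the channel-wise mapping to every channel of an H x W x C image."""
    channels = [
        match_channel(source[..., c], reference[..., c])
        for c in range(source.shape[-1])
    ]
    return np.stack(channels, axis=-1)
```

Here `match_histograms(second_slice, first_slice)` would return a floating-point copy of the second slice with its tones pulled towards the first. Since each channel is remapped independently, I suspect this is where some of the remaining colour differences come from.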

So my question is: what are the best approaches to obtain a unified image? Should I start with brightness, then hue, then saturation? Also, are there alternatives to the 'HistogramMatcher' function for matching colours?

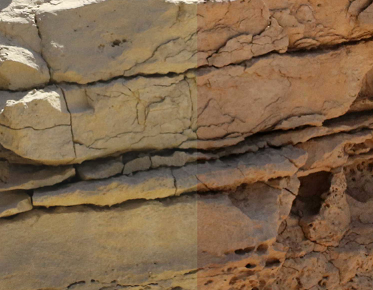

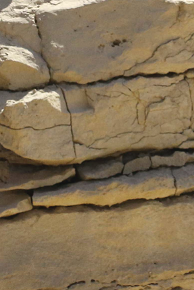

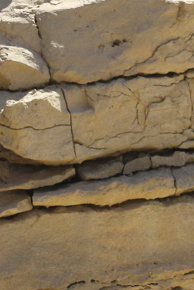

The images above show an example.