I coded a resilience strategy based on retry and circuit-breaker policies. It works now, but there is an issue in its behavior.

I noticed that when the circuit-breaker is half-open and the onBreak() event fires again (re-opening the circuit), the retry policy triggers one additional retry, on top of the health-check call made in the half-open state.

Let me explain step by step:

I've defined two strongly-typed policies, one for retry and one for the circuit-breaker:

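```csharp
using System;                    // TimeSpan, AggregateException
using System.Linq;               // Contains() on the status array
using System.Net;                // HttpStatusCode
using System.Net.Http;           // HttpResponseMessage, HttpRequestException
using Polly;
using Polly.CircuitBreaker;      // BrokenCircuitException

// Fields of the containing class:
static Policy<HttpResponseMessage> customRetryPolicy;
static Policy<HttpResponseMessage> customCircuitBreakerPolicy;

static HttpStatusCode[] httpStatusesToProcess = new HttpStatusCode[]
{
    HttpStatusCode.ServiceUnavailable,  // 503
    HttpStatusCode.InternalServerError, // 500
};
```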

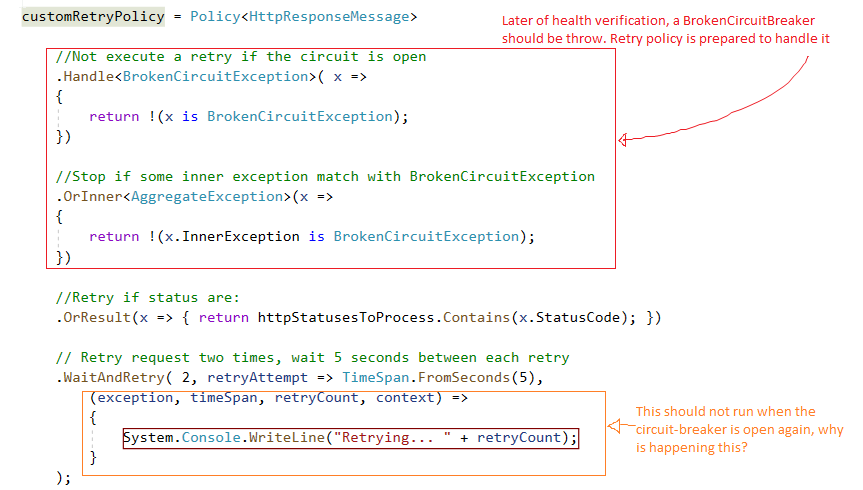

The retry policy works this way: two (2) retries per request, waiting five (5) seconds between retries. If the inner circuit-breaker is open, it must not retry. It retries only on HTTP 500 and 503.

```csharp
customRetryPolicy = Policy<HttpResponseMessage>
    // Do not retry if the circuit is open.
    // Note: x is always a BrokenCircuitException here, so this predicate always
    // returns false; the exception is never handled, which means "no retry".
    .Handle<BrokenCircuitException>(x => !(x is BrokenCircuitException))
    // Stop if an inner exception is a BrokenCircuitException
    .OrInner<AggregateException>(x => !(x.InnerException is BrokenCircuitException))
    // Retry if the status is one of httpStatusesToProcess (500 or 503)
    .OrResult(x => httpStatusesToProcess.Contains(x.StatusCode))
    // Retry the request two times, waiting 5 seconds between retries
    .WaitAndRetry(2, retryAttempt => TimeSpan.FromSeconds(5),
        (outcome, timeSpan, retryCount, context) =>
        {
            System.Console.WriteLine("Retrying... " + retryCount);
        });
```

The circuit-breaker policy works this way: it allows at most three (3) consecutive failures, then opens the circuit for thirty (30) seconds. It breaks ONLY on HTTP 500.

```csharp
customCircuitBreakerPolicy = Policy<HttpResponseMessage>
    // Handled results or exceptions count as failures and can trigger onBreak
    .Handle<AggregateException>(x => x.InnerException is HttpRequestException)
    // Only break when the server error is InternalServerError
    .OrResult(x => (int)x.StatusCode == 500)
    // Break after 3 consecutive failures,
    // and hold the circuit open for 30 seconds
    .CircuitBreaker(3, TimeSpan.FromSeconds(30),
        onBreak: (lastResponse, breakDelay) =>
        {
            System.Console.WriteLine("\n Circuit broken!");
        },
        onReset: () =>
        {
            System.Console.WriteLine("\n Circuit Reset!");
        },
        onHalfOpen: () =>
        {
            System.Console.WriteLine("\n Circuit is Half-Open");
        });
```

Finally, the two policies are nested this way, with the retry policy outside and the circuit-breaker inside:

```csharp
try
{
    customRetryPolicy.Execute(() =>
        customCircuitBreakerPolicy.Execute(() =>
        {
            // For testing purposes, "api/values" returns 500 every time
            HttpResponseMessage msResponse =
                GetHttpResponseAsync("api/values").Result;

            // This just prints messages to the console; not relevant here
            PrintHttpResponseAsync(msResponse);

            return msResponse;
        }));
}
catch (BrokenCircuitException e)
{
    System.Console.WriteLine("CB Error: " + e.Message);
}
```

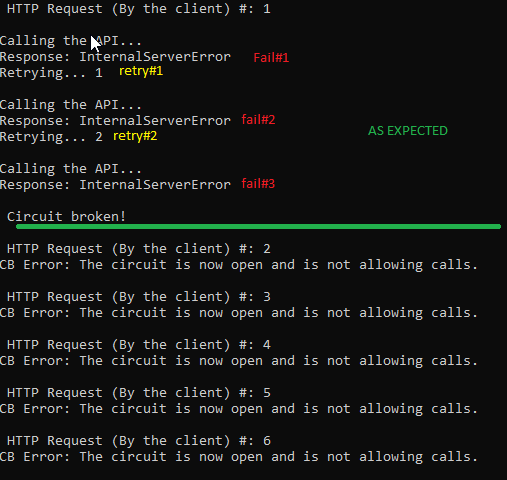

What result did I expect?

- The first server response is HTTP 500 (as expected)
- Retry #1 fails (as expected)
- Retry #2 fails (as expected)
- With three failures, the circuit-breaker is now open (as expected)
- GREAT! This works perfectly!
- The circuit-breaker stays open for the next thirty (30) seconds (as expected)
- Thirty seconds later, the circuit-breaker is half-open (as expected)
- One attempt is made to check the endpoint's health (as expected)
- The server responds with HTTP 500 (as expected)
- The circuit-breaker is open for the next thirty (30) seconds (as expected)
- HERE IS THE PROBLEM: an additional retry is launched when the circuit-breaker is already open!
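The following is a minimal, Polly-free simulation of the nesting above, written only to make the reported sequence concrete. It is a model under stated assumptions, not Polly's implementation: `BreakerExecute` and `RetryExecute` are hypothetical names, time is an integer clock rather than real sleeps, and a `null` result stands in for `BrokenCircuitException`. The modeled detail that matters is that the call which trips the breaker (here, the failing half-open trial) still hands its HTTP 500 result back to the outer retry, which, as far as I understand result-handling breakers in Polly, is also what Polly does.

```csharp
using System;
using System.Collections.Generic;

// Polly-free model (an assumption, not Polly's implementation) of the setup above:
// an outer retry (2 retries, 5 s apart, on HTTP 500/503 results) wrapping an inner
// circuit breaker (opens after 3 consecutive 500s, for 30 s). Time is simulated.
const int RetryWaitSeconds = 5, MaxRetries = 2;
const int FailuresBeforeBreak = 3, BreakSeconds = 30;

var log = new List<string>();
int now = 0;                 // simulated clock, in seconds
string state = "Closed";     // "Closed", "Open" or "HalfOpen"
int consecutiveFailures = 0;
int openedAt = 0;

// Inner policy. Returns the HTTP status, or null when an open circuit rejects the
// call without invoking the downstream (standing in for BrokenCircuitException).
int? BreakerExecute()
{
    if (state == "Open")
    {
        if (now - openedAt < BreakSeconds) return null;
        state = "HalfOpen";                     // break duration elapsed: allow one trial
        log.Add($"{now}s half-open");
    }
    int status = 500;                           // "api/values" always fails in the test
    log.Add($"{now}s call -> {status}");
    consecutiveFailures++;
    if (state == "HalfOpen" || consecutiveFailures >= FailuresBeforeBreak)
    {
        state = "Open";                         // onBreak would fire here
        openedAt = now;
        consecutiveFailures = 0;
        log.Add($"{now}s circuit broken");
    }
    // Breaking does not turn this call into a rejection: the 500 result that
    // tripped the breaker is still returned to the outer retry.
    return status;
}

// Outer policy. A rejection (null) is not handled, so it ends the execution at once;
// a 500/503 result is retried after a (simulated) wait.
void RetryExecute()
{
    for (int attempt = 0; ; attempt++)
    {
        int? result = BreakerExecute();
        if (result is null) { log.Add($"{now}s rejected: circuit open"); return; }
        bool retryable = result == 500 || result == 503;
        if (!retryable || attempt == MaxRetries) return;
        log.Add($"{now}s retry #{attempt + 1}");
        now += RetryWaitSeconds;
    }
}

RetryExecute();                     // first execution: 3 failures open the circuit
now = openedAt + BreakSeconds;      // the caller comes back once the break has elapsed
RetryExecute();                     // half-open trial fails, yet one retry still waits 5 s

foreach (var line in log) Console.WriteLine(line);
```

In the output, `40s circuit broken` is followed by `40s retry #1` and then `45s rejected: circuit open`: the retry waits 5 seconds only to be rejected by a circuit that had already opened, which is the extra retry described above.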

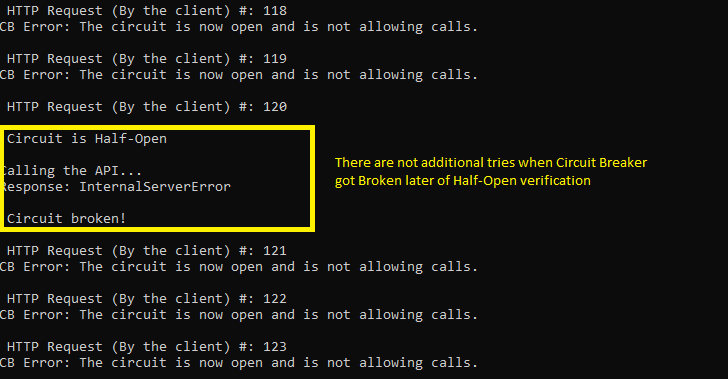

Look at the images:

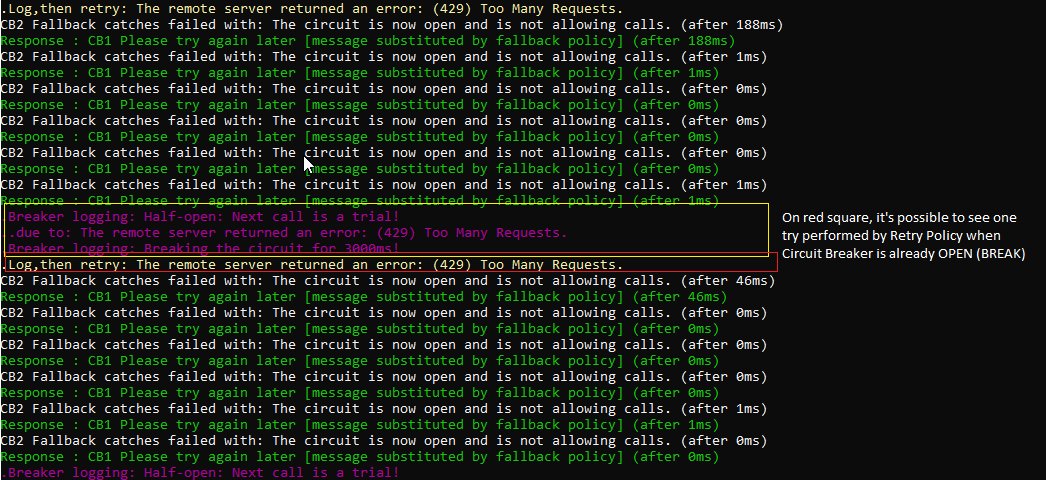

I'm trying to understand this behavior. Why is one additional retry executed when the circuit-breaker opens for the second, third, ..., Nth time?

I've reviewed the state-machine models of the retry and circuit-breaker policies, but I don't understand why this additional retry is performed.

Flow for the circuit-breaker: https://github.com/App-vNext/Polly/wiki/Circuit-Breaker#putting-it-all-together-

Flow for the retry policy: https://github.com/App-vNext/Polly/wiki/Retry#how-polly-retry-works

This really matters, because the retry wait is still spent (5 seconds in this example), which in the end is wasted time under high concurrency.
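To quantify that wasted wait, here is a hypothetical, Polly-free sketch that counts the retry-wait seconds spent after a failing half-open trial, with and without a guard that skips the retry when the inner circuit is open. The guard is an assumption, not something the code above does. In Polly v7 terms, one untested way to express it would be to keep the breaker typed as `CircuitBreakerPolicy<HttpResponseMessage>` (which exposes a `CircuitState` property, with the `CircuitState` enum in `Polly.CircuitBreaker`) and add `&& customCircuitBreakerPolicy.CircuitState != CircuitState.Open` to the retry's `OrResult` predicate. This differs from the `sleepDurationProvider`/`Context` approach suggested in the comments.

```csharp
using System;

// Hypothetical sketch (not Polly code): simulated seconds of retry waits spent after
// a failing half-open trial. With skipRetryWhenOpen, the retry condition also
// requires the inner circuit to be not open (the assumed guard).
int SecondsWaitedAfterFailedTrial(bool skipRetryWhenOpen)
{
    const int RetryWaitSeconds = 5, MaxRetries = 2;
    bool circuitOpen = false;          // half-open: one trial call is allowed
    int waited = 0;
    for (int attempt = 0; ; attempt++)
    {
        if (circuitOpen) return waited;           // rejected without calling downstream
        int status = 500;                         // the trial call still fails...
        circuitOpen = true;                       // ...so the breaker re-opens at once
        bool retryable = status == 500 || status == 503;
        if (skipRetryWhenOpen && circuitOpen) retryable = false;   // the guard
        if (!retryable || attempt == MaxRetries) return waited;
        waited += RetryWaitSeconds;               // the wait the retry policy spends
    }
}

Console.WriteLine(SecondsWaitedAfterFailedTrial(skipRetryWhenOpen: false)); // 5
Console.WriteLine(SecondsWaitedAfterFailedTrial(skipRetryWhenOpen: true));  // 0
```

The design choice being illustrated is that the decision to retry is made after the inner breaker has already recorded the failure, so the breaker's state at that moment is enough to tell that a retry would only be rejected.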

Any help or direction will be appreciated. Many thanks.

- The [asp.net] tag refers to ASP.NET (.NET Framework), not ASP.NET Core. – Foggia
- …`durationOfBreak`) all incoming requests are rejected immediately. You can define a `sleepDurationProvider` which receives a `Context` that can be shared between retry and cb. So, it is doable, but a bit tricky and hacky. Do you want me to provide an example? – Migrate
- …`WaitTimeToRetry`, every time that CB verify to `CLOSE` / `OPEN` later of the first break. One additional retry with that long time will be executed when the CB already knows that resources behind are failing. I think that trying a different approach of handling a strong-typed `Policy<TResult>` could have a different result, but my business need are handling HTTP statuses. I will continue trying meanwhile. – Impart
- …`Open` state and will not trigger until it transits to `Half-Open`. I hope it helps you a bit. – Migrate