Basically, I want to achieve this with Flask and LangChain: https://www.youtube.com/watch?v=x8uwwLNxqis.

I'm building a Q&A Flask app that uses LangChain in the backend, but I'm having trouble streaming the response from ChatGPT. My chain looks like this:

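```python
from langchain.chains import VectorDBQA
from langchain.chat_models import ChatOpenAI

# chain_type and vectorstore are arguments of from_chain_type, not of ChatOpenAI
chain = VectorDBQA.from_chain_type(
    llm=ChatOpenAI(model_name="gpt-3.5-turbo", streaming=True),
    chain_type="stuff",
    vectorstore=docsearch,
)

...

result = chain({"query": query})
output = result["result"]
```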

Jinja simply prints `{{ output }}`, and that works fine, but the result doesn't appear on the page until the entire response has finished. I want to stream the result as ChatGPT generates it.

I've tried using `stream_template`, but it doesn't work: instead of streaming the result, it prints the full response at once (although I could be doing something wrong).

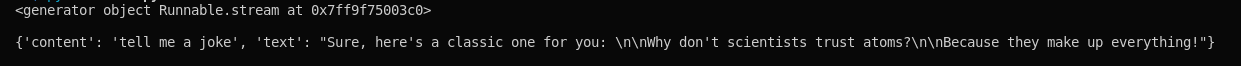
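For context on why `stream_template` alone can't help here: `chain({"query": query})` blocks until generation is complete, so by the time the template renders, `output` is already a finished string. `streaming=True` only makes the LLM report each token to its callbacks as it arrives; the return value still comes at the end. A common pattern is producer/consumer: a callback pushes tokens onto a queue from a worker thread, and a generator yields them to Flask. The sketch below is stdlib-only and is not the solution posted below. `QueueCallbackHandler`, `fake_chain`, `stream_tokens` and `_DONE` are hypothetical names, and `fake_chain` merely stands in for the real chain call. Only the method name `on_llm_new_token` mirrors LangChain's callback interface.

```python
import queue
import threading
from typing import Any, Callable, Iterator

# Sentinel pushed onto the queue once the chain has finished (or failed).
_DONE = object()


class QueueCallbackHandler:
    """Hypothetical handler that collects streamed tokens in a queue.

    Mirrors LangChain's streaming callback method name (on_llm_new_token);
    in a real app this would subclass LangChain's BaseCallbackHandler.
    """

    def __init__(self, q: "queue.Queue[Any]") -> None:
        self.q = q

    def on_llm_new_token(self, token: str, **kwargs: Any) -> None:
        # With streaming=True, the LLM calls this once per generated token.
        self.q.put(token)


def fake_chain(query: str, handler: QueueCallbackHandler) -> None:
    """Hypothetical stand-in for the blocking chain({"query": query}) call."""
    for token in ["Streaming ", "answer ", "to: ", query]:
        handler.on_llm_new_token(token)


def stream_tokens(
    query: str,
    chain_fn: Callable[[str, QueueCallbackHandler], None] = fake_chain,
) -> Iterator[str]:
    """Run the blocking chain in a worker thread and yield tokens as they arrive."""
    q: "queue.Queue[Any]" = queue.Queue()
    handler = QueueCallbackHandler(q)  # one handler per request

    def worker() -> None:
        try:
            chain_fn(query, handler)
        finally:
            q.put(_DONE)  # always unblock the consumer, even if the chain raises

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    while True:
        item = q.get()
        if item is _DONE:
            break
        yield item
    thread.join()


print("".join(stream_tokens("What is LangChain?")))  # prints "Streaming answer to: What is LangChain?"
```

In Flask, the generator could be returned as `Response(stream_with_context(stream_tokens(query)), mimetype="text/plain")`. Alternatively, it could be passed to `stream_template`, with the template iterating over it (`{% for chunk in output %}{{ chunk }}{% endfor %}`) rather than printing `{{ output }}`. With real LangChain, the handler would subclass `BaseCallbackHandler` and `chain_fn` would call the actual chain. How the handler is attached (for example `callbacks=[...]` versus the older `callback_manager`) depends on your LangChain version. Creating a fresh handler and queue per request keeps tokens from concurrent users separate.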

I finally solved it: